Weakly Supervised Faster-RCNN+FPN to classify animals in camera trap images

Aug 30, 2022

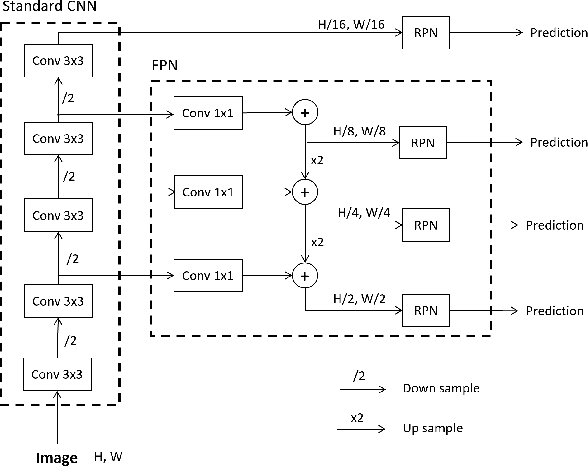

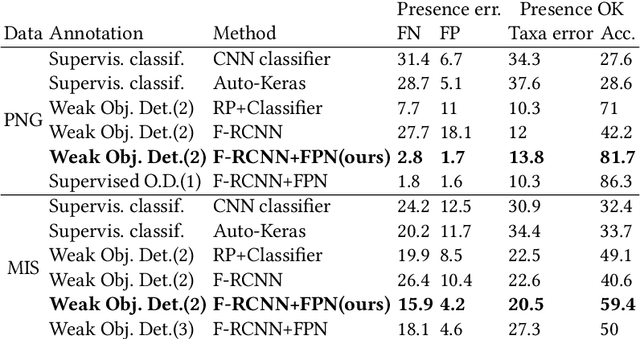

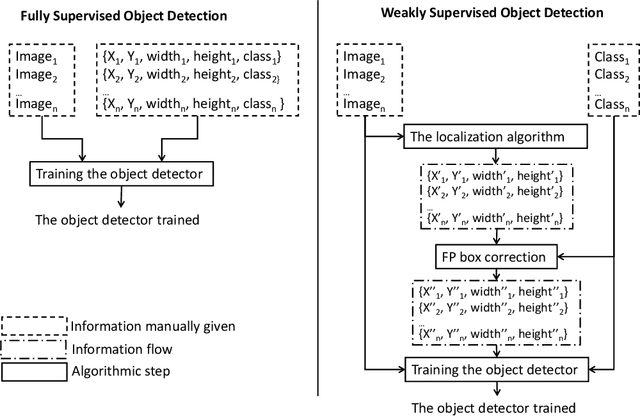

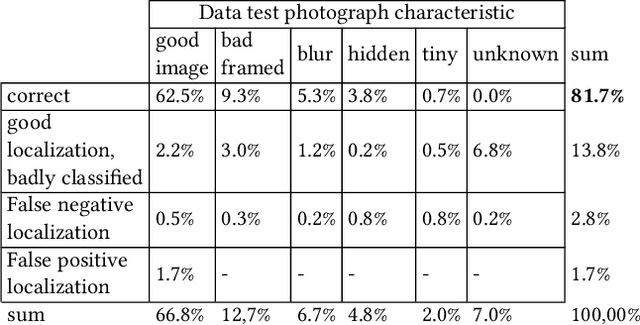

Camera traps have revolutionized research on many animal species that were previously nearly impossible to observe because of their habitat or behavior. They are cameras, generally fixed to a tree, that take a short sequence of images when triggered. Deep learning has the potential to reduce the resulting workload by automating the classification of images by taxon or as empty. However, a standard deep neural network classifier fails because animals often occupy only a small portion of the high-definition images. We therefore propose a workflow named Weakly Object Detection Faster-RCNN+FPN, which suits this challenge. The model is weakly supervised because it requires only the animal taxon label for each image and no manual bounding box annotations. First, it automatically produces weakly supervised bounding box annotations using the motion across multiple frames. Then, it trains a Faster-RCNN+FPN model using this weak supervision. Experimental results were obtained on two datasets from biodiversity monitoring campaigns in Papua New Guinea and Missouri, and then on an easily reproducible testbed.
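
To make the idea of motion-based annotation concrete, the following is a minimal sketch rather than the authors' procedure, which the abstract does not detail. It assumes a sequence of grayscale frames from one trigger, estimates a static background as the per-pixel median, and takes the bounding box of the pixels that differ from it. The function name `motion_boxes` and the `threshold` parameter are hypothetical and chosen for illustration only.

```python
import numpy as np


def motion_boxes(frames, threshold=30.0):
    """Return one box (x_min, y_min, x_max, y_max) per frame, or None.

    Assumption (not from the paper): the background is the per-pixel
    median of the sequence, and any pixel whose absolute difference
    from it exceeds `threshold` counts as motion.
    """
    stack = np.asarray(frames, dtype=np.float32)  # shape (T, H, W)
    background = np.median(stack, axis=0)         # static scene estimate
    boxes = []
    for frame in stack:
        mask = np.abs(frame - background) > threshold
        if not mask.any():
            boxes.append(None)                    # no motion: likely empty
            continue
        ys, xs = np.nonzero(mask)
        boxes.append((int(xs.min()), int(ys.min()),
                      int(xs.max()), int(ys.max())))
    return boxes
```

Each resulting box could then be paired with the image-level taxon label to form a weak training annotation for a detector. A real pipeline would likely also need noise filtering and handling of lighting changes, which this sketch omits.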
