Voice Aging with Audio-Visual Style Transfer

Paper and Code

Oct 05, 2021

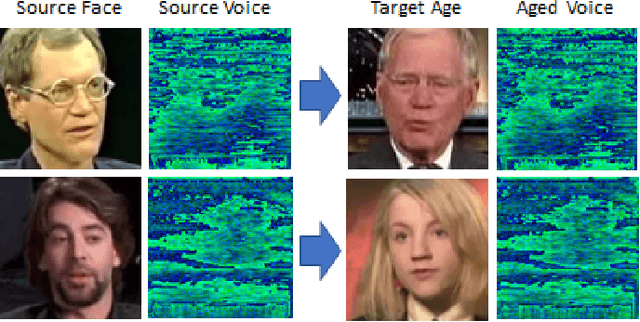

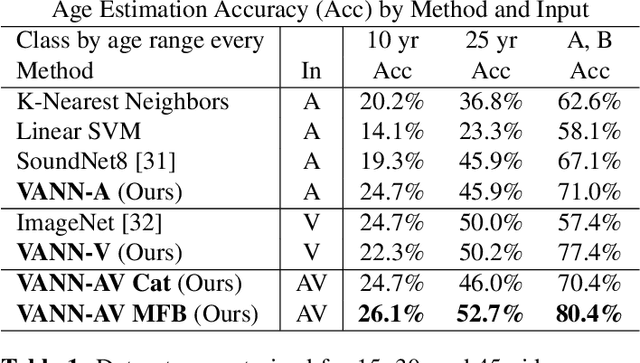

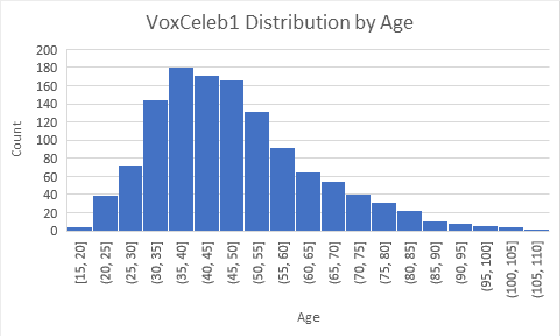

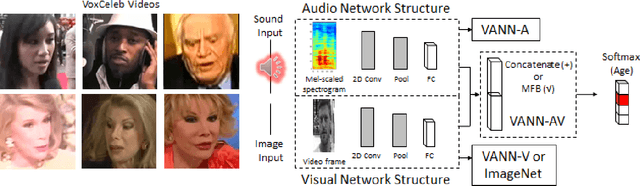

Face aging techniques have used generative adversarial networks (GANs) and style transfer learning to transform a person's appearance to look younger or older. Identity is maintained by conditioning these generative networks on a learned vector representation of the source content. In this work, we apply a similar approach to age a speaker's voice, which we refer to as voice aging. We first analyze the classification of a speaker's age by training a convolutional neural network (CNN) on the speaker's voice and face data from the Common Voice and VoxCeleb datasets. We then generate aged voices by using style transfer to transform an input spectrogram to various ages, and we demonstrate our method in a mobile app.
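
The abstract treats the spectrogram as the representation that is classified and transformed, but it does not say how the spectrogram is computed. As a rough illustration only, the sketch below builds a log-magnitude spectrogram from a raw waveform using a windowed short-time Fourier transform. The function names, frame length, hop size, and toy input are all assumptions made for this example, not details from the paper, and a real pipeline would likely use an FFT library rather than the naive DFT shown here.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def log_spectrogram(signal, frame_len=64, hop=32, eps=1e-10):
    """Return a list of frames; each frame holds log-magnitudes
    for frequency bins 0 .. frame_len // 2.

    frame_len, hop, and eps are illustrative defaults (assumptions).
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        # Apply the window to one frame of the waveform.
        chunk = [signal[start + i] * window[i] for i in range(frame_len)]
        mags = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT for bin k: O(n^2), kept simple for readability.
            acc = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            mags.append(math.log(abs(acc) + eps))
        frames.append(mags)
    return frames


# Toy input (assumption): a 128 Hz tone sampled at 1024 Hz.
# With 64-sample frames, each bin spans 16 Hz, so the tone sits in bin 8.
sample_rate = 1024
tone = [math.sin(2 * math.pi * 128 * t / sample_rate) for t in range(256)]
spec = log_spectrogram(tone)
peak_bins = [frame.index(max(frame)) for frame in spec]
```

Each row of `spec` is one time frame and each column one frequency bin, which is the kind of two-dimensional, image-like input a CNN classifier or a style transfer network can operate on.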
