Vision-Language Modeling with Regularized Spatial Transformer Networks for All Weather Crosswind Landing of Aircraft

Paper and Code

May 09, 2024

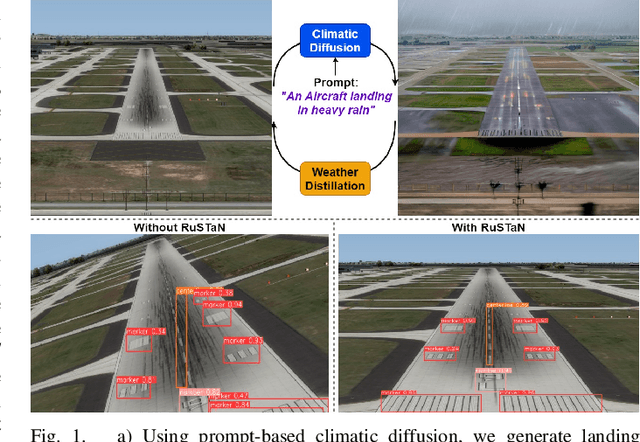

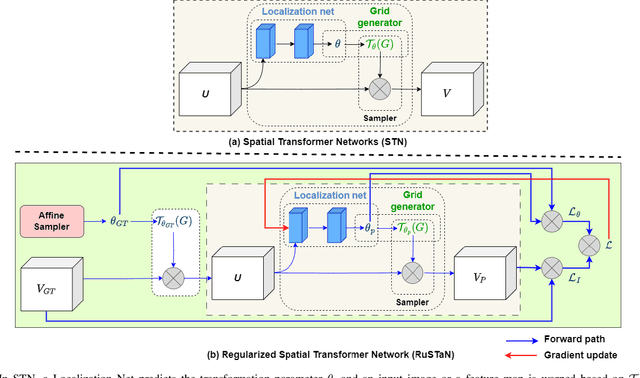

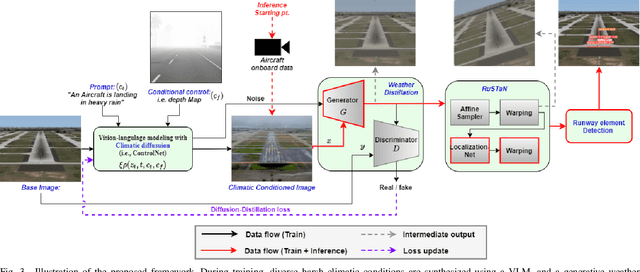

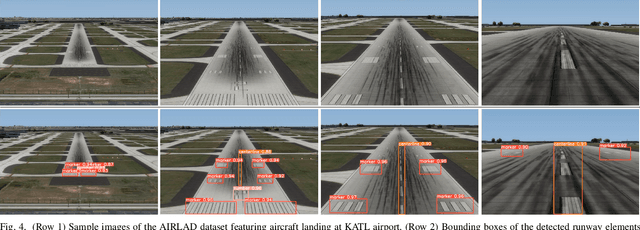

The Human Vision System (HVS) has an intrinsic capability to perceive depth of field and extract salient information, which leads pilots to prefer manual landing over an autoland approach. However, harsh weather hinders visibility, and a pilot must have a clear view of runway elements before reaching the minimum decision altitude. A vision-based system designed to localize runway elements and assist the pilot in manual landing is likewise affected, especially during crosswind landings, where aircraft camera images suffer projective distortion. To combat this, we propose integrating a prompt-based climatic diffusion network with a weather distillation model using a novel diffusion-distillation loss. Specifically, the diffusion model synthesizes climate-conditioned landing images, and the weather distillation model learns the inverse mapping by removing those visual degradations. Then, to tackle the crosswind landing scenario, a novel Regularized Spatial Transformer Networks (RuSTaN) model learns to calibrate for projective distortion using self-supervised learning, which minimizes the localization error of the downstream runway object detector. Finally, we simulated a clear-day landing scenario at the world's busiest airport to curate an image-based Aircraft Landing Dataset (AIRLAD) and experimentally validated our contributions on this dataset to benchmark performance.
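
To make the notion of projective distortion concrete, the following minimal NumPy sketch maps the corners of a rectangle through a 3x3 homography and then undoes the warp with the inverse matrix. It is only an illustration of the geometry: the matrix `H_distort`, the helper `apply_homography`, and the corner coordinates are hypothetical values chosen for this example, not taken from the paper. RuSTaN is described as learning the calibration in a self-supervised way, whereas this sketch assumes the distortion matrix is already known.

```python
import numpy as np

def apply_homography(H, points):
    """Map an (N, 2) array of pixel coordinates through a 3x3 homography H."""
    pts = np.asarray(points, dtype=float)
    # Append a column of ones to move to homogeneous coordinates.
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])
    mapped = homog @ H.T
    # Perspective divide brings the result back to 2D pixel coordinates.
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical projective distortion (illustrative values, not from the paper).
H_distort = np.array([
    [1.0, 0.2, 5.0],
    [0.1, 1.0, -3.0],
    [1e-3, 2e-3, 1.0],
])

# Corners of a hypothetical rectangular region in the undistorted image.
corners = np.array([[0.0, 0.0], [100.0, 0.0], [100.0, 50.0], [0.0, 50.0]])

# Forward warp: what the camera would see under the assumed distortion.
distorted = apply_homography(H_distort, corners)

# Calibration with a known matrix: apply the inverse homography.
recovered = apply_homography(np.linalg.inv(H_distort), distorted)
```

In this toy setting `recovered` matches `corners` up to floating-point error. A learned calibration would instead have to estimate the correcting transform from the image itself.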
