Video Object Segmentation using Tracked Object Proposals


Jul 20, 2017

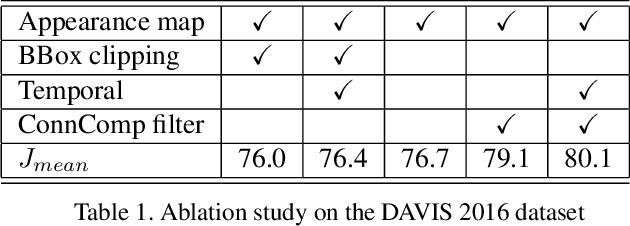

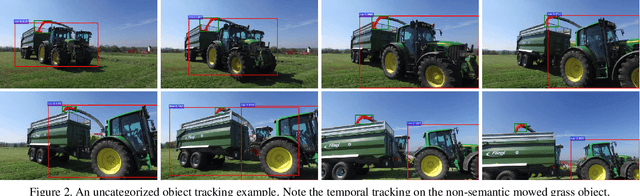

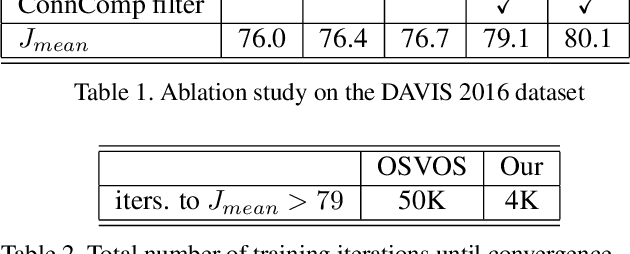

We present an approach to semi-supervised video object segmentation in the context of the DAVIS 2017 challenge. Our approach combines category-based object detection, category-independent object appearance segmentation, and temporal object tracking. We are motivated by the fact that an object's semantic category tends not to change throughout the video, while its appearance and location can vary considerably. To capture the specific object appearance independent of its category, we train a fully convolutional network for each video using augmentations of the given annotated frame. We refine the appearance segmentation mask with bounding boxes provided either by a semantic object detection network, when applicable, or by the previous frame's prediction. By introducing a temporal continuity constraint on the detected boxes, we improve the object segmentation mask of the appearance network and achieve competitive results on the DAVIS datasets.
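The box-based refinement described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`box_iou`, `select_box`, `refine_mask`), the `(x0, y0, x1, y1)` box format with exclusive upper bounds, and the `min_iou` threshold of 0.5 are all assumptions made for illustration. The sketch treats temporal continuity as a simple overlap test against the previous frame's box, which is one plausible reading of the constraint.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes (x0, y0, x1, y1); x1 and y1 are exclusive."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def select_box(detections, prev_box, min_iou=0.5):
    """Pick the detection that best overlaps the previous frame's box.

    Hypothetical continuity rule: if no detection overlaps the previous
    box by at least `min_iou`, fall back to the previous box itself.
    """
    best = max(detections, key=lambda d: box_iou(d, prev_box), default=None)
    if best is None or box_iou(best, prev_box) < min_iou:
        return prev_box
    return best


def refine_mask(mask, box):
    """Keep only the appearance-mask pixels that fall inside the box."""
    x0, y0, x1, y1 = box
    refined = np.zeros_like(mask)
    refined[y0:y1, x0:x1] = mask[y0:y1, x0:x1]
    return refined


# Toy frame: a 3x3 object plus one spurious pixel far from it.
mask = np.zeros((6, 6), dtype=np.uint8)
mask[1:4, 1:4] = 1
mask[5, 5] = 1

prev_box = (1, 1, 4, 4)                      # box from the previous frame
detections = [(0, 0, 4, 4), (4, 4, 6, 6)]    # detector output for this frame

box = select_box(detections, prev_box)
refined = refine_mask(mask, box)
```

In this toy example the detection that overlaps the previous box is selected, and the spurious pixel outside it is removed from the mask. When the detector returns nothing usable, `select_box` returns the previous box, mirroring the fallback to a previous frame prediction mentioned in the abstract.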
