Video Captioning in Compressed Video

Jan 02, 2021

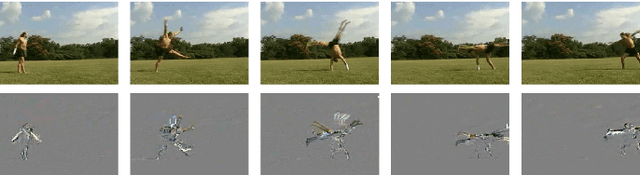

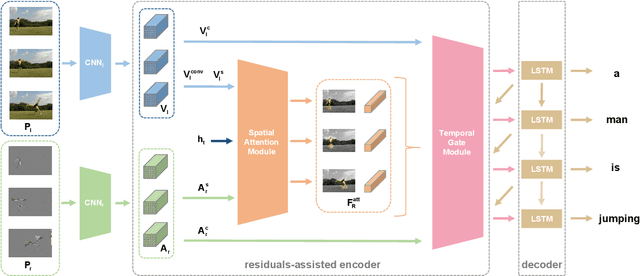

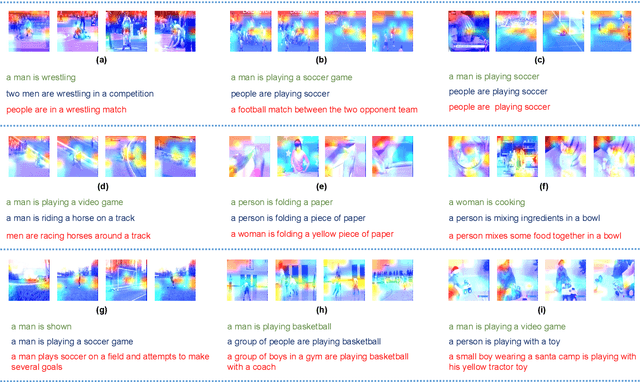

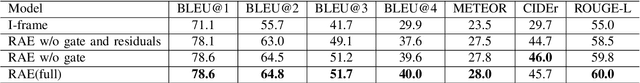

Existing approaches to video captioning concentrate on exploring global frame features in uncompressed videos, while the saliency information already encoded in compressed videos, which is critical and available at no extra cost, is generally neglected. We propose a video captioning method that operates directly on the stored compressed videos. To learn a discriminative visual representation for video captioning, we design a residuals-assisted encoder (RAE), which spots regions of interest in I-frames with the assistance of the residual frames. First, we obtain the spatial attention weights by extracting features of the residuals as the saliency value of each location in the I-frame, and we design a spatial attention module to refine these attention weights. We further propose a temporal gate module that determines how much the attended features contribute to caption generation, which makes the model more robust to noisy signals in the compressed videos. Finally, a Long Short-Term Memory (LSTM) network decodes the visual representations into descriptions. We evaluate our method on two benchmark datasets and demonstrate the effectiveness of our approach.
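The encoder described above can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not give the equations, so the linear saliency projection `w_res`, the softmax normalization, and the convex-mix form of the gate are all assumptions chosen for illustration, as are the function names. Residual features score each spatial location, the scores become attention weights over the I-frame features, and a scalar gate decides how much the attended feature contributes relative to a global (mean-pooled) I-frame feature.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def spatial_attention(iframe_feats, residual_feats, w_res):
    """Attend over I-frame locations using residual-derived saliency.

    iframe_feats:   N locations, each a D-dimensional feature vector
    residual_feats: N locations, each an R-dimensional feature vector
    w_res:          R weights projecting a residual feature to a scalar
                    saliency score (hypothetical parameter)
    """
    saliency = [sum(w * r for w, r in zip(w_res, loc)) for loc in residual_feats]
    weights = softmax(saliency)
    dim = len(iframe_feats[0])
    attended = [
        sum(a * loc[d] for a, loc in zip(weights, iframe_feats))
        for d in range(dim)
    ]
    return weights, attended


def temporal_gate(attended, global_feat, w_gate, b_gate):
    """Mix attended and global features with a scalar gate in (0, 1).

    The convex-mix form is an assumption; the abstract only says the gate
    controls how much the attended features contribute.
    """
    score = sum(w * x for w, x in zip(w_gate, attended + global_feat)) + b_gate
    g = sigmoid(score)
    return [g * a + (1.0 - g) * f for a, f in zip(attended, global_feat)]


# Toy example: 3 spatial locations, 2-dim I-frame features, 1-dim residuals.
iframe_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
residual_feats = [[0.1], [2.0], [0.1]]  # location 1 has the most motion
weights, attended = spatial_attention(iframe_feats, residual_feats, [1.0])

# Global feature: mean over locations.
global_feat = [sum(loc[d] for loc in iframe_feats) / 3 for d in range(2)]
gated = temporal_gate(attended, global_feat, [0.0] * 4, 0.0)  # gate = 0.5
```

In this toy input the location with the largest residual receives the largest attention weight. A strongly negative gate score falls back to the global feature, which is one way a model could suppress attended features derived from noisy residuals.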
