Utilizing Skipped Frames in Action Repeats via Pseudo-Actions

Paper and Code

May 07, 2021

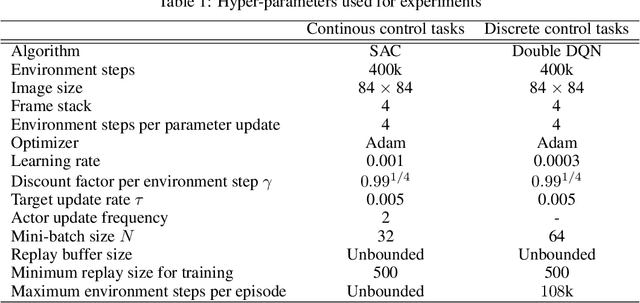

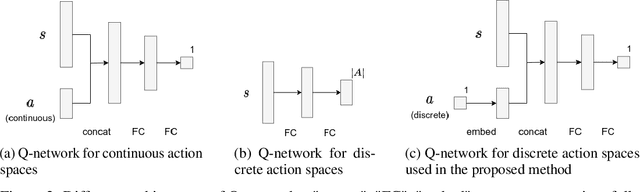

In many deep reinforcement learning settings, when an agent takes an action, it repeats the same action a predefined number of times without observing the states until the next action-decision point. This technique of action repetition has several merits in training the agent, but the data between action-decision points (i.e., intermediate frames) are, in effect, discarded. Since the amount of training data is inversely proportional to the interval of action repeats, they can have a negative impact on the sample efficiency of training. In this paper, we propose a simple but effective approach to alleviate to this problem by introducing the concept of pseudo-actions. The key idea of our method is making the transition between action-decision points usable as training data by considering pseudo-actions. Pseudo-actions for continuous control tasks are obtained as the average of the action sequence straddling an action-decision point. For discrete control tasks, pseudo-actions are computed from learned action embeddings. This method can be combined with any model-free reinforcement learning algorithm that involves the learning of Q-functions. We demonstrate the effectiveness of our approach on both continuous and discrete control tasks in OpenAI Gym.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge