Using LSTM and SARIMA Models to Forecast Cluster CPU Usage

Jul 16, 2020

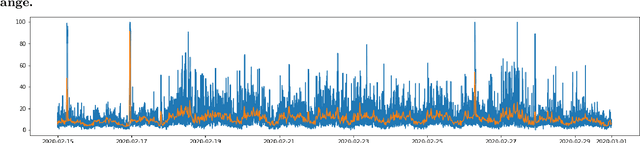

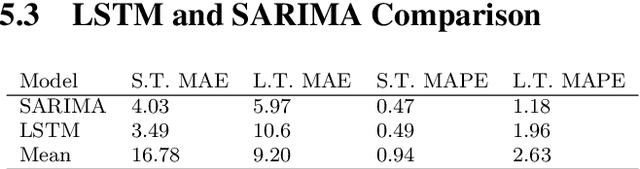

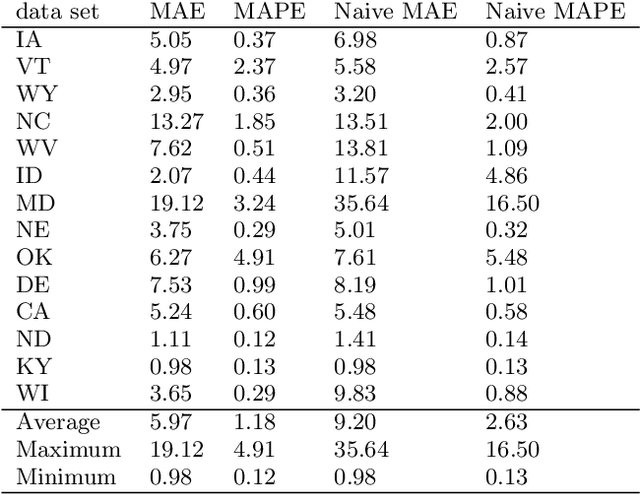

As large-scale cloud computing centers become more popular than individual servers, predicting future resource demand has become an important problem. Forecasting resource needs allows public cloud providers to proactively allocate or deallocate resources for cloud services. This work seeks to predict one resource, CPU usage, over both a short-term and a long-term time scale. To gain insight into the model characteristics that best support specific tasks, we consider two vastly different architectures: the historically relevant SARIMA model and the more modern LSTM neural network. We apply these models to Azure data resampled to 20 minutes per data point, with the goal of predicting usage over the next hour for the short-term task and over the next three days for the long-term task. The SARIMA model outperformed the LSTM on the long-term prediction task but performed worse on the short-term task. Furthermore, the LSTM model was more robust, whereas the SARIMA model relied on the data meeting certain assumptions about seasonality.
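To make the two forecasting horizons concrete, the following is a minimal sketch of the resampling step and of how the horizons translate into prediction steps at 20 minutes per data point. It is not the authors' code: the helper names `steps_in` and `resample_mean`, the use of a mean to aggregate each bin, and the 5-minute raw samples are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Bin width taken from the abstract: one data point per 20 minutes.
BIN = timedelta(minutes=20)


def steps_in(horizon, bin_width=BIN):
    """Number of whole bins of width bin_width that fit in horizon."""
    return horizon // bin_width


def resample_mean(samples, bin_width=BIN):
    """Average (timestamp, value) samples into fixed-width bins.

    Aggregating by mean is an assumption; the abstract only says the
    data was resampled to 20 minutes per data point.
    """
    epoch = datetime(1970, 1, 1)
    sums = defaultdict(lambda: [0.0, 0])
    for ts, value in samples:
        # Floor the timestamp to the start of its bin.
        start = epoch + ((ts - epoch) // bin_width) * bin_width
        sums[start][0] += value
        sums[start][1] += 1
    return sorted((start, total / count) for start, (total, count) in sums.items())


# Forecast horizons from the abstract, expressed in 20-minute steps.
SHORT_STEPS = steps_in(timedelta(hours=1))  # next hour
LONG_STEPS = steps_in(timedelta(days=3))    # next three days

# Hypothetical raw CPU readings at 5-minute spacing (made-up values).
raw = [
    (datetime(2020, 1, 1, 0, 0), 10.0),
    (datetime(2020, 1, 1, 0, 5), 20.0),
    (datetime(2020, 1, 1, 0, 10), 30.0),
    (datetime(2020, 1, 1, 0, 15), 40.0),
    (datetime(2020, 1, 1, 0, 20), 50.0),
    (datetime(2020, 1, 1, 0, 25), 70.0),
]
resampled = resample_mean(raw)
```

Under these assumptions the short-term task is a 3-step forecast and the long-term task a 216-step forecast, which illustrates why the two tasks can favor different model families.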
