Using an AI creativity system to explore how aesthetic experiences are processed along the brain's perceptual neural pathways

Sep 15, 2019

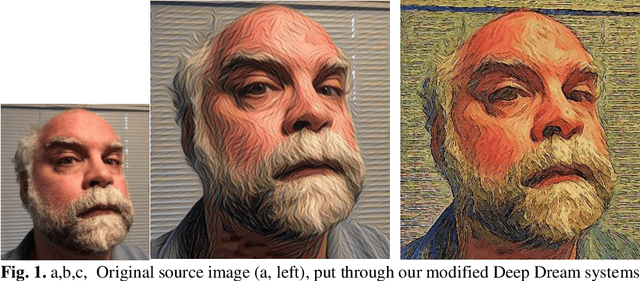

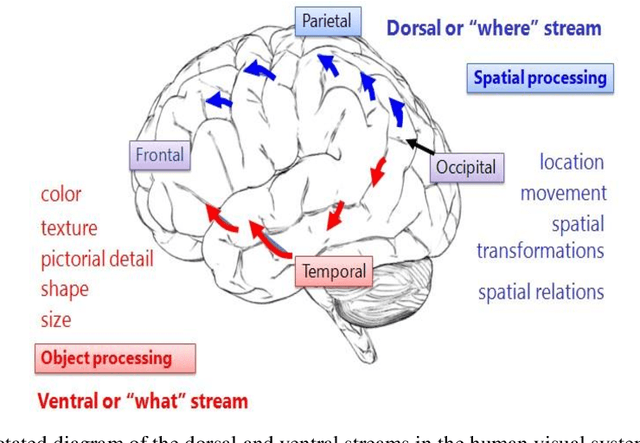

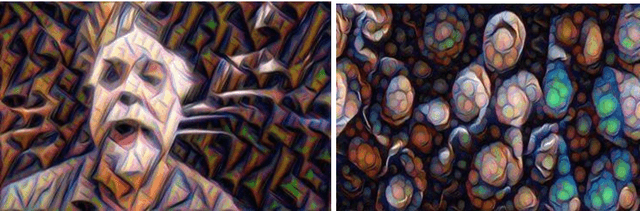

As AI techniques have grown more sophisticated, their application has expanded to ever newer fields. Increasingly, these systems are used to model human aesthetics and creativity, e.g. how humans create artworks and design products. Our lab has developed one such AI creativity system, based on deep learning, that can create artworks in the form of images and videos. In this paper, we describe this system and its use in studying the human visual system and the formation of aesthetic experiences. Specifically, we show how time-based, AI-created media can be used to explore the dual-pathway neuro-architecture of the human visual system and how it relates to higher cognitive judgments, such as aesthetic experiences, that rely on these divergent information streams. We propose a theoretical framework for how movement within percepts such as video clips engages reflexive attention and shifts focus to visual information that is primarily processed via the dorsal stream, thereby modulating aesthetic experiences that rely on information relayed via the ventral stream. We outline our recent study in support of the proposed framework, which serves as the first study to investigate the relationship between the two visual streams and aesthetic experiences.
