Unsupervised word sense disambiguation in dynamic semantic spaces


Feb 16, 2018

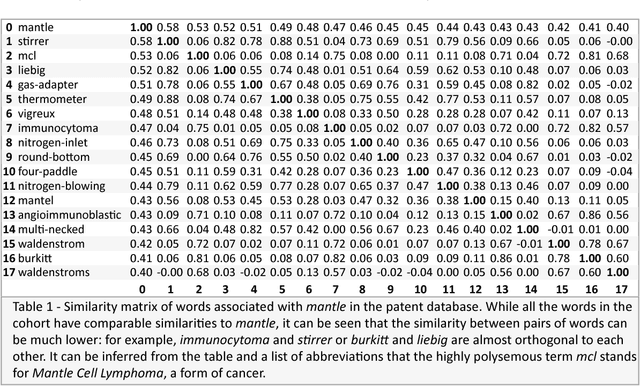

In this paper, we are mainly concerned with the ability to quickly and automatically distinguish word senses in dynamic semantic spaces, in which new terms and new senses appear frequently. Such spaces are built "on the fly" from constantly evolving data sets such as Wikipedia, repositories of patent grants and applications, or large sets of legal documents for Technology Assisted Review and e-discovery. This immediacy rules out supervision as well as the use of a priori training sets. We show that the various senses of a term can be made apparent automatically with a simple clustering algorithm, each sense being a vector in the semantic space. While we consider here only semantic spaces built from random vectors, this algorithm should work with any kind of embedding, provided that meaningful similarities between terms can be computed and that they fulfill at least two basic conditions: terms with close meanings have high similarities, and terms with unrelated meanings have near-zero similarities.
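
To make the idea concrete, here is a minimal sketch of how senses might emerge from clustering in a random-vector space. It is not the algorithm from the paper, whose details the abstract does not give. It assumes a greedy single-pass clustering, Gaussian random term vectors, a toy set of contexts for the word "bank", and hypothetical names and parameters (`DIM`, `THRESHOLD`, `cluster_senses`). Each resulting cluster sum plays the role of one sense vector.

```python
import math
import random

DIM = 1024        # assumed dimensionality of the toy semantic space
THRESHOLD = 0.3   # assumed similarity cut-off for joining an existing sense


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def context_vector(terms, term_vectors):
    """Represent a context as the sum of the vectors of its terms."""
    vec = [0.0] * DIM
    for term in terms:
        vec = [a + b for a, b in zip(vec, term_vectors[term])]
    return vec


def cluster_senses(context_vectors, threshold=THRESHOLD):
    """Greedy single-pass clustering (an illustrative stand-in, not the paper's method).

    Each context joins the most similar existing sense if the similarity
    reaches the threshold; otherwise it starts a new sense.
    Returns the sense vectors and, per sense, the indices of its contexts.
    """
    senses, members = [], []
    for idx, vec in enumerate(context_vectors):
        best, best_sim = None, threshold
        for s, sense in enumerate(senses):
            sim = cosine(vec, sense)
            if sim >= best_sim:
                best, best_sim = s, sim
        if best is None:
            senses.append(list(vec))
            members.append([idx])
        else:
            senses[best] = [a + b for a, b in zip(senses[best], vec)]
            members[best].append(idx)
    return senses, members


# Toy contexts in which the ambiguous word "bank" occurs (made-up data).
contexts = [
    ["river", "water", "shore"],
    ["money", "loan", "credit"],
    ["river", "shore", "fishing"],
    ["loan", "money", "interest"],
]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
vocabulary = sorted({term for ctx in contexts for term in ctx})
term_vectors = {t: [rng.gauss(0.0, 1.0) for _ in range(DIM)] for t in vocabulary}

ctx_vecs = [context_vector(ctx, term_vectors) for ctx in contexts]
senses, members = cluster_senses(ctx_vecs)
print(members)  # indices of the contexts grouped under each discovered sense
```

The sketch relies on the two conditions stated above. Independent random vectors in a high-dimensional space are nearly orthogonal, so unrelated contexts have near-zero similarity, while contexts that share terms have high similarity. No training set or supervision is involved, and a context from a previously unseen sense simply opens a new cluster.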
