Unsupervised Domain Adaptation Approaches for Chessboard Recognition


Oct 19, 2024

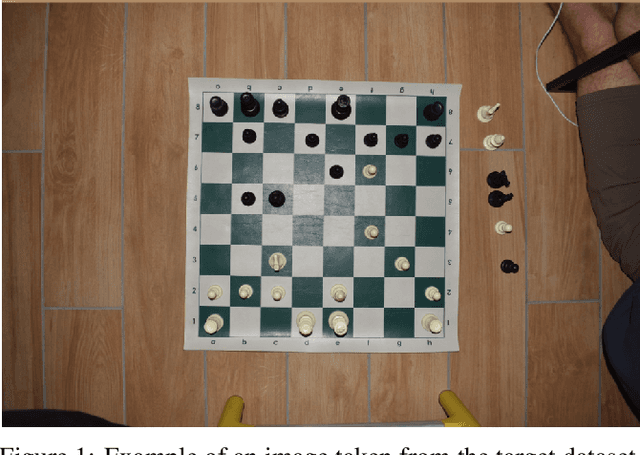

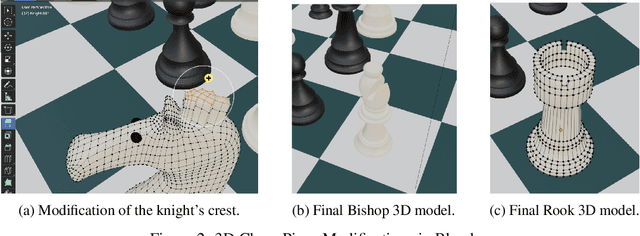

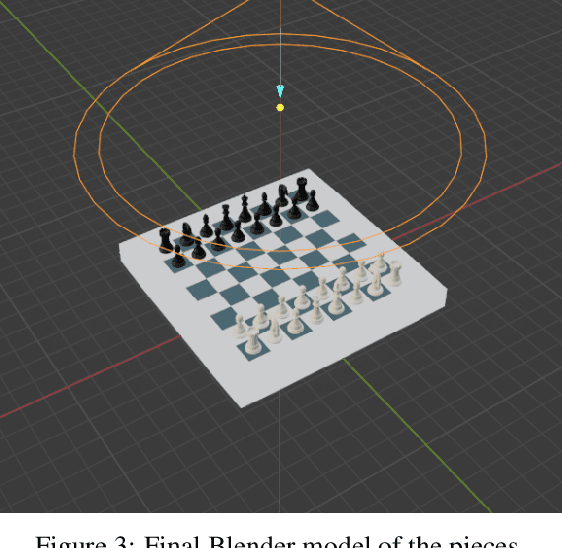

Chess involves extensive study and requires players to keep manual records of their matches, a process that is time-consuming and distracting. The lack of high-quality labeled photographs of chessboards, and the tedium of labeling them manually, have hindered the wide application of Deep Learning (DL) to automating this record-keeping. This paper proposes an end-to-end pipeline that employs domain adaptation (DA) to predict the labels of real, top-view, unlabeled chessboard images using synthetic, labeled images. The pipeline begins with a pre-processing phase that detects the board, crops the individual squares, and feeds them one at a time to a DL model. The model predicts the label of each square and passes the ordered predictions to a post-processing stage that generates the Forsyth-Edwards Notation (FEN) of the position. Three approaches are considered: a VGG16 model pre-trained on ImageNet, referred to here as the Base-Source model, which is fine-tuned to predict source-domain squares and then used to predict target-domain squares without any domain adaptation; an improved version of the Base-Source model that applies CORAL loss to some of the final fully connected layers of the VGG16 to implement DA; and a Domain Adversarial Neural Network (DANN) that performs DA through the adversarial training of a domain discriminator. In addition, although we opted not to use the target-domain labels in this study, we trained a baseline with the same architecture as the Base-Source model (named Base-Target) directly on the target domain to obtain an upper bound on the performance achievable through domain adaptation. The results show that the DANN model loses only 3% in accuracy compared with the Base-Target model while saving all the effort required to label the data.
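To make the post-processing step concrete, here is a minimal sketch of how 64 ordered square predictions could be collapsed into the piece-placement field of a FEN string. It is an illustration under stated assumptions, not the paper's implementation: the function name `predictions_to_fen_placement`, the `"empty"` label, the use of FEN piece letters as class labels, and the square ordering (rank 8 down to rank 1, files a to h within each rank) are all hypothetical. The remaining FEN fields (side to move, castling rights, and so on) cannot be read from a single image and are left out.

```python
EMPTY = "empty"  # hypothetical class label for an empty square


def predictions_to_fen_placement(labels):
    """Convert 64 ordered square labels into the FEN piece-placement field.

    Assumes labels are ordered rank 8 to rank 1, files a to h, and that
    occupied squares are labeled with FEN piece letters (e.g. "P", "n").
    """
    if len(labels) != 64:
        raise ValueError("expected 64 square labels")

    ranks = []
    for r in range(8):
        row = labels[r * 8:(r + 1) * 8]
        parts = []
        empties = 0  # length of the current run of empty squares
        for label in row:
            if label == EMPTY:
                empties += 1
            else:
                if empties:
                    parts.append(str(empties))  # flush the run as a digit
                    empties = 0
                parts.append(label)
        if empties:
            parts.append(str(empties))
        ranks.append("".join(parts))
    return "/".join(ranks)


# Usage: the standard starting position
start = (
    list("rnbqkbnr") + ["p"] * 8 + [EMPTY] * 32 + ["P"] * 8 + list("RNBQKBNR")
)
print(predictions_to_fen_placement(start))
```

Run-length encoding the empty squares per rank is what FEN requires; a full pipeline would also need to validate the predicted position before emitting it.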
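The CORAL loss mentioned in the abstract can be illustrated with a small dependency-free sketch. It follows the standard Deep CORAL definition, the squared Frobenius norm of the difference between the source and target feature covariances, scaled by 1/(4d²), where d is the feature dimension. How the paper weights this term or which layers it attaches to is not stated here, so the function names and the plain-list representation of feature batches are assumptions made purely for illustration; a real training loop would compute this on framework tensors.

```python
def covariance(features):
    """Unbiased covariance matrix of a batch (rows: samples, columns: features)."""
    n = len(features)
    d = len(features[0])
    means = [sum(row[j] for row in features) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in features]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def coral_loss(source, target):
    """Squared Frobenius distance between covariances, scaled by 1 / (4 d^2)."""
    d = len(source[0])
    cov_s = covariance(source)
    cov_t = covariance(target)
    sq_frobenius = sum(
        (cov_s[i][j] - cov_t[i][j]) ** 2 for i in range(d) for j in range(d)
    )
    return sq_frobenius / (4 * d * d)


# Usage: two tiny batches of 2-dimensional features
source_batch = [[0.0, 0.0], [2.0, 2.0]]
target_batch = [[0.0, 0.0], [4.0, 4.0]]
print(coral_loss(source_batch, source_batch))  # identical statistics give 0.0
print(coral_loss(source_batch, target_batch))
```

The loss is zero when the two batches share second-order statistics, which is why minimizing it alongside the classification loss pulls the source and target feature distributions together without needing target labels.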
