Understanding Regularisation Methods for Continual Learning

Jun 11, 2020

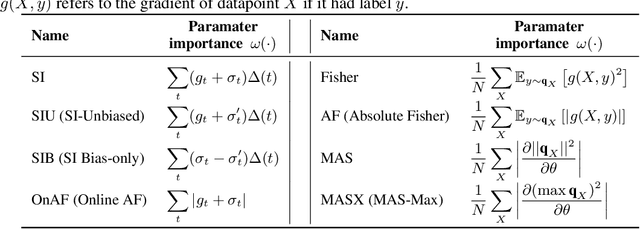

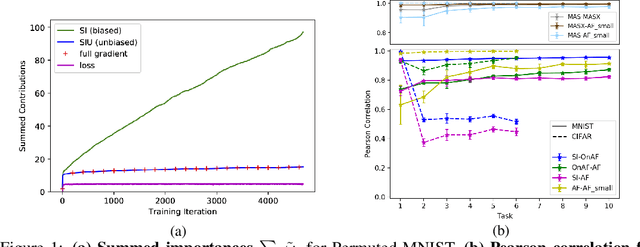

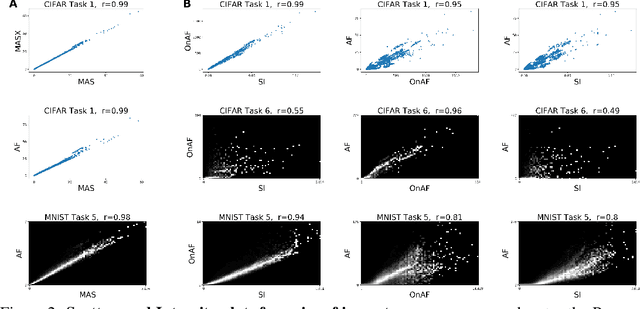

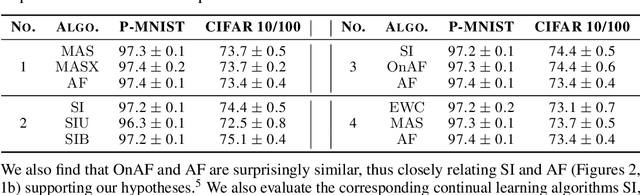

The problem of Catastrophic Forgetting has received a lot of attention in recent years. An important class of proposed solutions is the so-called regularisation approaches, which protect weights from large changes according to their importance. Various ways to measure this importance have been put forward, each stemming from a different theoretical or intuitive motivation. We present mathematical and empirical evidence that two of these methods, Synaptic Intelligence and Memory Aware Synapses, approximate a rescaled version of the Fisher Information, a theoretically justified importance measure also used in the literature. As part of our methods, we show that the importance approximation of Synaptic Intelligence is biased and that, in fact, this bias explains its performance best. Altogether, our results offer a theoretical account of the effectiveness of different regularisation approaches and uncover similarities between the methods proposed so far.
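
To make the idea of "protecting weights according to their importance" concrete, here is a minimal sketch of the general form such a regulariser can take: a quadratic penalty on the distance from the old weights, scaled per weight by an importance value. It is not taken from the paper's code. The function names, the `strength` parameter, and the use of mean squared per-sample gradients as a diagonal Fisher-style importance estimate are all illustrative assumptions.

```python
# Illustrative sketch only: names and parameters are assumptions, not the paper's code.

def quadratic_penalty(weights, old_weights, importance, strength=1.0):
    """Importance-weighted penalty: strength * sum_i importance_i * (w_i - w_old_i)^2.

    Weights with high importance are penalised more for moving away from
    the values they had after the previous task.
    """
    return strength * sum(
        omega * (w - w_old) ** 2
        for w, w_old, omega in zip(weights, old_weights, importance)
    )


def mean_squared_gradients(per_sample_grads):
    """Average the squared gradient of each weight over samples.

    Assumption: each inner list holds the gradient of the log-likelihood
    with respect to every weight for one sample. Averaging the squares
    gives a diagonal Fisher-style importance estimate.
    """
    n_samples = len(per_sample_grads)
    n_weights = len(per_sample_grads[0])
    return [
        sum(grads[i] ** 2 for grads in per_sample_grads) / n_samples
        for i in range(n_weights)
    ]


# Hypothetical usage: two weights, two samples.
importance = mean_squared_gradients([[1.0, 2.0], [3.0, 0.0]])
penalty = quadratic_penalty([1.0, 2.0], [0.0, 2.0], importance)
```

In a training loop, a penalty of this kind would typically be added to the loss of the new task, so that the different methods differ mainly in how `importance` is computed.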
