Understanding Arithmetic Reasoning in Language Models using Causal Mediation Analysis


May 24, 2023

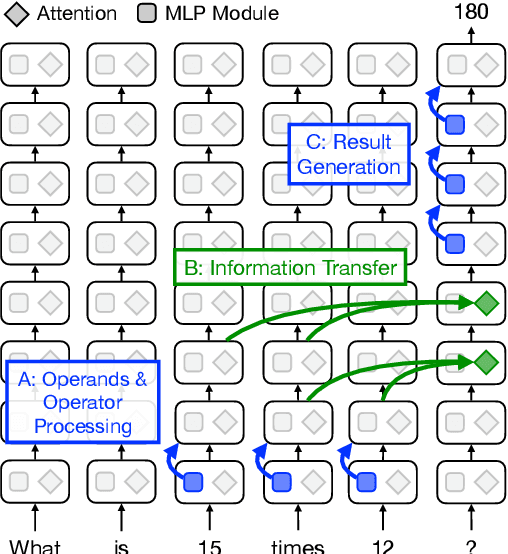

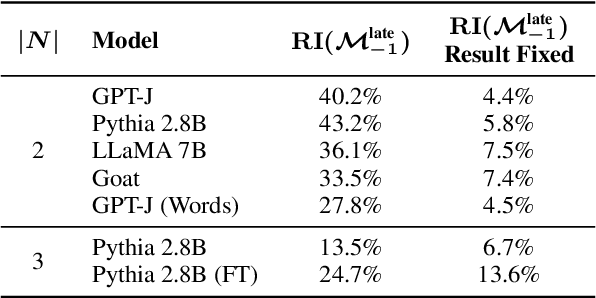

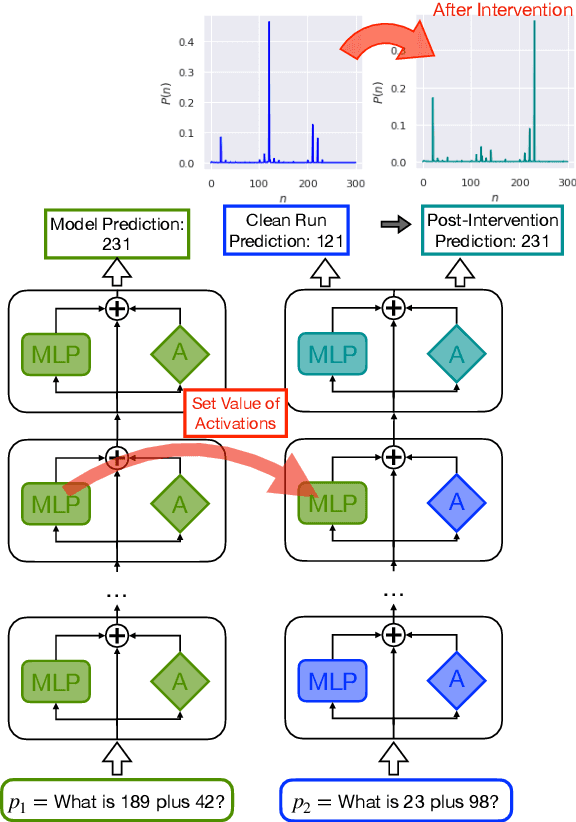

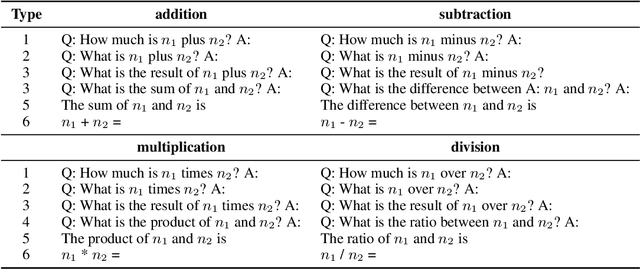

Mathematical reasoning in large language models (LLMs) has garnered attention in recent research, but there is limited understanding of how these models process and store information related to arithmetic tasks. In this paper, we present a mechanistic interpretation of LLMs for arithmetic-based questions using a causal mediation analysis framework. By intervening on the activations of specific model components and measuring the resulting changes in predicted probabilities, we identify the subset of parameters responsible for specific predictions. We analyze two pre-trained language models of different sizes (2.8B and 6B parameters). Experimental results reveal that a small set of mid-late layers significantly affects predictions for arithmetic-based questions, with distinct activation patterns for correct and incorrect predictions. We also investigate the role of the attention mechanism and compare the model's activation patterns for arithmetic queries with those for the prediction of factual knowledge. Our findings provide insights into the mechanistic interpretation of LLMs for arithmetic tasks and highlight the specific components involved in arithmetic reasoning.
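The intervention described in the abstract (replace one component's activation, then measure how the predicted probability changes) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the toy two-layer network, its hand-picked weights `W1` and `W2`, the helper names `forward` and `patching_effects`, and the use of a simple probability difference as the effect measure. It is not the paper's models, code, or exact metric.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

# Hypothetical toy weights: 2 inputs -> 3 hidden units -> 2 output classes.
W1 = [[1.0, -0.5], [0.3, 0.8], [-0.7, 0.2]]
W2 = [[0.9, -0.4, 0.1], [-0.2, 0.7, 0.5]]

def forward(x, patch=None):
    """Run the toy model; `patch` maps hidden-unit index -> forced activation."""
    hidden = [math.tanh(h) for h in matvec(W1, x)]
    if patch:
        for i, value in patch.items():
            hidden[i] = value  # the intervention: overwrite one activation
    return softmax(matvec(W2, hidden)), hidden

def patching_effects(x_clean, x_altered, target):
    """For each hidden unit, copy its activation from the clean run into the
    altered run and record the change in probability of `target`."""
    p_clean, h_clean = forward(x_clean)
    p_altered, _ = forward(x_altered)
    effects = {}
    for i, value in enumerate(h_clean):
        p_patched, _ = forward(x_altered, patch={i: value})
        effects[i] = p_patched[target] - p_altered[target]
    return p_clean[target], p_altered[target], effects

if __name__ == "__main__":
    p_clean, p_altered, effects = patching_effects([1.0, 2.0], [2.0, 1.0], target=0)
    print("clean:", p_clean, "altered:", p_altered)
    for unit, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
        print("hidden unit", unit, "effect", effect)
```

Units whose patched activation shifts the target probability the most are the ones that mediate the prediction in this toy setting. With a real transformer, the same loop would run over layers or attention and MLP blocks rather than individual hidden units.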
