UAV Target Tracking in Urban Environments Using Deep Reinforcement Learning

Jul 21, 2020

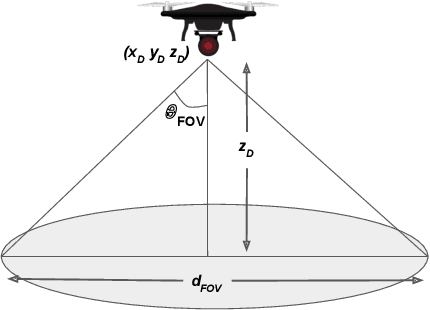

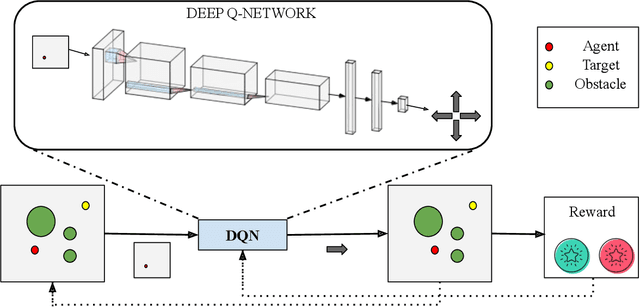

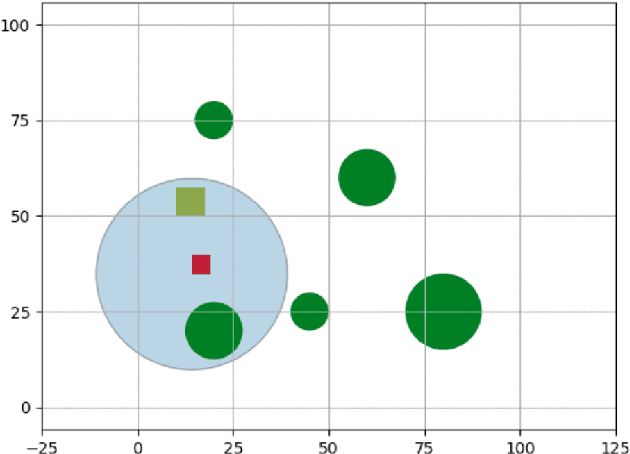

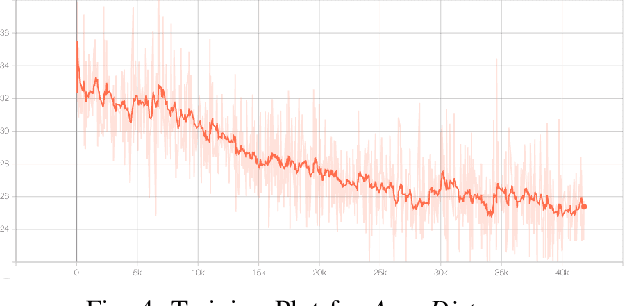

Persistent target tracking in urban environments using a UAV is a difficult task due to the limited field of view, visibility obstruction from obstacles, and uncertain target motion. The vehicle needs to plan intelligently in 3D so that target visibility is maximized. In this paper, we introduce Target Following DQN (TF-DQN), a deep reinforcement learning technique based on Deep Q-Networks with a curriculum training framework that enables the UAV to persistently track the target in the presence of obstacles and target motion uncertainty. The algorithm is evaluated both qualitatively and quantitatively through several simulation experiments. The results show that the UAV tracks the target persistently while avoiding obstacles, both in the environments it was trained on and in unseen environments.
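For readers unfamiliar with Deep Q-Networks, the following is a minimal sketch of the standard DQN bootstrap target that such methods are generally built on. It is not taken from the paper: the function name `dqn_targets`, its parameters, and the discount value are illustrative assumptions, and TF-DQN's actual state representation, reward design, and curriculum schedule are not described in this abstract.

```python
import numpy as np

def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Generic DQN regression target (hypothetical helper, not the paper's code).

    rewards:       shape (batch,), reward received at each transition
    next_q_values: shape (batch, num_actions), target-network Q-values for the next state
    dones:         shape (batch,), True where the episode ended at this transition
    gamma:         discount factor (assumed value)
    """
    rewards = np.asarray(rewards, dtype=np.float64)
    next_q_values = np.asarray(next_q_values, dtype=np.float64)
    dones = np.asarray(dones, dtype=bool)

    # Greedy value of the next state under the target network.
    best_next = next_q_values.max(axis=1)

    # Bootstrap only from non-terminal transitions.
    return rewards + gamma * best_next * (~dones)
```

In a tracking setting, the reward would presumably favor keeping the target visible and penalize collisions, but that mapping is an assumption here rather than a detail stated in the abstract.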
