Tutorial: Modern Theoretical Tools for Understanding and Designing Next-generation Information Retrieval System

Paper and Code

Mar 26, 2022

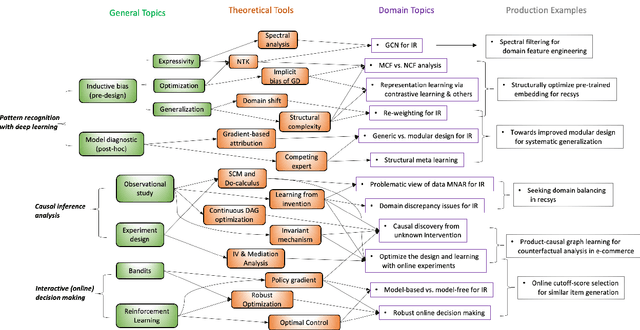

In the relatively short history of machine learning, the subtle balance between engineering and theoretical progress has been proved critical at various stages. The most recent wave of AI has brought to the IR community powerful techniques, particularly for pattern recognition. While many benefits from the burst of ideas as numerous tasks become algorithmically feasible, the balance is tilting toward the application side. The existing theoretical tools in IR can no longer explain, guide, and justify the newly-established methodologies. The consequences can be suffering: in stark contrast to how the IR industry has envisioned modern AI making life easier, many are experiencing increased confusion and costs in data manipulation, model selection, monitoring, censoring, and decision making. This reality is not surprising: without handy theoretical tools, we often lack principled knowledge of the pattern recognition model's expressivity, optimization property, generalization guarantee, and our decision-making process has to rely on over-simplified assumptions and human judgments from time to time. Time is now to bring the community a systematic tutorial on how we successfully adapt those tools and make significant progress in understanding, designing, and eventually productionize impactful IR systems. We emphasize systematicity because IR is a comprehensive discipline that touches upon particular aspects of learning, causal inference analysis, interactive (online) decision-making, etc. It thus requires systematic calibrations to render the actual usefulness of the imported theoretical tools to serve IR problems, as they usually exhibit unique structures and definitions. Therefore, we plan this tutorial to systematically demonstrate our learning and successful experience of using advanced theoretical tools for understanding and designing IR systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge