Triangle-Net: Towards Robustness in Point Cloud Classification

Feb 27, 2020

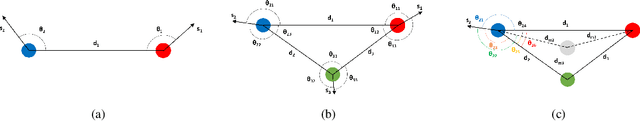

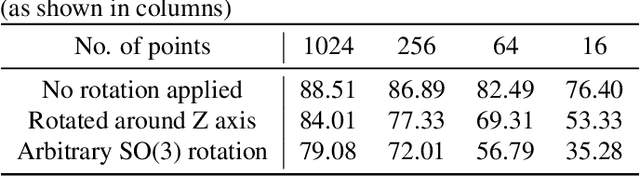

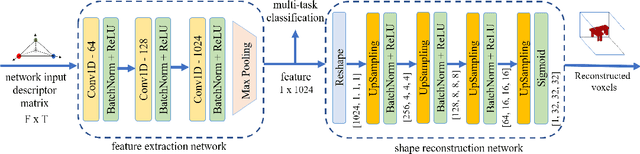

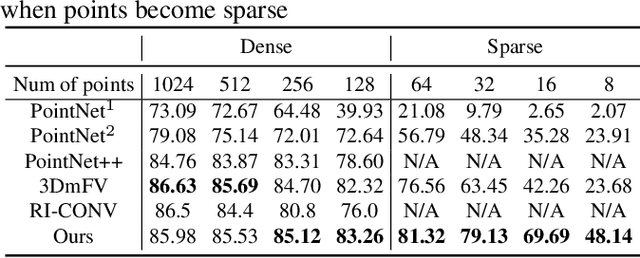

3D object recognition is becoming a key capability for computer vision systems such as autonomous vehicles, service robots, and surveillance drones, which must operate effectively in unstructured environments. These real-time systems require classification methods that are robust to sampling resolution, measurement noise, and object pose. Previous research has shown that point sparsity, rotation, and positional variance can significantly degrade the performance of point-cloud-based classification techniques. We therefore propose a novel approach to 3D classification that takes sparse point clouds as input and learns a model that is robust to rotational and positional variance as well as to point sparsity. To this end, we introduce new feature descriptors that are fed to our proposed neural network to learn a robust latent representation of the 3D object. We show that such latent representations can significantly improve the performance of object classification and retrieval. Further, we show that our approach outperforms PointNet and 3DmFV by 34.4% and 27.4%, respectively, in classification tasks using sparse point clouds of only 16 points under arbitrary SO(3) rotation.
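The abstract does not spell out the feature descriptors, so the sketch below is only an illustration of the invariance property it asks for, not the paper's method. It assumes a hypothetical descriptor made of the sorted side lengths of triangles formed by point triples, and checks that this descriptor is unchanged when a 16-point cloud is rotated by a random SO(3) rotation and translated. The names `random_rotation`, `transform`, and `triangle_descriptor` are made up for this example.

```python
import math
import random


def random_rotation(rng):
    """Return a 3x3 rotation matrix built from a random unit quaternion."""
    # Normalizing four Gaussian samples yields a random unit quaternion.
    w, x, y, z = (rng.gauss(0.0, 1.0) for _ in range(4))
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def transform(points, rot, shift):
    """Apply a rigid motion (rotate, then translate) to every point."""
    return [
        tuple(sum(rot[i][k] * p[k] for k in range(3)) + shift[i] for i in range(3))
        for p in points
    ]


def triangle_descriptor(points, triangles):
    """Hypothetical descriptor: sorted side lengths of each index triple.

    Side lengths depend only on distances between points, so they do not
    change under rotation or translation of the whole cloud.
    """
    feats = []
    for a, b, c in triangles:
        sides = sorted(
            [
                math.dist(points[a], points[b]),
                math.dist(points[b], points[c]),
                math.dist(points[c], points[a]),
            ]
        )
        feats.append(tuple(sides))
    return feats


rng = random.Random(0)
# A sparse cloud of 16 points, matching the sparsity level quoted above.
cloud = [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(16)]
# Eight randomly chosen triangles (index triples without repetition).
triangles = [tuple(rng.sample(range(16), 3)) for _ in range(8)]

moved = transform(cloud, random_rotation(rng), (0.5, -2.0, 3.0))
before = triangle_descriptor(cloud, triangles)
after = triangle_descriptor(moved, triangles)

# Largest change in any feature value; expected to be floating-point noise.
max_diff = max(abs(u - v) for f, g in zip(before, after) for u, v in zip(f, g))
print("max descriptor change:", max_diff)
```

Raw coordinates, by contrast, change under the same transformation, which is one reason a network fed coordinates directly can be sensitive to pose. Distance-based features like these are one simple route to invariance; the descriptors actually used in the paper may differ.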
