Trees-Based Models for Correlated Data


Feb 16, 2021

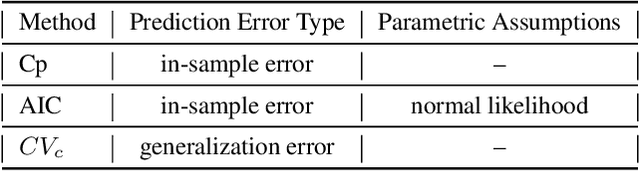

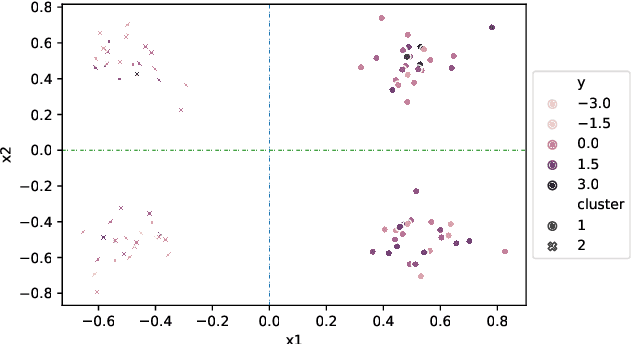

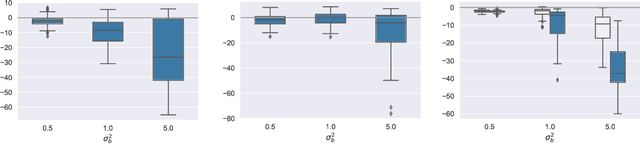

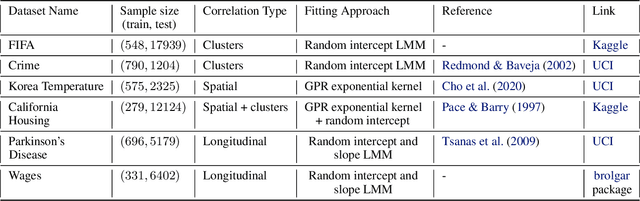

This paper presents a new approach for tree-based regression methods, such as simple regression trees, random forests, and gradient boosting, in settings involving correlated data. We show the problems that arise when implementing standard tree-based regression models, which ignore the correlation structure. Our approach explicitly takes the correlation structure into account in the splitting criterion, the stopping rules, and the fitted values in the leaves, which requires major modifications to the standard methodology. Simulation experiments and real data analyses support the superiority of our approach over tree-based models that do not account for the correlation.
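To make the idea of a correlation-aware fitted value in a leaf more concrete, here is a minimal sketch of one generic technique, a generalized least squares (GLS) estimate of a constant mean. It is an illustration under stated assumptions and not necessarily the estimator the paper uses: the covariance matrix is assumed known, and the function name `gls_leaf_mean`, the toy data, and the value of `rho` are all hypothetical.

```python
import numpy as np

def gls_leaf_mean(y, cov):
    """GLS estimate of a constant mean: (1' V^-1 1)^-1 * 1' V^-1 y.

    Assumes `cov` (V) is a known, positive-definite covariance matrix
    of the observations that fall in the leaf.
    """
    ones = np.ones(len(y))
    w = np.linalg.solve(cov, ones)  # V^-1 1, without forming the inverse
    return float(w @ y / (w @ ones))

# Hypothetical leaf: the first two observations share a cluster and are
# correlated with each other; the third is independent of both.
y = np.array([1.0, 1.2, 3.0])
rho = 0.8  # assumed within-cluster correlation
cov = np.array([[1.0, rho, 0.0],
                [rho, 1.0, 0.0],
                [0.0, 0.0, 1.0]])

plain_mean = y.mean()             # ignores the correlation structure
gls_mean = gls_leaf_mean(y, cov)  # down-weights the correlated pair
```

In this toy leaf the plain average treats the two correlated observations as two independent pieces of evidence, while the GLS estimate gives them less combined weight and moves toward the independent observation. With an identity covariance matrix the two estimates coincide.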
