Treepedia 2.0: Applying Deep Learning for Large-scale Quantification of Urban Tree Cover


Aug 14, 2018

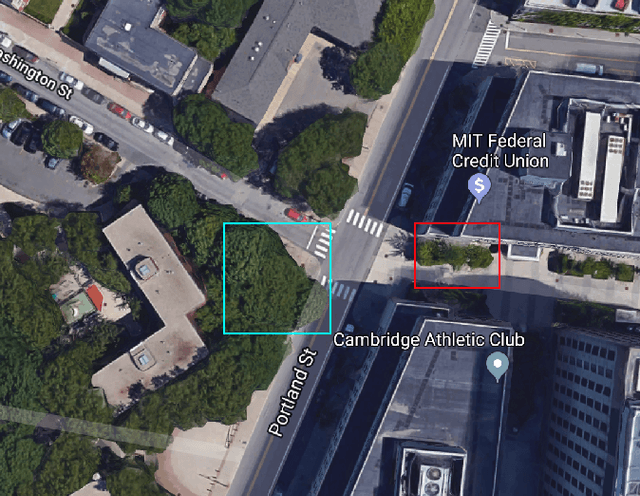

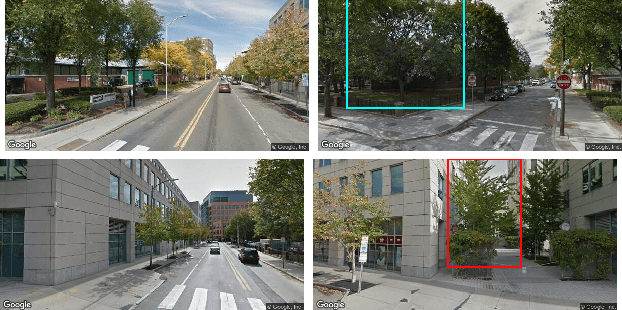

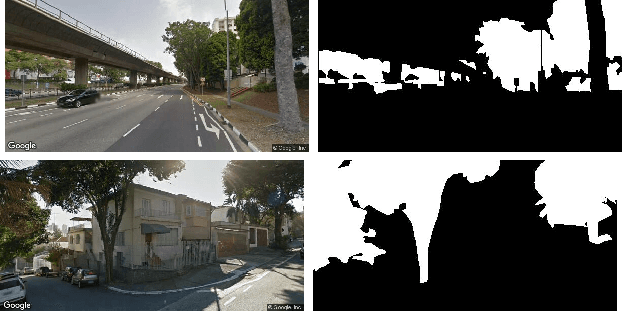

Recent advances in deep learning have made it possible to quantify urban metrics at fine resolution and over large extents using street-level images. Here, we focus on measuring urban tree cover using Google Street View (GSV) images. First, we provide a small-scale labelled validation dataset and propose standard metrics for comparing automated estimates of street tree cover from GSV images. We apply state-of-the-art deep learning models and compare their performance to a previously established benchmark based on an unsupervised method. Our training procedure for deep learning models is novel: we use the abundance of openly available, similarly labelled street-level image datasets to pre-train our model, and then perform additional training on a small dataset of GSV images. We find that deep learning models significantly outperform the unsupervised benchmark method. Relative to the unsupervised method, our semantic segmentation model increased mean intersection-over-union (IoU) from 44.10% to 60.42%, and our end-to-end model decreased mean absolute error (MAE) from 10.04% to 4.67%. We also employ a recently developed method called gradient-weighted class activation mapping (Grad-CAM) to interpret the features learned by the end-to-end model. This technique confirms that the end-to-end model has accurately learned to identify tree cover areas as key features for predicting percentage tree cover. Our paper provides an example of applying advanced deep learning techniques to a large-scale, geo-tagged, image-based dataset to efficiently estimate important urban metrics. The results demonstrate that deep learning models are highly accurate, can be interpretable, and can also be efficient in terms of data-labelling effort and computational resources.
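The two evaluation metrics named in the abstract, mean IoU and mean absolute error, can be illustrated with a minimal sketch. This is not the authors' evaluation code. It assumes binary vegetation masks stored as nested lists, IoU computed for the vegetation class per image and then averaged across images, and tree cover expressed as the percentage of vegetation pixels in an image. All function names here are hypothetical.

```python
from typing import Sequence

# Assumption: a mask is a 2-D grid where 1 marks a vegetation pixel and 0 anything else.
Mask = Sequence[Sequence[int]]


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection-over-union of the vegetation class for one image."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumption: if neither mask contains vegetation, count it as a perfect match.
    return intersection / union if union else 1.0


def mean_iou(preds: Sequence[Mask], truths: Sequence[Mask]) -> float:
    """Average the per-image IoU over a validation set."""
    scores = [iou(p, t) for p, t in zip(preds, truths)]
    return sum(scores) / len(scores)


def cover_percent(mask: Mask) -> float:
    """Percentage of pixels in the image labelled as vegetation."""
    total = sum(len(row) for row in mask)
    vegetation = sum(1 for row in mask for value in row if value)
    return 100.0 * vegetation / total


def mean_absolute_error(pred_pct: Sequence[float], true_pct: Sequence[float]) -> float:
    """Mean absolute difference between predicted and labelled cover, in percentage points."""
    errors = [abs(p - t) for p, t in zip(pred_pct, true_pct)]
    return sum(errors) / len(errors)
```

Under these assumptions, a segmentation model would be scored with `mean_iou` on its predicted masks, while an end-to-end model that outputs a cover percentage directly would be scored with `mean_absolute_error` against `cover_percent` of the labelled masks.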
