Traversing Latent Space using Decision Ferns

Paper and Code

Dec 06, 2018

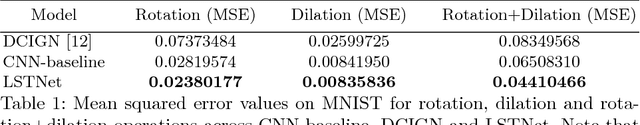

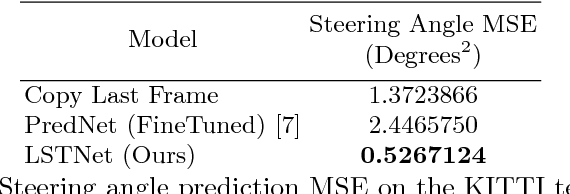

The practice of transforming raw data to a feature space so that inference can be performed in that space has been popular for many years. Recently, rapid progress in deep neural networks has given both researchers and practitioners enhanced methods that increase the richness of feature representations, be it from images, text or speech. In this work we show how a constructed latent space can be explored in a controlled manner and argue that this complements well founded inference methods. For constructing the latent space a Variational Autoencoder is used. We present a novel controller module that allows for smooth traversal in the latent space and construct an end-to-end trainable framework. We explore the applicability of our method for performing spatial transformations as well as kinematics for predicting future latent vectors of a video sequence.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge