Transmission Line Parameter Estimation Under Non-Gaussian Measurement Noise


Aug 28, 2022

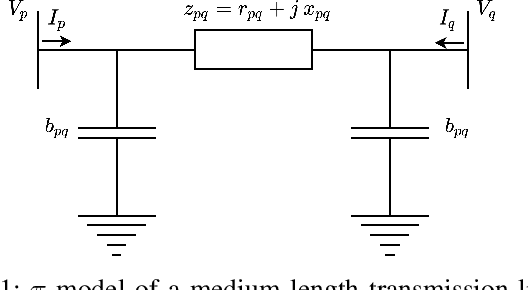

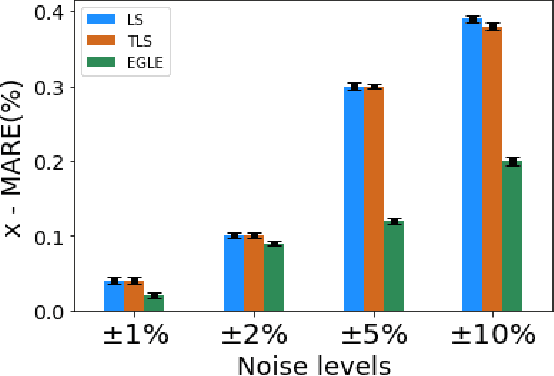

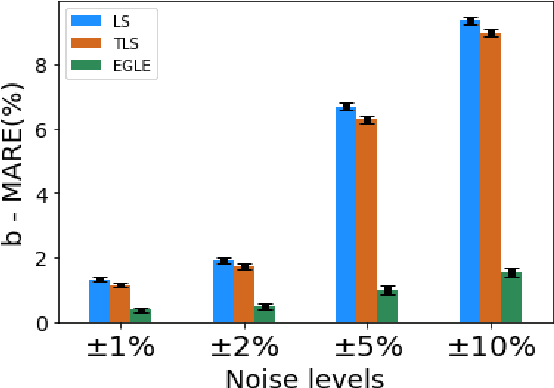

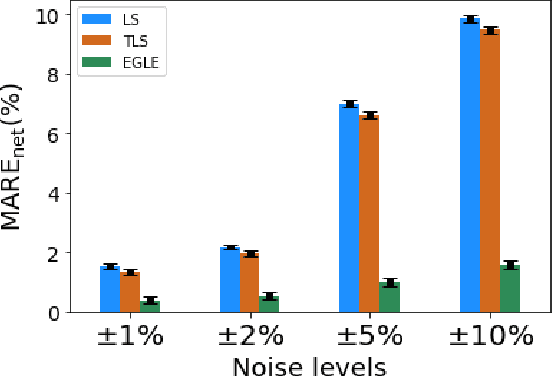

Accurate knowledge of transmission line parameters is essential for a variety of power system monitoring, protection, and control applications. The use of phasor measurement unit (PMU) data for transmission line parameter estimation (TLPE) is well documented. However, existing literature on PMU-based TLPE implicitly assumes the measurement noise to be Gaussian. Recently, it has been shown that the noise in PMU measurements (especially in the current phasors) is better represented by Gaussian mixture models (GMMs), i.e., the noise is non-Gaussian. We present a novel approach for TLPE that can handle non-Gaussian noise in the PMU measurements. The measurement noise is expressed as a GMM, whose components are identified using the expectation-maximization (EM) algorithm. Subsequently, the noise and the line parameters are estimated by solving a maximum likelihood estimation problem iteratively until convergence. Simulations using the IEEE 118-bus system, as well as proprietary PMU data obtained from a U.S. power utility, demonstrate the superior performance of the proposed approach over traditional approaches such as least squares and total least squares, and over the more recently proposed minimum total error entropy approach.
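The EM step mentioned in the abstract can be illustrated with a minimal sketch that fits a one-dimensional, two-component GMM to synthetic noise samples. This is not the paper's implementation: the function names (`normal_pdf`, `fit_gmm_em`), the quantile-based initialization, and the synthetic mixture values are all assumptions chosen for illustration, and real PMU noise would be handled per phasor quantity rather than as one scalar series.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def fit_gmm_em(samples, n_components=2, n_iter=200, tol=1e-8):
    """Fit a 1-D Gaussian mixture with EM (hypothetical helper, not from the paper)."""
    n = len(samples)
    ordered = sorted(samples)
    # Assumed initialization: means at evenly spaced quantiles,
    # a shared overall variance, and equal weights.
    means = [ordered[int((k + 0.5) * n / n_components)] for k in range(n_components)]
    overall_mean = sum(samples) / n
    overall_var = sum((x - overall_mean) ** 2 for x in samples) / n
    variances = [overall_var] * n_components
    weights = [1.0 / n_components] * n_components

    prev_ll = -math.inf
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample.
        resp = []
        ll = 0.0
        for x in samples:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = max(sum(p), 1e-300)  # floor guards against log(0)
            ll += math.log(total)
            resp.append([pk / total for pk in p])

        # M-step: re-estimate weights, means, and variances.
        for k in range(n_components):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, samples)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, samples)) / nk
            variances[k] = max(var_k, 1e-12)  # keep variances strictly positive

        # Stop once the log-likelihood no longer improves noticeably.
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll
    return weights, means, variances


def synthetic_noise(n, seed=0):
    """Draw samples from a made-up two-component mixture (illustrative values only)."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        if rng.random() < 0.7:
            out.append(rng.gauss(-1.0, 0.5))
        else:
            out.append(rng.gauss(2.0, 0.8))
    return out


noise = synthetic_noise(2000)
weights, means, variances = fit_gmm_em(noise)
for w, m, v in sorted(zip(weights, means, variances), key=lambda t: t[1]):
    print(f"weight={w:.3f} mean={m:.3f} std={math.sqrt(v):.3f}")
```

In a pipeline like the one the abstract describes, the fitted weights, means, and variances would then define the likelihood that is maximized over the line parameters; that second stage is not shown here.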
