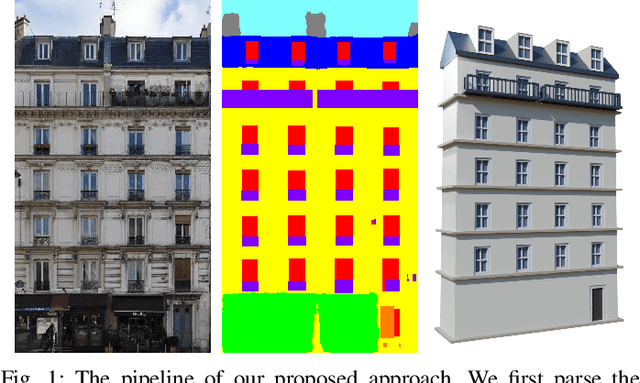

Translational Symmetry-Aware Facade Parsing for 3D Building Reconstruction


Jun 02, 2021

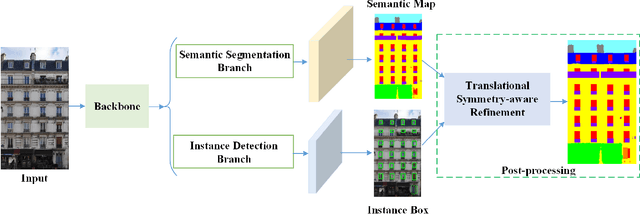

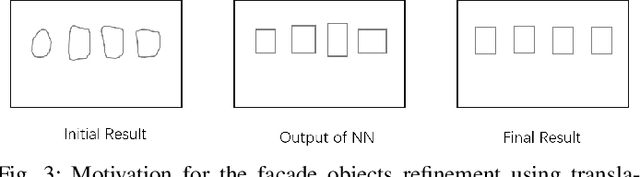

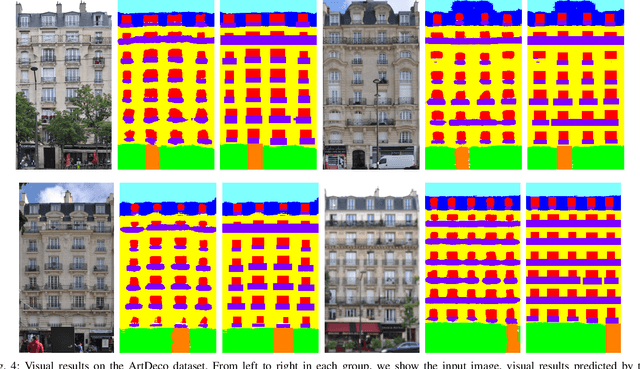

Effective facade parsing is essential to 3D building reconstruction, an important computer vision problem with many applications in high-precision maps for navigation, computer-aided design, and city generation for digital entertainment. The key is to obtain shape grammars from 2D images accurately and efficiently. Although deep learning methods achieve promising results on semantic parsing, they cannot directly make use of architectural rules, which play an important role in man-made structures. In this paper, we present a novel translational symmetry-based approach to improving deep neural networks. Our method employs a deep learning model as the base parser, and a module that exploits translational symmetry refines the initial parsing results. In contrast to conventional semantic segmentation or bounding box prediction, we propose a novel scheme that fuses segmentation with anchor-free detection in a single-stage network, which enables efficient training and better convergence. After parsing the facades into shape grammars, we employ an off-the-shelf rendering engine such as Blender to reconstruct realistic, high-quality 3D models using procedural modeling. We conduct experiments on three public datasets, where our proposed approach outperforms state-of-the-art methods. In addition, we illustrate 3D building models built from 2D facade images.
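To make the refinement idea concrete, the following is a minimal sketch of how translational symmetry might be used to clean up initial detections. It is not the paper's module: the abstract does not specify the algorithm, so the approach here (snapping box centers to shared rows and columns and averaging sizes) is an illustrative assumption. The names `cluster_1d` and `refine_boxes`, the `(cx, cy, w, h)` box format, and the `tol` parameter are all hypothetical.

```python
from statistics import mean


def cluster_1d(values, tol):
    """Snap each value to the mean of its cluster.

    Values are sorted and a new cluster starts whenever the gap to the
    previous value exceeds `tol`. Returns the snapped values in the
    original input order.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    snapped = [0.0] * len(values)
    group = []

    def flush(indices):
        center = mean(values[j] for j in indices)
        for j in indices:
            snapped[j] = center

    for i in order:
        if group and values[i] - values[group[-1]] > tol:
            flush(group)
            group = []
        group.append(i)
    if group:
        flush(group)
    return snapped


def refine_boxes(boxes, tol=5.0):
    """Align boxes of ONE element class (e.g. windows) to a shared grid.

    Assumption (not from the paper): repeated facade elements share
    column x-centers, row y-centers, and a common size, so noisy
    detections can be regularized by averaging within each row/column.
    """
    if not boxes:
        return []
    xs = cluster_1d([b[0] for b in boxes], tol)
    ys = cluster_1d([b[1] for b in boxes], tol)
    w = mean(b[2] for b in boxes)
    h = mean(b[3] for b in boxes)
    return [(x, y, w, h) for x, y in zip(xs, ys)]


if __name__ == "__main__":
    # Four slightly misaligned detections forming a 2 x 2 grid.
    noisy = [(10, 10, 4, 6), (12, 50, 4, 6), (50, 11, 4, 6), (51, 49, 4, 6)]
    for box in refine_boxes(noisy, tol=5.0):
        print(box)
```

In this sketch the tolerance controls how far apart two centers may be while still counting as the same row or column. A real system would likely need per-class handling and a way to fill in missed detections, which this example does not attempt.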
