Transfer-Learning-Aware Neuro-Evolution for Diseases Detection in Chest X-Ray Images


Apr 15, 2020

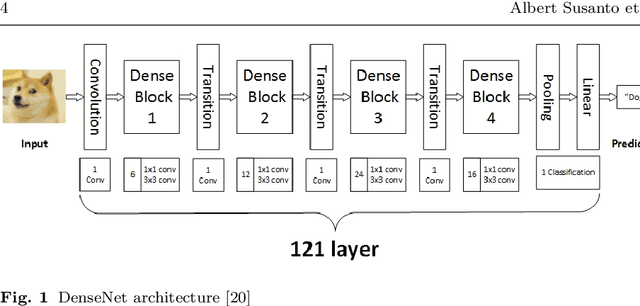

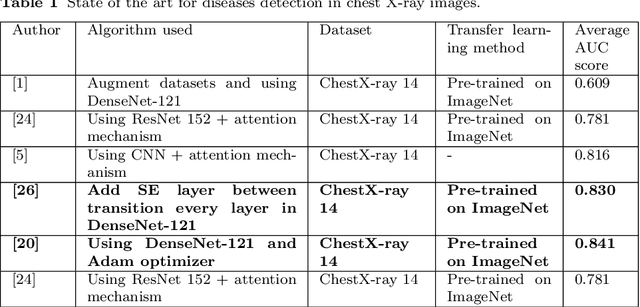

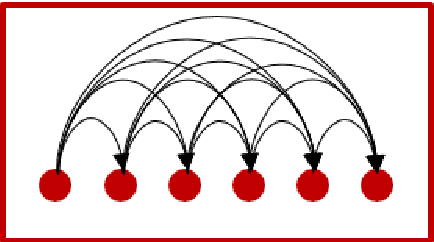

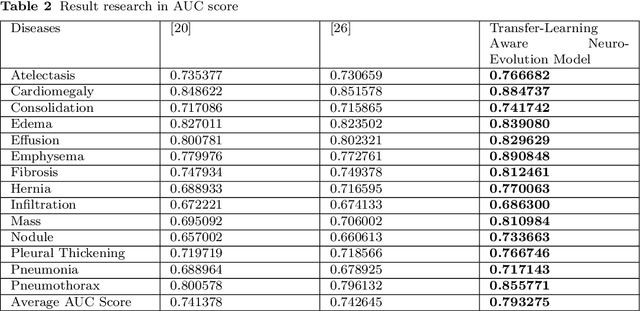

Training a neural network on images is costly in time because of the complexity of the architecture. Transfer learning and fine-tuning can improve time and cost efficiency when training a neural network. However, transfer learning and fine-tuning require a lot of experimentation, so a method for finding the best architecture for transfer learning and fine-tuning is needed. To address this problem, neuro-evolution using a genetic algorithm can be used to search for the best architecture for transfer learning. To evaluate the approach, this study uses the ChestX-Ray 14 dataset with DenseNet-121 as the base neural network model. The evaluation relies on the AUC score, the difference in training execution time, and McNemar's test for statistical significance. In terms of results, this study obtained a 5% difference in the AUC score, a 3% faster execution time, and statistically significant differences for most of the diseases detected. Finally, this study gives a concrete summary of how neuro-evolution can help with transfer learning and fine-tuning.
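As an illustration of the significance test named in the abstract, the following is a minimal sketch of an exact (binomial) McNemar's test for comparing two classifiers on the same test set. The abstract does not say which variant of the test the study used, so the exact variant, the function name `mcnemar_exact`, and the toy labels are assumptions made only for this example.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact two-sided McNemar's test on paired binary predictions.

    Hypothetical helper for illustration; not taken from the paper.
    Returns (b, c, p_value), where
      b = cases model A classifies correctly and model B does not,
      c = cases model B classifies correctly and model A does not.
    """
    b = c = 0
    for truth, a, other in zip(y_true, pred_a, pred_b):
        a_ok = a == truth
        b_ok = other == truth
        if a_ok and not b_ok:
            b += 1
        elif b_ok and not a_ok:
            c += 1

    n = b + c  # only discordant pairs carry information
    if n == 0:
        return b, c, 1.0

    # Under the null hypothesis each discordant pair is a fair coin flip,
    # so the smaller count follows Binomial(n, 0.5).
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


# Toy data (made up): model A is right on all 10 cases, model B on 2.
y_true = [1] * 10
pred_a = [1] * 10
pred_b = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
b, c, p = mcnemar_exact(y_true, pred_a, pred_b)
print(f"b={b}, c={c}, p={p:.4f}")
```

For a multi-label task such as ChestX-Ray 14, a test like this would presumably be run once per disease on thresholded predictions, which is consistent with the abstract reporting significance for most, but not all, diseases.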
