Transfer from Multiple Linear Predictive State Representations (PSR)

Paper and Code

Feb 07, 2017

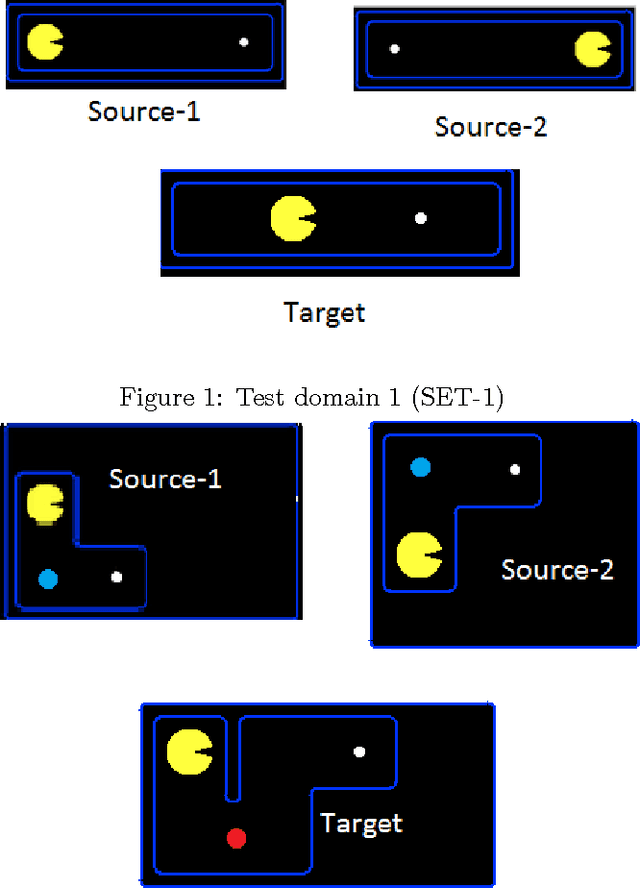

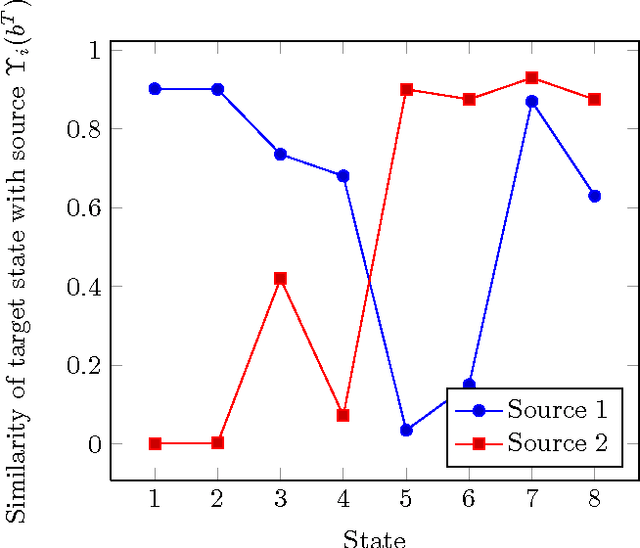

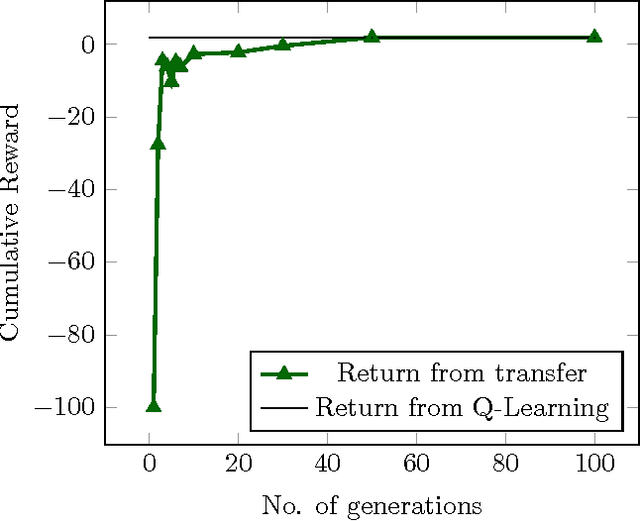

In this paper, we tackle the problem of transferring a policy from multiple partially observable source environments to a partially observable target environment modeled as a predictive state representation (PSR). To our knowledge, this setting has not been addressed in previous work; existing work on transfer covers only fully observable domains. We develop algorithms that achieve policy transfer when models of both the source and target tasks are available, and we discuss their performance and shortcomings in detail. These algorithms could serve as a starting point for transfer learning under partial observability.

* 8 pages, 3 algorithms, 3 figures
