Training Dynamical Binary Neural Networks with Equilibrium Propagation

Paper and Code

Mar 16, 2021

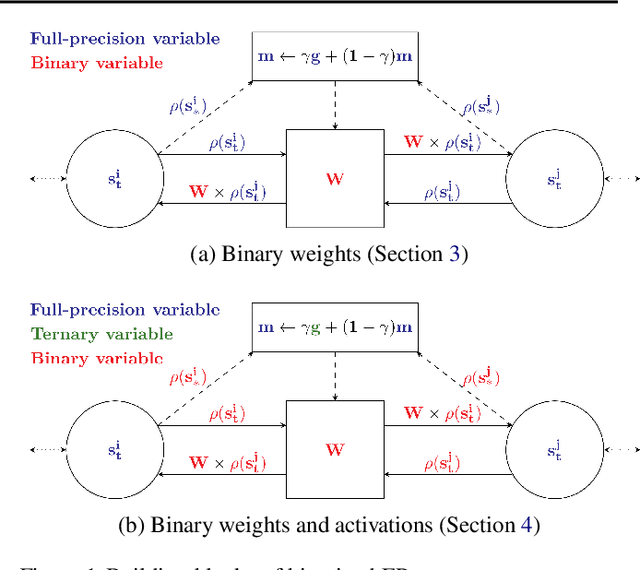

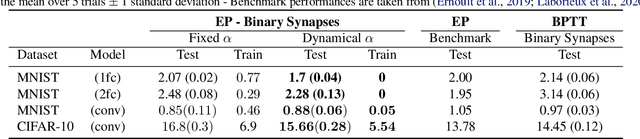

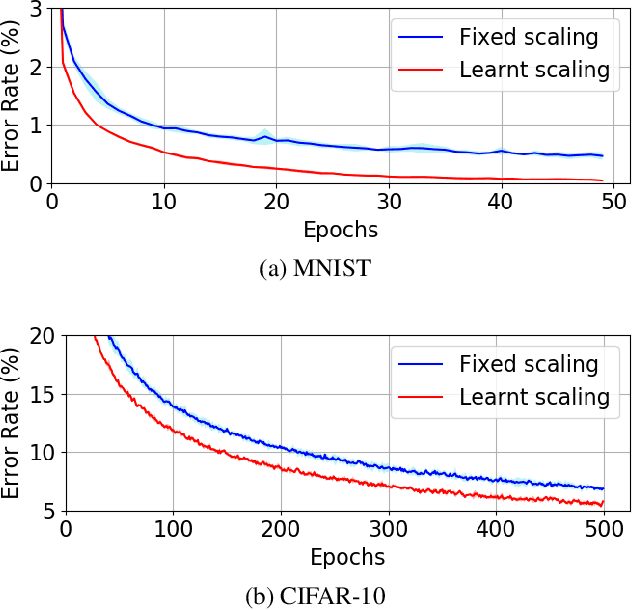

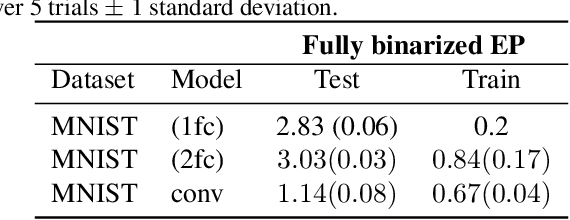

Equilibrium Propagation (EP) is an algorithm intrinsically suited to training physical networks, in particular because its weight updates are local and are given by the internal dynamics of the system. However, building such hardware requires making the algorithm compatible with existing neuromorphic CMOS technology, which generally relies on digital communication between neurons and offers a limited amount of local memory. In this work, we demonstrate that EP can train dynamical networks with binary activations and weights. We first train systems with binary weights and full-precision activations, achieving an accuracy equivalent to that of full-precision models trained by standard EP on MNIST, and losing only 1.9% accuracy on CIFAR-10 with the same architecture. We then extend our method to the training of models with binary activations and weights on MNIST, achieving an accuracy within 1% of the full-precision reference for fully connected architectures and reaching the full-precision reference accuracy for the convolutional architecture. Our extension of EP training to binary networks is consistent with the requirements of today's dynamic, brain-inspired hardware platforms and paves the way for very low-power end-to-end learning.
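To make the two ingredients named in the abstract concrete, here is a minimal NumPy sketch of a local EP weight update combined with weight binarization. It is an illustration under stated assumptions, not the paper's implementation: it assumes a Hopfield-like energy (so the EP update is the difference of pre/post activity products between the nudged and free phases, divided by the nudging strength `beta`), real-valued latent weights that accumulate updates, and a per-layer scaling factor equal to the mean absolute latent weight. The function names `binarize` and `ep_local_update`, the learning rate, and the random stand-in states are all hypothetical.

```python
import numpy as np

def binarize(latent_w):
    """Map real-valued latent weights to the two values {-alpha, +alpha}."""
    alpha = np.mean(np.abs(latent_w))           # assumed per-layer scaling factor
    signs = np.where(latent_w >= 0, 1.0, -1.0)  # sign function, with 0 mapped to +1
    return alpha * signs

def ep_local_update(pre_free, post_free, pre_nudged, post_nudged, beta):
    """EP update estimate for a weight matrix of shape (n_post, n_pre).

    Uses only the activities of the two neurons each weight connects,
    measured at the free and nudged equilibria (Hopfield-like energy assumed).
    """
    nudged = np.outer(post_nudged, pre_nudged)
    free = np.outer(post_free, pre_free)
    return (nudged - free) / beta

# Stand-in data: random states instead of states from a real relaxation.
rng = np.random.default_rng(0)
latent_w = rng.normal(size=(3, 4))
w_bin = binarize(latent_w)  # the weights the dynamics would actually use

pre_free, post_free = rng.random(4), rng.random(3)
pre_nudged = pre_free + 0.01 * rng.normal(size=4)
post_nudged = post_free + 0.01 * rng.normal(size=3)

delta = ep_local_update(pre_free, post_free, pre_nudged, post_nudged, beta=0.5)
latent_w += 0.1 * delta       # hypothetical learning rate of 0.1
w_bin = binarize(latent_w)    # re-binarize after the latent update
```

In this sketch only the latent weights change gradually; the binary weights flip sign when a latent weight crosses zero. Whether the paper stores latent weights in this form is not stated in the abstract, so that part is an assumption.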
