Traceability of Deep Neural Networks

Paper and Code

Dec 17, 2018

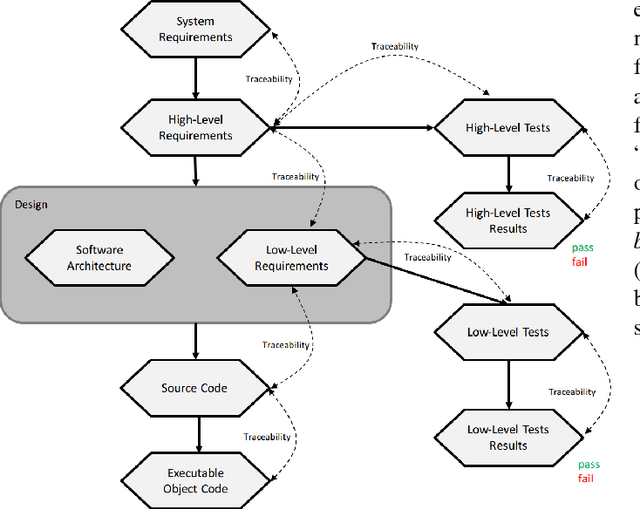

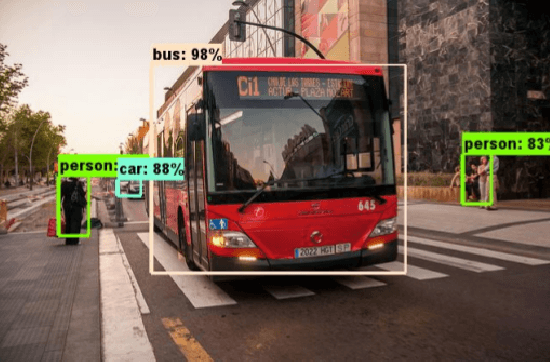

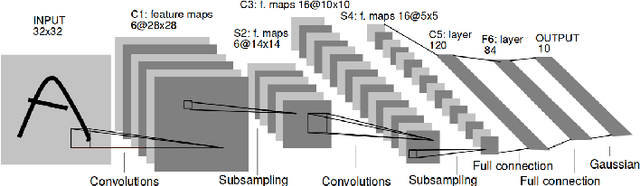

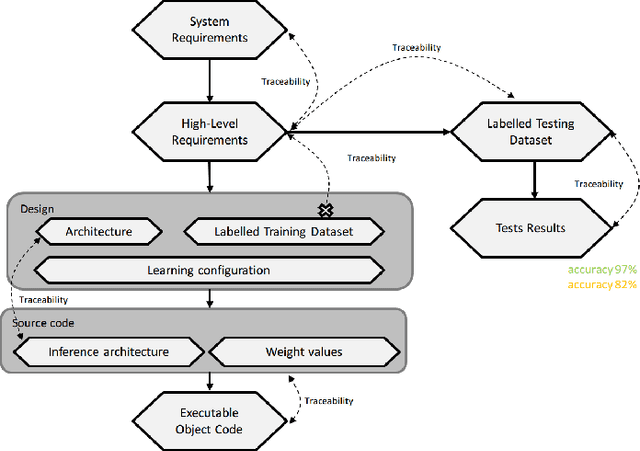

[Context.] The success of deep learning makes its usage more and more tempting in safety-critical applications. However such applications have historical standards (e.g., DO178, ISO26262) which typically do not envision the usage of machine learning. We focus in particular on \emph{requirements traceability} of software artifacts, i.e., code modules, functions, or statements (depending on the desired granularity). [Problem.] Both code and requirements are a problem when dealing with deep neural networks: code constituting the network is not comparable to classical code; furthermore, requirements for applications where neural networks are required are typically very hard to specify: even though high-level requirements can be defined, it is very hard to make such requirements concrete enough, that one can qualify them of low-level requirements. An additional problem is that deep learning is in practice very much based on trial-and-error, which makes the final result hard to explain without the previous iterations. [Proposed solution.] We investigate which artifacts could play a similar role to code or low-level requirements in neural network development and propose various traces which one could possibly consider as a replacement for classical notions. We also propose a form of traceability (and new artifacts) in order to deal with the particular trial-and-error development process for deep learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge