Towards optimal nonlinearities for sparse recovery using higher-order statistics

Sep 05, 2016

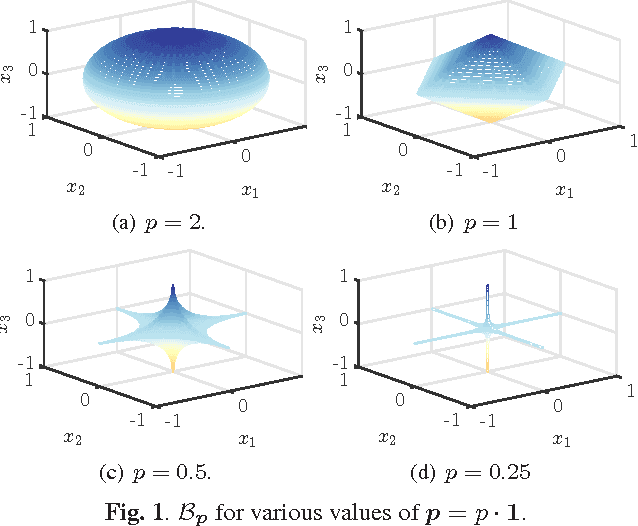

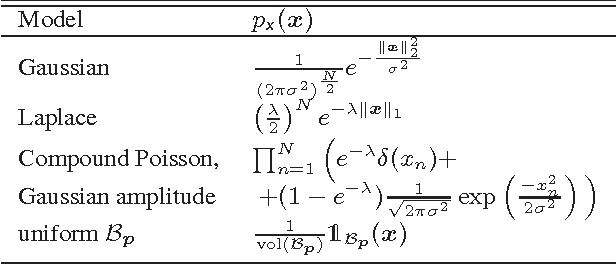

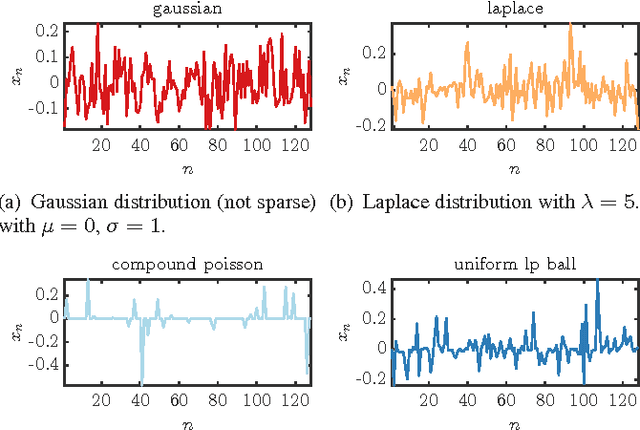

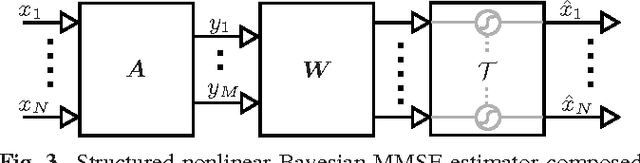

We consider machine learning techniques for developing low-latency approximate solutions to a class of inverse problems. More precisely, we take a probabilistic approach to the problem of recovering sparse stochastic signals that are members of $\ell_p$-balls. In this context, we analyze the Bayesian mean-square error (MSE) for two types of estimators: (i) a linear estimator and (ii) a structured estimator composed of a linear operator followed by a Cartesian product of univariate nonlinear mappings. By construction, the complexity of the proposed nonlinear estimator is comparable to that of its linear counterpart, since the nonlinear mapping can be implemented efficiently in hardware by means of look-up tables (LUTs). The proposed structure lends itself to neural networks and to iterative shrinkage/thresholding-type algorithms restricted to a single iterate (e.g., due to imposed hardware or latency constraints). By resorting to an alternating minimization technique, we obtain a sequence of optimized linear operators and nonlinear mappings that converges in the MSE objective. The result is attractive for real-time applications where general iterative and convex optimization methods are infeasible.
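The two estimator types in the abstract can be illustrated with a small NumPy sketch. It is not the paper's method: the Bernoulli-Gaussian signal model, the dimensions, the noise level, and the uniform 64-bin table are all assumptions chosen for illustration, and only one half-step of an alternating scheme is shown (fitting the nonlinearity with the linear operator held fixed). The look-up table is fitted as a binned conditional mean, which is the MSE-optimal choice among piecewise-constant maps on the training data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy setup (not from the paper): dimensions, sparsity, and noise level.
n, m, N = 32, 24, 20000            # signal size, measurements, training samples
p_nonzero, noise_std = 0.1, 0.05

# Assumed prior: Bernoulli-Gaussian sparse signals, used here as a stand-in.
X = rng.standard_normal((N, n)) * (rng.random((N, n)) < p_nonzero)
A = rng.standard_normal((m, n)) / np.sqrt(m)
Y = X @ A.T + noise_std * rng.standard_normal((N, m))

# (i) Linear estimator: empirical LMMSE operator W = C_xy C_yy^{-1}.
C_xy = X.T @ Y / N
C_yy = Y.T @ Y / N
W = np.linalg.solve(C_yy, C_xy.T).T
Z = Y @ W.T                         # linear estimates, shape (N, n)
mse_linear = np.mean((X - Z) ** 2)

# (ii) Structured estimator: the same linear operator followed by one
# univariate nonlinearity applied to every coordinate, stored as a LUT.
num_bins = 64
edges = np.linspace(Z.min(), Z.max(), num_bins + 1)

def bin_index(z):
    # Map each value to a bin index in 0..num_bins-1 using the interior edges.
    return np.clip(np.digitize(z, edges[1:-1]), 0, num_bins - 1)

idx = bin_index(Z).ravel()
counts = np.bincount(idx, minlength=num_bins)
sums = np.bincount(idx, weights=X.ravel(), minlength=num_bins)
centers = 0.5 * (edges[:-1] + edges[1:])

# Each table entry is the mean of the true signal values whose linear
# estimate fell in that bin; empty bins fall back to the bin center.
lut = np.where(counts > 0, sums / np.maximum(counts, 1), centers)

X_hat = lut[bin_index(Z)]           # applying the nonlinearity is one table lookup
mse_lut = np.mean((X - X_hat) ** 2)

print(f"linear MSE: {mse_linear:.5f}")
print(f"linear + LUT MSE: {mse_lut:.5f}")
```

At inference time the structured estimator costs one matrix-vector product plus one table lookup per coordinate, which is the sense in which its complexity is comparable to the linear estimator. A full alternating scheme would additionally re-optimize the linear operator with the table held fixed and repeat; that step is omitted here.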
