Towards naturalistic human neuroscience and neuroengineering: behavior mining in long-term video and neural recordings


Jan 23, 2020

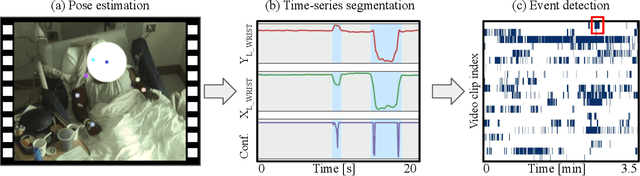

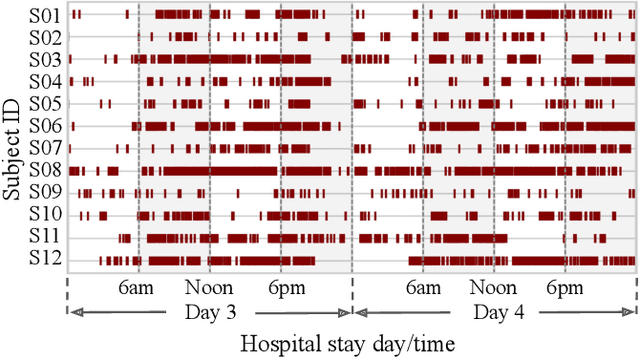

Recent advances in brain recording technology and artificial intelligence are propelling a new paradigm in neuroscience beyond the traditional controlled experiment. Naturalistic neuroscience studies neural computations associated with spontaneous behaviors performed in unconstrained settings. Analyzing such unstructured data lacking a priori experimental design remains a significant challenge, especially when the data is multi-modal and long-term. Here we describe an automated approach for analyzing large ($\approx$250 GB/subject) datasets of simultaneously recorded human electrocorticography (ECoG) and naturalistic behavior video data for 12 subjects. Our pipeline discovers and annotates thousands of instances of human upper-limb movement events in long-term (7–9 day) naturalistic behavior data using a combination of computer vision, discrete latent-variable modeling, and string pattern-matching. Analysis of the simultaneously recorded brain data uncovers neural signatures of movement that corroborate prior findings from traditional controlled experiments. We also prototype a decoder for a movement initiation detection task to demonstrate the efficacy of our pipeline as a source of training data for brain-computer interfacing applications. We plan to publish our curated dataset, which captures naturalistic neural and behavioral variability at a scale not previously available. We believe this data will enable further research on models of neural function and decoding that incorporate such naturalistic variability and perform more robustly in real-world settings.
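As a rough illustration of the string pattern-matching step mentioned in the abstract, the sketch below searches a per-frame sequence of discrete state labels for rest-to-movement transitions. The abstract does not specify the state alphabet, the patterns, or any thresholds, so the labels `R` (rest) and `M` (movement), the function name `find_movement_onsets`, and the parameters `min_rest` and `min_move` are all hypothetical choices made for this example, not the authors' method.

```python
import re


def find_movement_onsets(states: str, min_rest: int = 3, min_move: int = 2) -> list[int]:
    """Return frame indices at which a rest run gives way to a movement run.

    `states` holds one character per video frame: 'R' for rest, 'M' for
    movement. Both labels are assumptions made for this sketch.
    """
    # At least `min_rest` rest frames, immediately followed (lookahead, not
    # consumed) by at least `min_move` movement frames.
    pattern = re.compile(rf"R{{{min_rest},}}(?=M{{{min_move},}})")
    # The end of each match is the index of the first movement frame.
    return [m.end() for m in pattern.finditer(states)]


if __name__ == "__main__":
    # Hypothetical label sequence: the single 'M' at index 9 is too short
    # a movement run and follows too short a rest run, so it is ignored.
    print(find_movement_onsets("RRRRMMMRRMRRRRRMM"))
```

Requiring minimum run lengths on both sides of the transition is one simple way to suppress spurious single-frame state flips. Event indices found this way could then be used to align windows of the simultaneously recorded neural data.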
