Towards Listening to 10 People Simultaneously: An Efficient Permutation Invariant Training of Audio Source Separation Using Sinkhorn's Algorithm


Oct 22, 2020

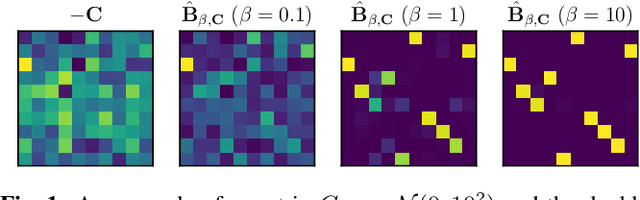

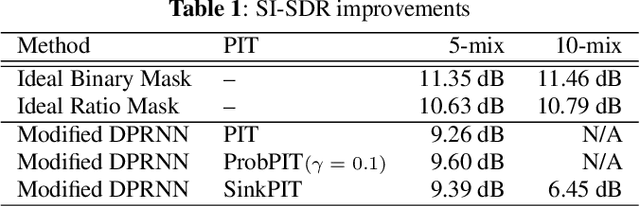

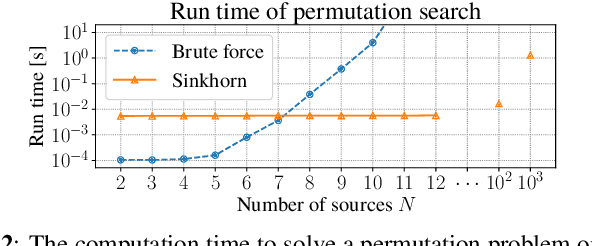

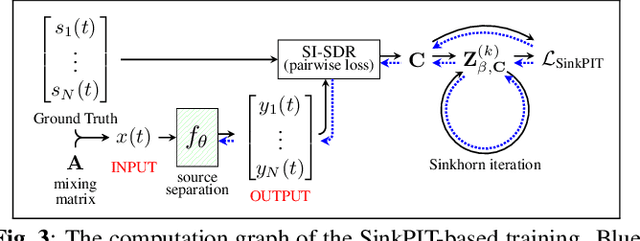

In neural network-based monaural speech separation, it has recently become common to compute the training loss using the permutation invariant training (PIT) loss. However, ordinary PIT requires trying all $N!$ permutations between the $N$ ground truths and the $N$ estimates. Because this factorial complexity grows very rapidly with $N$, PIT-based training is practical only when the number of source signals is small, such as $N = 2$ or $3$. To overcome this limitation, this paper proposes SinkPIT, a novel variant of the PIT loss that is much more efficient than the ordinary PIT loss when $N$ is large. SinkPIT is based on Sinkhorn's matrix balancing algorithm, which efficiently finds a doubly stochastic matrix that approximates the best permutation in a differentiable manner. The author trained a neural network model to decompose a single-channel mixture into 10 sources using SinkPIT and obtained promising results.
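The contrast between exhaustive PIT and a Sinkhorn-based relaxation can be illustrated with a small NumPy sketch. It is not the paper's implementation: the pairwise mean-squared-error cost, the sharpness parameter `beta`, the iteration count, and the function names `sinkhorn` and `brute_force_pit` are all assumptions made for illustration. The sketch builds an $N \times N$ cost matrix between estimates and ground truths, balances $\exp(-\beta C)$ into an approximately doubly stochastic matrix by alternating row and column normalization, and compares the resulting soft loss with the loss found by searching all $N!$ permutations.

```python
import itertools
import numpy as np


def logsumexp(a, axis):
    # Numerically stable log(sum(exp(a))) along one axis, keeping dimensions.
    m = np.max(a, axis=axis, keepdims=True)
    return m + np.log(np.sum(np.exp(a - m), axis=axis, keepdims=True))


def sinkhorn(cost, beta=10.0, n_iters=50):
    # Balance exp(-beta * cost) by alternating row and column normalization
    # in the log domain. beta and n_iters are illustrative choices.
    log_p = -beta * cost
    for _ in range(n_iters):
        log_p = log_p - logsumexp(log_p, axis=1)  # each row sums to 1
        log_p = log_p - logsumexp(log_p, axis=0)  # each column sums to 1
    return np.exp(log_p)


def brute_force_pit(cost):
    # Ordinary PIT: evaluate all N! assignments and keep the cheapest one.
    n = cost.shape[0]
    best = min(
        itertools.permutations(range(n)),
        key=lambda p: sum(cost[j, p[j]] for j in range(n)),
    )
    return best, float(sum(cost[j, best[j]] for j in range(n)))


rng = np.random.default_rng(0)
n, t = 4, 100  # toy sizes; N = 4 keeps the brute-force search cheap

sources = rng.standard_normal((n, t))
true_perm = rng.permutation(n)
# Estimate j is a noisy copy of source true_perm[j].
estimates = sources[true_perm] + 0.1 * rng.standard_normal((n, t))

# cost[j, i] = mean squared error between estimate j and source i.
cost = np.mean((estimates[:, None, :] - sources[None, :, :]) ** 2, axis=-1)

soft = sinkhorn(cost)
soft_loss = float(np.sum(soft * cost))   # relaxed, differentiable objective
hard_perm, hard_loss = brute_force_pit(cost)

print("soft assignment (argmax per row):", soft.argmax(axis=1))
print("brute-force permutation:        ", hard_perm)
print("soft loss:", soft_loss, "hard loss:", hard_loss)
```

Each Sinkhorn iteration costs $O(N^2)$ operations, whereas the exhaustive search evaluates $N!$ permutations, which is the efficiency gap the abstract refers to. In an actual training setup the same normalization steps would be written in an automatic-differentiation framework so that gradients flow through the soft assignment.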
