Towards Energy-Aware Federated Learning on Battery-Powered Clients

Paper and Code

Aug 25, 2022

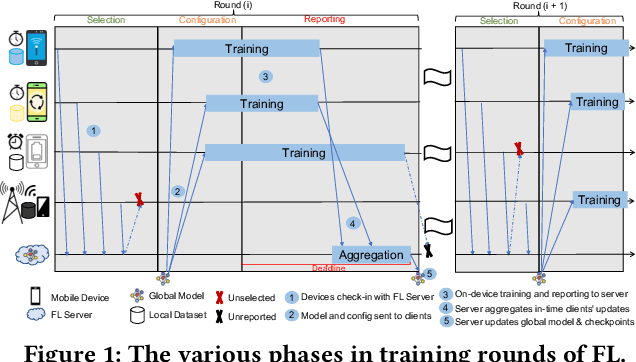

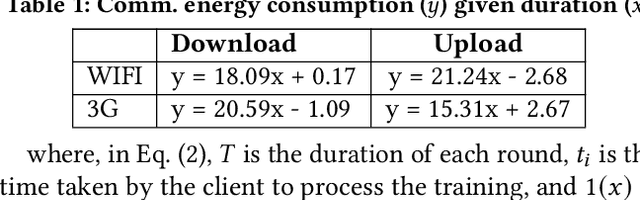

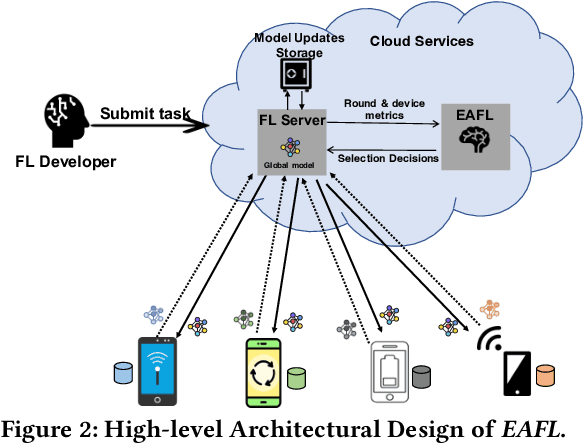

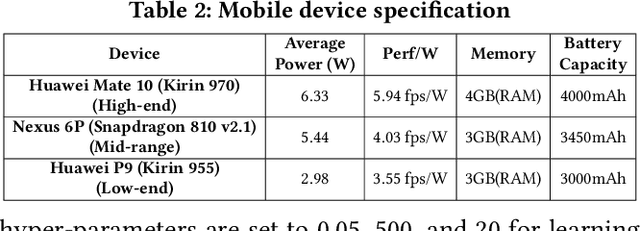

Federated learning (FL) is a recently emerged branch of AI that enables edge devices to collaboratively train a global machine learning model without centralizing data and with privacy by default. Despite remarkable advances, this paradigm comes with various challenges. In large-scale deployments, client heterogeneity is the norm, and it affects training quality in terms of accuracy, fairness, and time. Moreover, energy consumption across these battery-constrained devices is largely unexplored and limits the wide adoption of FL. To address this issue, we develop EAFL, an energy-aware FL selection method that considers energy consumption to maximize the participation of heterogeneous target devices. EAFL is a power-aware training algorithm that cherry-picks clients with higher battery levels in conjunction with their ability to maximize system efficiency. Our design jointly minimizes the time-to-accuracy and maximizes the remaining on-device battery levels. EAFL improves the testing model accuracy by up to 85\% and decreases the drop-out of clients by up to 2.45$\times$.
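
To make the idea of energy-aware selection concrete, the following is a minimal sketch of how a server might rank clients by blending an efficiency score with the remaining battery level. It is not EAFL's actual algorithm, which the abstract does not specify. The `Client` fields, the `select_clients` function, the weight `alpha`, and the linear scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Client:
    client_id: str
    utility: float     # hypothetical system-efficiency score in [0, 1]
    battery: float     # remaining battery as a fraction in [0, 1]
    round_cost: float  # assumed battery fraction one training round consumes


def select_clients(clients, k, alpha=0.5):
    """Return up to k clients ranked by a blended utility/battery score.

    alpha is a hypothetical trade-off weight: 1.0 ranks by utility only,
    0.0 ranks by the battery expected to remain after the round.
    """
    # Exclude clients that would run out of battery during the round,
    # since they would drop out before reporting an update.
    eligible = [c for c in clients if c.battery > c.round_cost]

    def score(c):
        remaining = c.battery - c.round_cost
        return alpha * c.utility + (1 - alpha) * remaining

    return sorted(eligible, key=score, reverse=True)[:k]


if __name__ == "__main__":
    pool = [
        Client("a", utility=0.9, battery=0.15, round_cost=0.2),
        Client("b", utility=0.6, battery=0.90, round_cost=0.1),
        Client("c", utility=0.8, battery=0.30, round_cost=0.1),
        Client("d", utility=0.4, battery=0.50, round_cost=0.1),
    ]
    print([c.client_id for c in select_clients(pool, k=2)])
```

In this toy pool, client `a` has the highest utility but is filtered out because one round would exhaust its battery, which is the kind of drop-out an energy-aware selector tries to avoid. Raising `alpha` shifts the ranking toward efficiency at the cost of draining low-battery devices faster.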
