Towards Action Model Learning for Player Modeling

Paper and Code

Mar 09, 2021

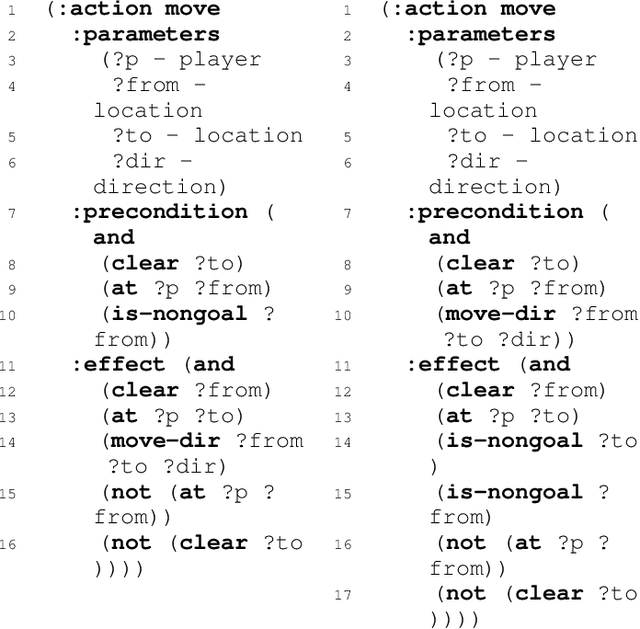

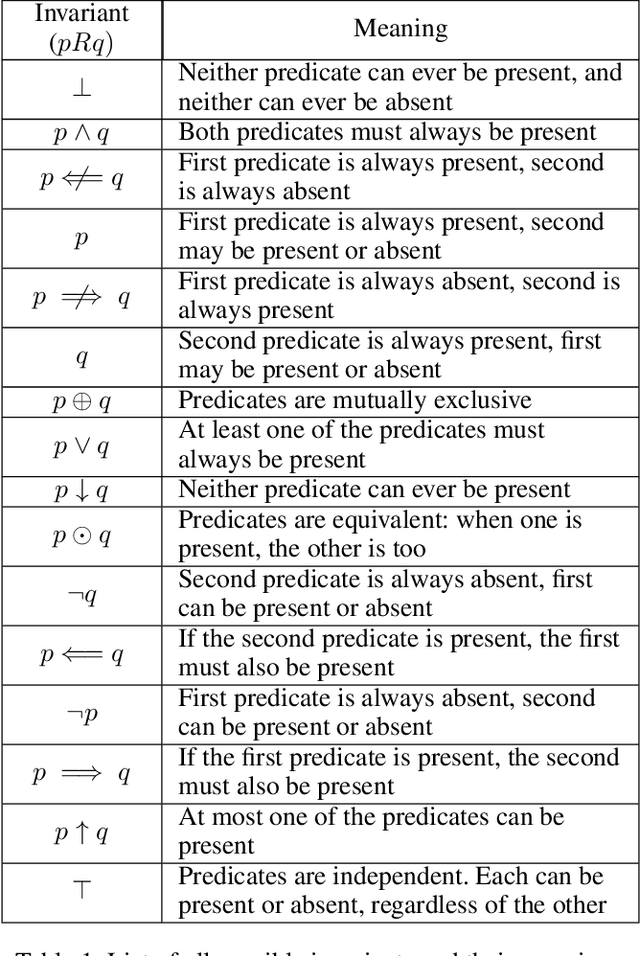

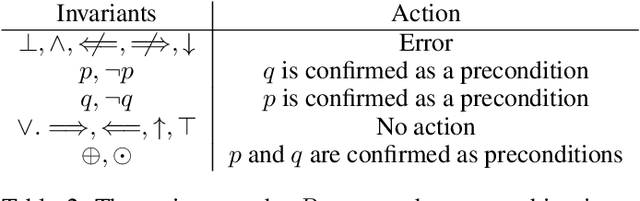

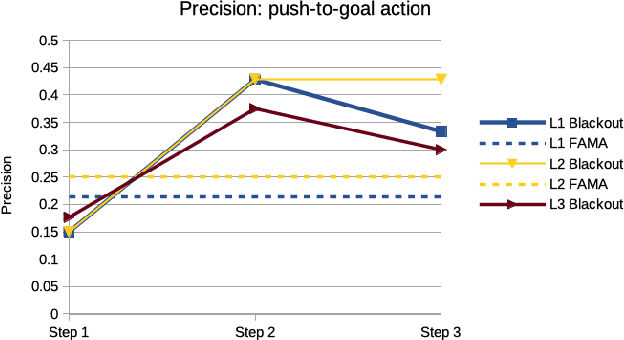

Player modeling attempts to create a computational model which accurately approximates a player's behavior in a game. Most player modeling techniques rely on domain knowledge and are not transferable across games. Additionally, player models do not currently yield any explanatory insight about a player's cognitive processes, such as the creation and refinement of mental models. In this paper, we present our findings with using action model learning (AML), in which an action model is learned given data in the form of a play trace, to learn a player model in a domain-agnostic manner. We demonstrate the utility of this model by introducing a technique to quantitatively estimate how well a player understands the mechanics of a game. We evaluate an existing AML algorithm (FAMA) for player modeling and develop a novel algorithm called Blackout that is inspired by player cognition. We compare Blackout with FAMA using the puzzle game Sokoban and show that Blackout generates better player models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge