Towards a Sustainable Internet-of-Underwater-Things based on AUVs, SWIPT, and Reinforcement Learning


Feb 21, 2023

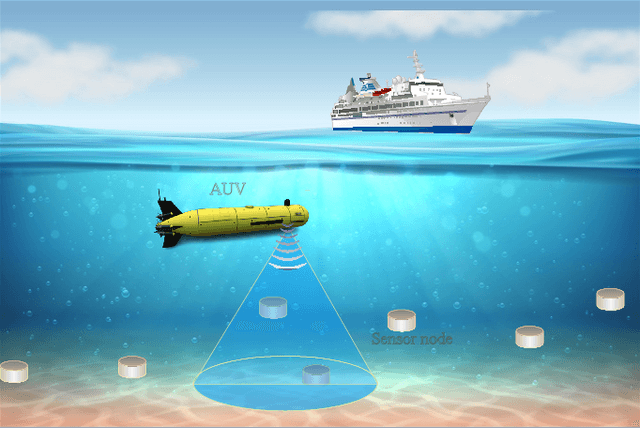

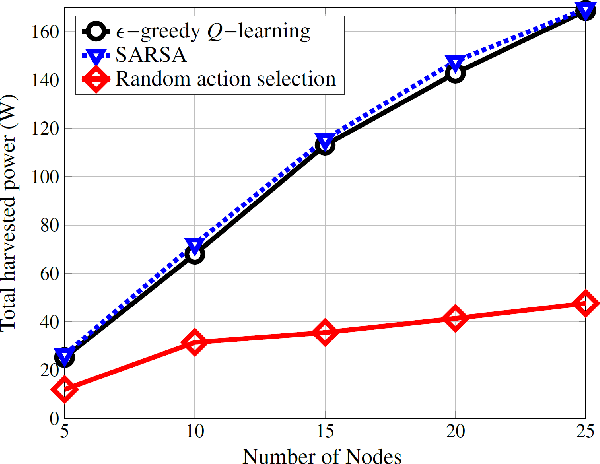

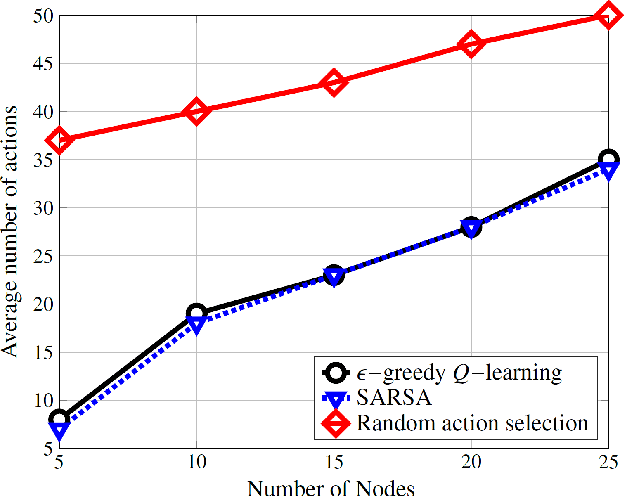

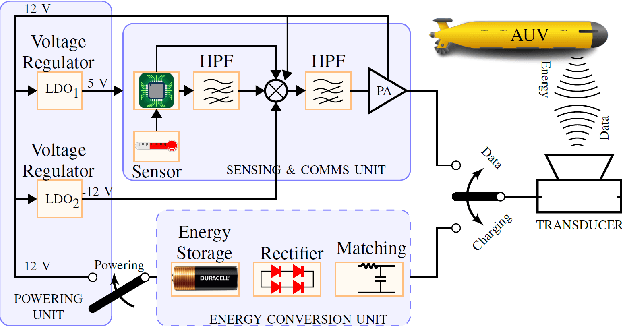

Life on Earth depends on healthy oceans, which supply a large share of the planet's oxygen, food, and energy. However, the oceans are under threat from climate change, which is devastating marine ecosystems and the economic and social systems that depend on them. The Internet-of-Underwater-Things (IoUT), a global interconnection of underwater objects, enables round-the-clock monitoring of the oceans. It provides high-resolution data for training machine learning (ML) algorithms that can rapidly evaluate potential climate change solutions and speed up decision-making. The sensors in conventional IoUT deployments are battery-powered, which limits their lifetime and makes them an environmental hazard once the batteries are depleted. In this paper, we propose a sustainable scheme that improves the throughput and lifetime of underwater networks, potentially enabling them to operate indefinitely. The scheme is based on simultaneous wireless information and power transfer (SWIPT) from an autonomous underwater vehicle (AUV) used for data collection. We model the problem of jointly maximising throughput and harvested power as a Markov Decision Process (MDP) and develop a model-free reinforcement learning (RL) algorithm to solve it. The reward function incentivises the AUV to find trajectories that maximise throughput and power transfer to the underwater nodes while minimising its own energy consumption. To the best of our knowledge, this is the first attempt at using RL to ensure sustainable underwater networks via SWIPT. The scheme is implemented in an open 3D RL environment developed in MATLAB specifically for this study. The results show up to a 207% improvement in energy efficiency over a random-trajectory scheme used as the baseline.
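
To make the MDP and reward structure described above more concrete, here is a minimal sketch in Python. It is not the authors' MATLAB environment or their algorithm: it assumes a hypothetical one-dimensional track with a single sensor node, a toy link model in which both throughput and harvested power decay with distance, illustrative reward weights (`w_t`, `w_p`, `w_e`), and plain tabular Q-learning as one standard example of a model-free RL method.

```python
import random

# All constants below are illustrative assumptions, not values from the paper.
N_CELLS = 5            # hypothetical 1-D track of cells the AUV can occupy
NODE = 4               # hypothetical position of a single sensor node
ACTIONS = (-1, 0, 1)   # move left, hover, move right


def reward(throughput, harvested_power, energy_used, w_t=1.0, w_p=1.0, w_e=0.5):
    """Weighted trade-off: reward throughput and power transfer, penalise energy."""
    return w_t * throughput + w_p * harvested_power - w_e * energy_used


def step(pos, action):
    """Toy transition: move along the track (clamped at the ends), then score it."""
    nxt = min(max(pos + action, 0), N_CELLS - 1)
    link = 1.0 / (1.0 + abs(NODE - nxt))     # toy link quality, decays with distance
    energy = 1.0 if action != 0 else 0.2     # moving costs more than hovering
    return nxt, reward(link, link, energy)


def greedy(q, pos):
    """Action with the highest learned value in the given cell."""
    return max(ACTIONS, key=lambda a: q[(pos, a)])


def train(episodes=2000, steps=30, alpha=0.1, gamma=0.9, eps=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration and random start cells."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        pos = rng.randrange(N_CELLS)
        for _ in range(steps):
            a = rng.choice(ACTIONS) if rng.random() < eps else greedy(q, pos)
            nxt, r = step(pos, a)
            # Model-free update: only sampled transitions are used, never a model.
            target = r + gamma * max(q[(nxt, b)] for b in ACTIONS)
            q[(pos, a)] += alpha * (target - q[(pos, a)])
            pos = nxt
    return q


if __name__ == "__main__":
    q = train()
    # Learned greedy action per cell (+1 = towards the node, 0 = hover).
    print([greedy(q, s) for s in range(N_CELLS)])
```

In this toy setting the energy penalty makes hovering far from the node attractive in the short term, so the agent only learns to travel towards the node because the discounted future throughput and harvested power outweigh the cost of moving. A 3D environment with realistic acoustic and energy-harvesting models, as used in the paper, would need a much larger state space and most likely function approximation instead of a table.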
