Towards A Measure Of General Machine Intelligence

Paper and Code

Sep 24, 2021

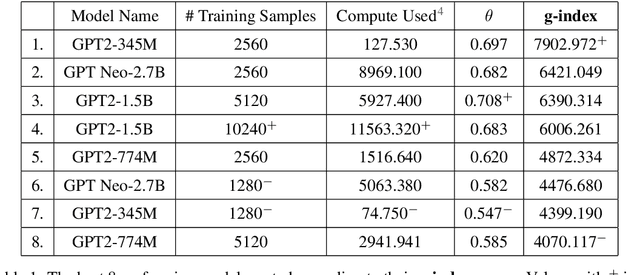

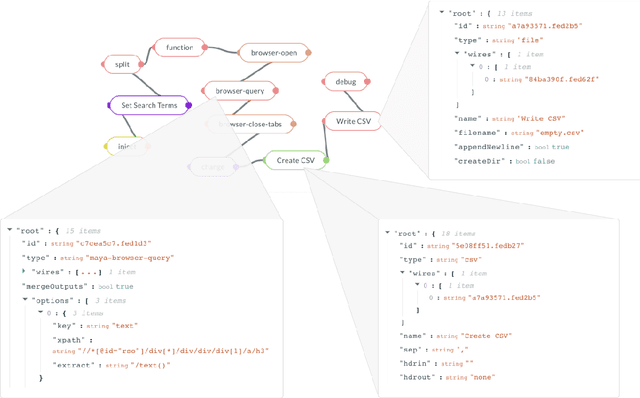

To build increasingly general-purpose artificial intelligence systems that can deal with unknown variables across unknown domains, we need benchmarks that measure precisely how well these systems perform on tasks they have never seen before. A prerequisite for this is a measure of a task's generalization difficulty, or how dissimilar it is from the system's prior knowledge and experience. If the skill of an intelligence system in a particular domain is defined as it's ability to consistently generate a set of instructions (or programs) to solve tasks in that domain, current benchmarks do not quantitatively measure the efficiency of acquiring new skills, making it possible to brute-force skill acquisition by training with unlimited amounts of data and compute power. With this in mind, we first propose a common language of instruction, i.e. a programming language that allows the expression of programs in the form of directed acyclic graphs across a wide variety of real-world domains and computing platforms. Using programs generated in this language, we demonstrate a match-based method to both score performance and calculate the generalization difficulty of any given set of tasks. We use these to define a numeric benchmark called the g-index to measure and compare the skill-acquisition efficiency of any intelligence system on a set of real-world tasks. Finally, we evaluate the suitability of some well-known models as general intelligence systems by calculating their g-index scores.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge