Toward Idealized Decision Theory

Paper and Code

Jul 07, 2015

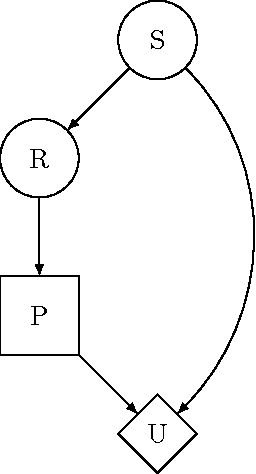

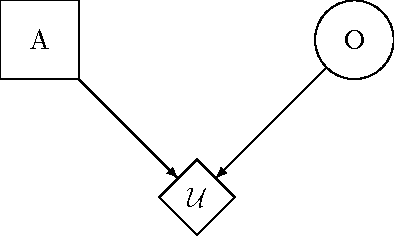

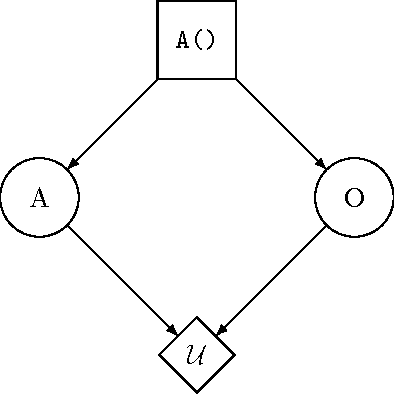

This paper motivates the study of decision theory as necessary for aligning smarter-than-human artificial systems with human interests. We discuss the shortcomings of two standard formulations of decision theory, and demonstrate that they cannot be used to describe an idealized decision procedure suitable for approximation by artificial systems. We then explore the notions of policy selection and logical counterfactuals, two recent insights into decision theory that point the way toward promising paths for future research.

* This is an extended version of a paper accepted to AGI-2015
