Tight Kernel Query Complexity of Kernel Ridge Regression and Kernel $k$-means Clustering


May 15, 2019

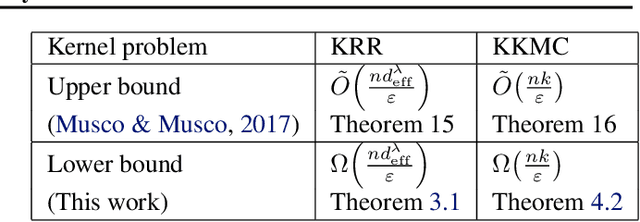

We present tight lower bounds on the number of kernel evaluations required to approximately solve kernel ridge regression (KRR) and kernel $k$-means clustering (KKMC) on $n$ input points. For KRR, our bound for a relative-error approximation to the minimizer of the objective function is $\Omega(nd_{\mathrm{eff}}^\lambda/\varepsilon)$, where $d_{\mathrm{eff}}^\lambda$ is the effective statistical dimension; this is tight up to a $\log(d_{\mathrm{eff}}^\lambda/\varepsilon)$ factor. For KKMC, our bound for finding a $k$-clustering that achieves a relative-error approximation of the objective function is $\Omega(nk/\varepsilon)$, which is tight up to a $\log(k/\varepsilon)$ factor. Our KRR result resolves a variant of an open question of El Alaoui and Mahoney, which asks whether the effective statistical dimension is a lower bound on the sampling complexity. Furthermore, for the important practical case in which the input is a mixture of Gaussians, we provide a KKMC algorithm that bypasses the above lower bound.
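To make the quantity $d_{\mathrm{eff}}^\lambda$ concrete, here is a minimal sketch that computes it for a small kernel matrix. It assumes the common definition $d_{\mathrm{eff}}^\lambda = \mathrm{tr}\big(K(K+\lambda I)^{-1}\big)$; the abstract does not state the normalization, and some works scale $\lambda$ by $n$. The Gaussian kernel, the helper names `rbf_kernel` and `effective_dimension`, and the random data are illustrative choices, not taken from the paper.

```python
import numpy as np

def rbf_kernel(X, gamma=1.0):
    """Gaussian (RBF) kernel matrix; the kernel choice is illustrative only."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-gamma * np.maximum(d2, 0.0))

def effective_dimension(K, lam):
    """Assumed definition: tr(K (K + lam I)^{-1}) = sum_i s_i / (s_i + lam),
    where s_i are the eigenvalues of the PSD kernel matrix K."""
    eigvals = np.linalg.eigvalsh(K)
    eigvals = np.clip(eigvals, 0.0, None)  # guard against tiny negative round-off
    return float(np.sum(eigvals / (eigvals + lam)))

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))  # n = 200 hypothetical input points
K = rbf_kernel(X)                  # note: forming K costs all n^2 kernel evaluations
d_eff = effective_dimension(K, lam=1.0)
print(f"n = {K.shape[0]}, d_eff = {d_eff:.2f}")
```

Under this definition $d_{\mathrm{eff}}^\lambda$ lies between $0$ and $n$ and shrinks as $\lambda$ grows. The sketch forms the full matrix only to illustrate the quantity; the bounds above concern algorithms that read far fewer than $n^2$ kernel entries.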
