Theta-RBM: Unfactored Gated Restricted Boltzmann Machine for Rotation-Invariant Representations


Jun 29, 2016

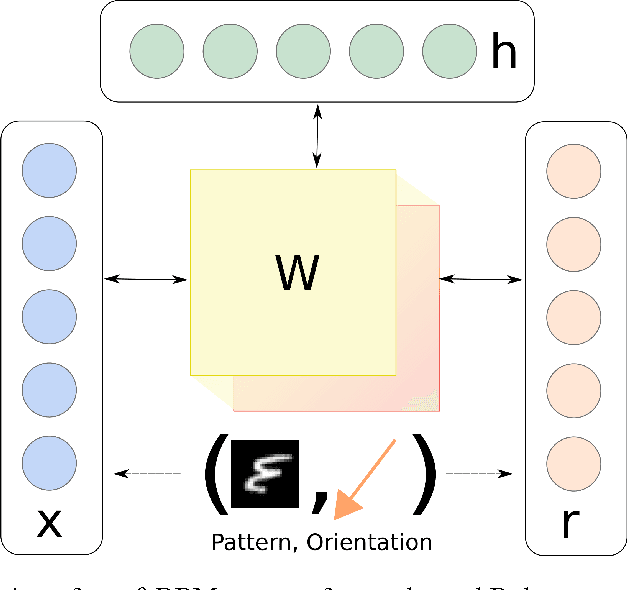

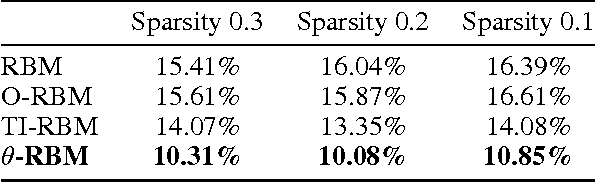

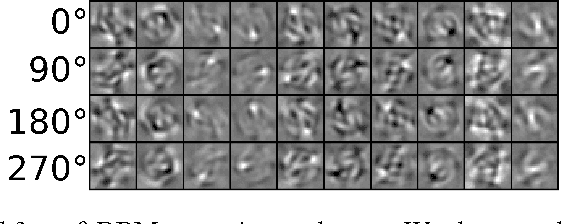

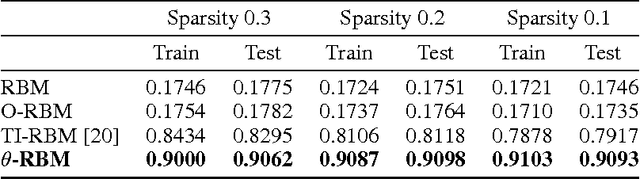

Learning invariant representations is a critical task in computer vision. In this paper, we propose the Theta-Restricted Boltzmann Machine (θ-RBM for short), which builds upon the original RBM formulation and injects the notion of rotation invariance into the learning procedure. In contrast to previous approaches, we do not augment the training set with all possible rotations. Instead, we rotate the gradient filters as they are computed during the Contrastive Divergence algorithm. We formulate our model as an unfactored gated Boltzmann machine, in which an additional input layer modulates the visible input layer to drive the optimisation procedure. Among our contributions is a mathematical proof that θ-RBM is able to learn rotation-invariant features according to a recently proposed invariance measure. Our method reaches an invariance score of ~90% on the mnist-rot dataset, which is higher than both the baseline methods and the current state of the art in transformation-invariant feature learning with RBMs. Using an SVM classifier, we also show that our network learns discriminative features, obtaining a test error of ~10%.
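To make the idea of rotating gradient filters inside Contrastive Divergence more concrete, here is a minimal NumPy sketch of a single CD-1 weight gradient in which each hidden unit's gradient is reshaped into a square filter and rotated. This is an illustration under stated assumptions, not the authors' implementation: the function name `cd1_rotated_gradient`, the restriction to multiples of 90 degrees (via `np.rot90`), the omission of bias terms, and the omission of the gating layer are all simplifications introduced here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cd1_rotated_gradient(W, v0, side, k, rng):
    """CD-1 weight gradient with every filter gradient rotated by k * 90 degrees.

    Hypothetical helper for illustration only (biases and gating omitted).
    W    : (side * side, n_hidden) weight matrix
    v0   : (batch, side * side) binary visible data
    side : width/height of the square input image
    k    : number of 90-degree rotations applied to each gradient filter
    rng  : np.random.Generator used to sample the hidden states
    """
    # Positive phase: hidden probabilities and a binary sample given the data.
    h0_prob = sigmoid(v0 @ W)
    h0 = (rng.random(h0_prob.shape) < h0_prob).astype(W.dtype)

    # Negative phase: one Gibbs step back to the visibles and up again.
    v1_prob = sigmoid(h0 @ W.T)
    h1_prob = sigmoid(v1_prob @ W)

    # Standard CD-1 estimate of the weight gradient, averaged over the batch.
    grad = (v0.T @ h0_prob - v1_prob.T @ h1_prob) / v0.shape[0]

    # View each column as a side x side filter and rotate it in the image plane.
    filters = grad.reshape(side, side, -1)
    rotated = np.rot90(filters, k=k, axes=(0, 1))
    return rotated.reshape(side * side, -1)
```

With `k=0` the function reduces to an ordinary CD-1 weight gradient, so the rotation step can be seen as the only departure from the standard update in this sketch. Arbitrary angles would need an interpolating image rotation instead of `np.rot90`.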
