The thermodynamic temperature of a rhythmic spiking network


Sep 28, 2010

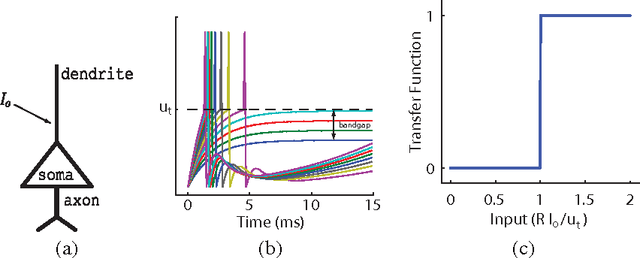

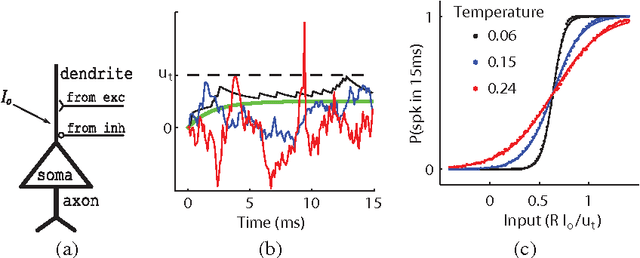

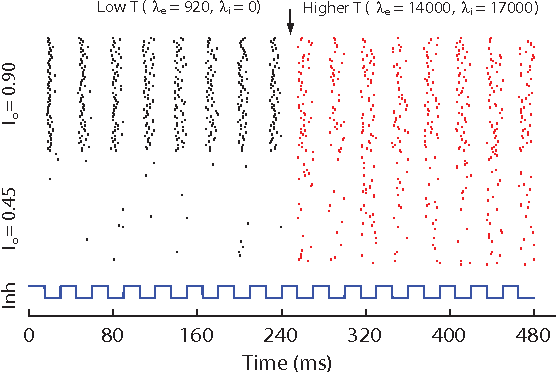

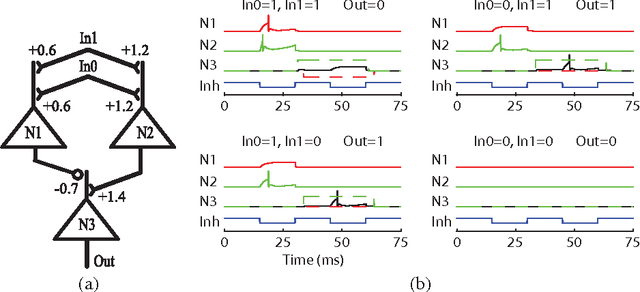

Artificial neural networks built from two-state neurons are powerful computational substrates whose computational abilities are well understood by analogy with statistical mechanics. In this work, we introduce similar analogies for spiking neurons observed over a fixed time window, where excitatory and inhibitory inputs drawn from a Poisson distribution play the role of temperature. For single neurons with a "bandgap" between their inputs and the spike threshold, this temperature allows for stochastic spiking. By imposing a global inhibitory rhythm over the fixed time windows, we connect neurons into a network that exhibits synchronous, clock-like updating akin to that of artificial neural networks. To demonstrate the model, we implement a single-layer Boltzmann machine without learning.
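The idea that Poisson input noise acts like a temperature can be illustrated with a toy simulation. The sketch below is not the paper's model: the function names, the unit synaptic weight, the equal excitatory and inhibitory rates, and the simple "net input reaches threshold within the window" spike rule are all assumptions made for illustration. It only shows that a neuron whose deterministic drive sits below threshold (the "bandgap") never spikes without noise and spikes more often as the Poisson rate grows.

```python
import math
import random


def poisson_sample(rate, rng):
    """Draw one Poisson variate using Knuth's multiplication method.

    Suitable for the small rates used here; hypothetical helper, not from the paper.
    """
    limit = math.exp(-rate)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def spike_probability(drive, threshold, noise_rate, weight=1.0, trials=20000, seed=0):
    """Estimate the chance that a neuron spikes in one fixed time window.

    Assumed toy rule: the neuron spikes if its deterministic drive plus the
    weighted difference of excitatory and inhibitory Poisson counts reaches
    the threshold. `noise_rate` is the mean count of each input type per window.
    """
    rng = random.Random(seed)
    spikes = 0
    for _ in range(trials):
        excitatory = poisson_sample(noise_rate, rng)
        inhibitory = poisson_sample(noise_rate, rng)
        if drive + weight * (excitatory - inhibitory) >= threshold:
            spikes += 1
    return spikes / trials


if __name__ == "__main__":
    # The gap between drive (0.0) and threshold (2.0) plays the role of the bandgap.
    for rate in (0.0, 0.5, 2.0, 4.0):
        p = spike_probability(drive=0.0, threshold=2.0, noise_rate=rate)
        print(f"noise rate {rate:>3}: estimated spike probability {p:.3f}")
```

Under these assumptions, raising `noise_rate` increases the estimated spike probability for a fixed gap, which is the qualitative behavior one would expect from a temperature-like parameter.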
