The structure of evolved representations across different substrates for artificial intelligence

Apr 05, 2018

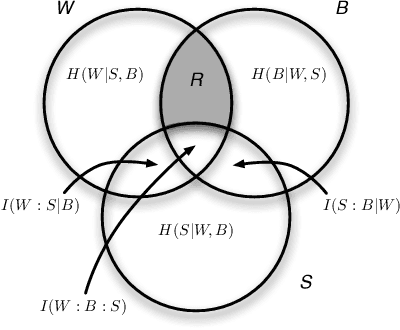

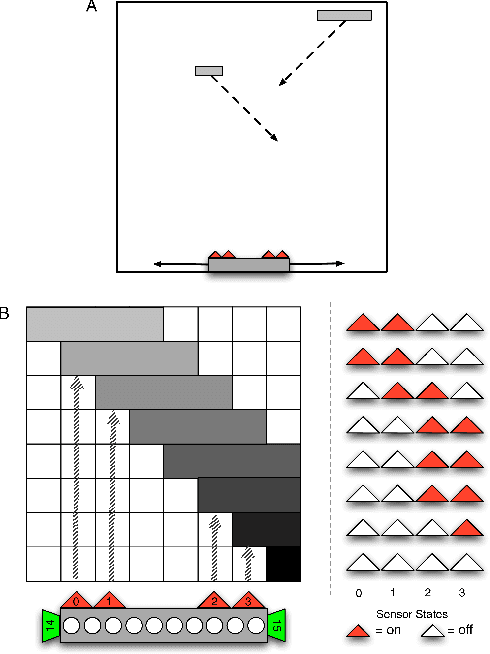

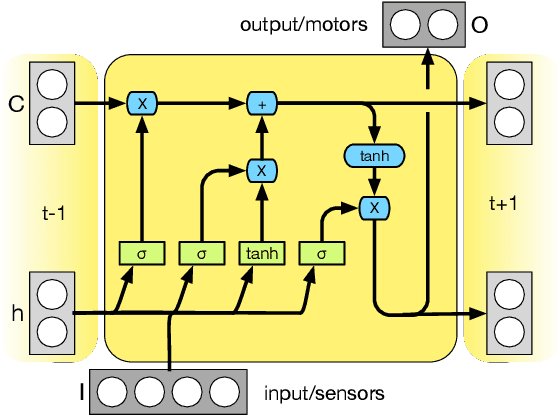

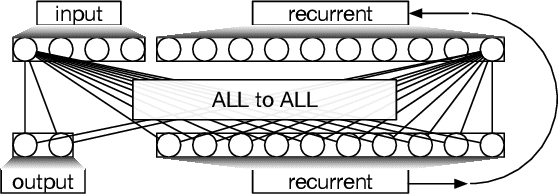

Artificial neural networks (ANNs), while exceptionally useful for classification, are vulnerable to misdirection. Small amounts of noise can significantly affect their ability to correctly complete a task. Instead of generalizing concepts, ANNs seem to focus on surface statistical regularities in a given task. Here we compare how recurrent artificial neural networks, long short-term memory units, and Markov Brains sense and remember their environments. We show that information in Markov Brains is localized and sparsely distributed, while the other neural network substrates "smear" information about the environment across all nodes, which makes them vulnerable to noise.

* 8 pages, 13 figures. Submitted to Artificial Life Conference (Tokyo, 2018)
