The State of Knowledge Distillation for Classification

Paper and Code

Dec 20, 2019

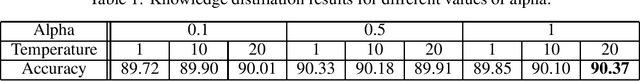

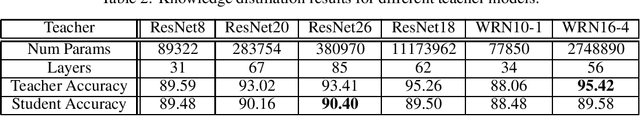

We survey various knowledge distillation (KD) strategies for simple classification tasks and implement a set of techniques that claim state-of-the-art accuracy. Our experiments using standardized model architectures, fixed compute budgets, and consistent training schedules indicate that many of these distillation results are hard to reproduce. This is especially apparent with methods using some form of feature distillation. Further examination reveals a lack of generalizability where these techniques may only succeed for specific architectures and training settings. We observe that appropriately tuned classical distillation in combination with a data augmentation training scheme gives an orthogonal improvement over other techniques. We validate this approach and open-source our code.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge