The role of grammar in transition-probabilities of subsequent words in English text

Paper and Code

Dec 28, 2018

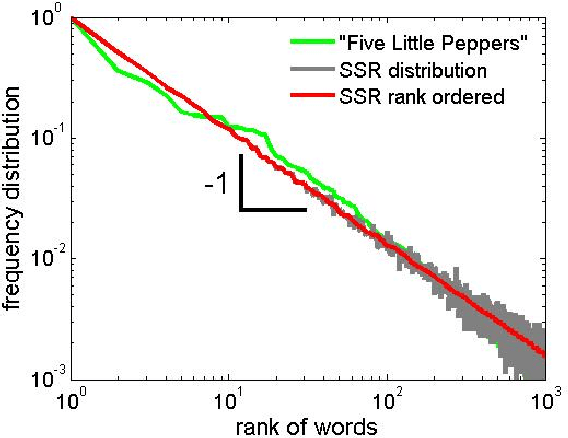

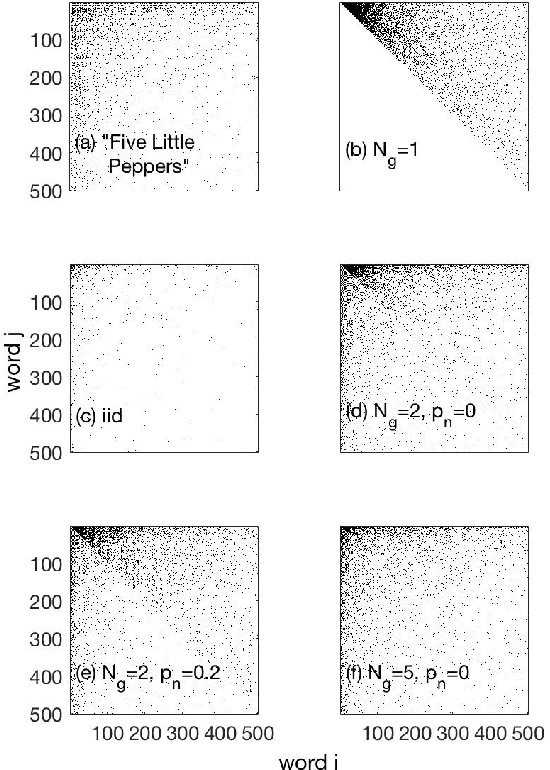

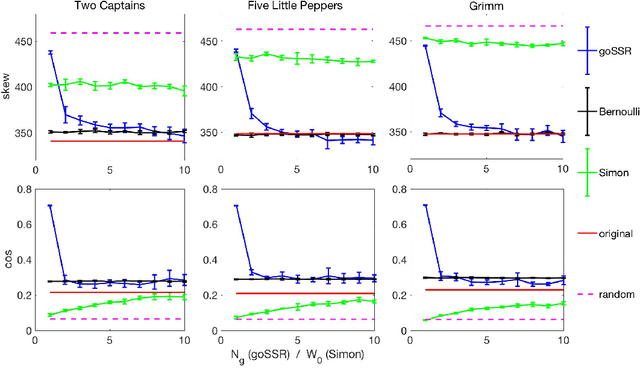

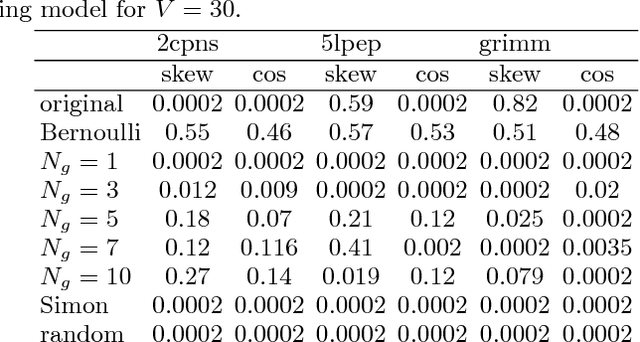

Sentence formation is a highly structured, history-dependent, and sample-space reducing (SSR) process. While the first word in a sentence can be chosen from the entire vocabulary, the freedom to choose subsequent words typically becomes more and more constrained by grammar and context as the sentence progresses. This sample-space reducing property offers a natural explanation of Zipf's law in word frequencies; however, it fails to capture the structure of the word-to-word transition probability matrices of English text. Here we adopt the view that grammatical constraints (such as subject--predicate--object) locally re-order the words in sentences that are sampled with an SSR word generation process. We demonstrate that superimposing grammatical structure, as a local word re-ordering (permutation) process, on a sample-space reducing process is sufficient to explain both word frequencies and word-to-word transition probabilities. We compare the grammatically ordered SSR model with other text generation models, such as the Bernoulli model, the Simon model, and the Monkey typewriting model, in how well each reproduces several test statistics of real texts.
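The two ingredients named in the abstract, an SSR word generator and a local permutation step, can be illustrated with a minimal Python sketch. This is not the authors' implementation. Words are represented by integer ranks `1..n_states`, and each sentence starts at a uniformly chosen rank and jumps to strictly lower ranks until it reaches rank 1. The function names, the fixed-size shuffle `window`, and the parameter values are all assumptions made for illustration. In particular, shuffling within fixed blocks is only a hypothetical stand-in for grammatical re-ordering, whose actual form is not specified in the abstract.

```python
import random
from collections import Counter


def ssr_sentence(n_states, rng):
    """One SSR run: start at a uniformly chosen state in 1..n_states, then
    jump to a uniformly chosen strictly lower state until state 1 is hit."""
    state = rng.randint(1, n_states)
    sentence = [state]
    while state > 1:
        state = rng.randint(1, state - 1)  # sample space shrinks at every step
        sentence.append(state)
    return sentence


def local_reorder(sentence, window, rng):
    """Shuffle words inside consecutive blocks of `window` words.
    Hypothetical stand-in for grammar: a purely local permutation."""
    out = []
    for i in range(0, len(sentence), window):
        block = sentence[i:i + window]
        rng.shuffle(block)
        out.extend(block)
    return out


def word_counts(sentences):
    """Count how often each word (state) occurs across all sentences."""
    return Counter(w for s in sentences for w in s)


def transition_counts(sentences):
    """Count word-to-word transitions (a, b) between adjacent words."""
    counts = Counter()
    for s in sentences:
        counts.update(zip(s, s[1:]))
    return counts


if __name__ == "__main__":
    rng = random.Random(0)
    plain = [ssr_sentence(1000, rng) for _ in range(20000)]
    ordered = [local_reorder(s, 3, rng) for s in plain]
    # Word frequencies are untouched by the permutation step...
    print(word_counts(plain) == word_counts(ordered))
    # ...while the transition structure changes: ascending transitions
    # never occur in the plain SSR text but do occur after re-ordering.
    print(sum(c for (a, b), c in transition_counts(ordered).items() if a < b))
```

Because the permutation only rearranges words within a sentence, it leaves the word-frequency distribution of the SSR process unchanged and affects only the transition counts, which is the separation of effects the abstract relies on.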
