The Others: Naturally Isolating Out-of-Distribution Samples for Robust Open-Set Semi-Supervised Learning

Apr 17, 2025

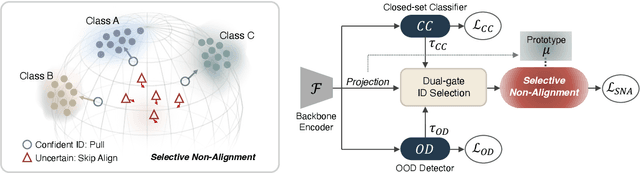

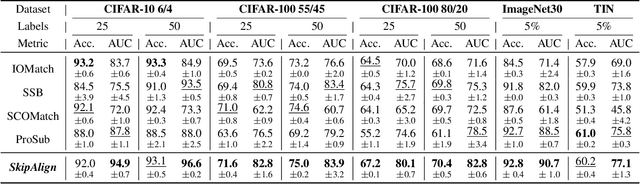

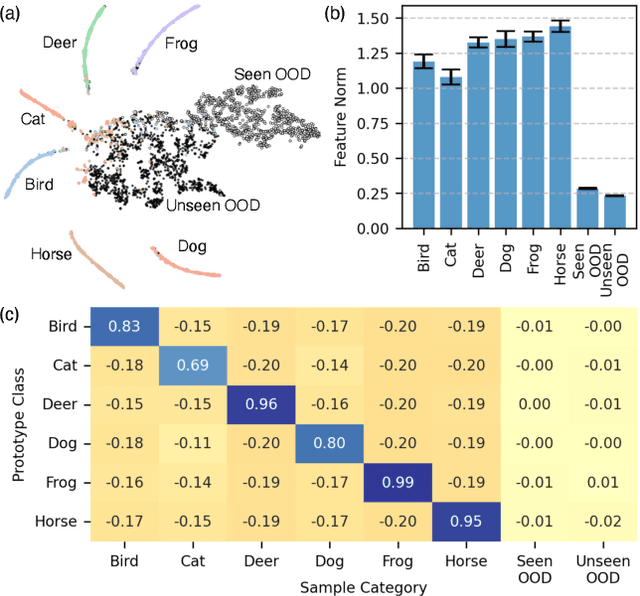

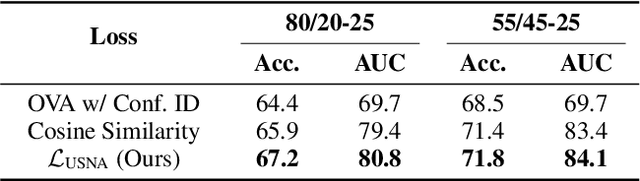

Open-Set Semi-Supervised Learning (OSSL) tackles the practical challenge of learning from unlabeled data that may include both in-distribution (ID) classes and unknown out-of-distribution (OOD) classes. However, existing OSSL methods form suboptimal feature spaces because they exclude OOD samples, interfere with them, or overtrust their information during training. In this work, we introduce MagMatch, a novel framework that naturally isolates OOD samples through a prototype-based contrastive learning paradigm. Unlike conventional methods, MagMatch does not assign any prototypes to OOD samples; instead, it selectively aligns ID samples with class prototypes using an ID-Selective Magnetic (ISM) module, while allowing OOD samples (the "others") to remain unaligned in the feature space. To support this process, we propose a Selective Magnetic Alignment (SMA) loss for unlabeled data, which dynamically adjusts alignment based on sample confidence. Extensive experiments on diverse datasets demonstrate that MagMatch significantly outperforms existing methods in both closed-set classification accuracy and OOD detection AUROC, especially when generalizing to unseen OOD data.
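The abstract does not give the form of the SMA loss, so the following is only a minimal sketch of the general idea of confidence-gated prototype alignment, not the authors' implementation. It assumes cosine similarity to class prototypes, a softmax over those similarities as the confidence score, and a hard confidence threshold. The function name `selective_alignment_loss` and the parameters `temperature` and `threshold` are hypothetical.

```python
import numpy as np

def selective_alignment_loss(features, prototypes, temperature=0.1, threshold=0.8):
    """Hypothetical confidence-gated prototype alignment (not the paper's SMA loss).

    features:   (N, D) array of unlabeled sample embeddings.
    prototypes: (C, D) array with one prototype per ID class (none for OOD).
    Returns the mean weighted loss and the per-sample alignment weights.
    """
    eps = 1e-12
    # L2-normalize so the dot product below is cosine similarity.
    f = features / (np.linalg.norm(features, axis=1, keepdims=True) + eps)
    p = prototypes / (np.linalg.norm(prototypes, axis=1, keepdims=True) + eps)

    # Temperature-scaled similarities, turned into a softmax over ID classes.
    logits = f @ p.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs = probs / probs.sum(axis=1, keepdims=True)

    confidence = probs.max(axis=1)   # assumed confidence score
    pseudo = probs.argmax(axis=1)    # nearest prototype as pseudo-label

    # Assumption: low-confidence samples (likely OOD) get zero weight, so no
    # gradient would pull them toward any prototype; they stay unaligned.
    weights = np.where(confidence >= threshold, confidence, 0.0)

    nll = -np.log(probs[np.arange(len(f)), pseudo] + eps)
    loss = float((weights * nll).sum() / max(len(f), 1))
    return loss, weights

# Two ID prototypes; the first sample sits on prototype 0, the second is
# equidistant from both and is therefore left unaligned.
prototypes = np.array([[1.0, 0.0], [0.0, 1.0]])
features = np.array([[1.0, 0.0], [1.0, 1.0]])
loss, weights = selective_alignment_loss(features, prototypes)
```

In this sketch the ambiguous sample contributes nothing to the loss, which mirrors the abstract's description of OOD samples remaining unaligned. A soft weighting scheme would be an equally plausible reading of "dynamically adjusts alignment based on sample confidence".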
