The Good, The Efficient and the Inductive Biases: Exploring Efficiency in Deep Learning Through the Use of Inductive Biases


Nov 14, 2024

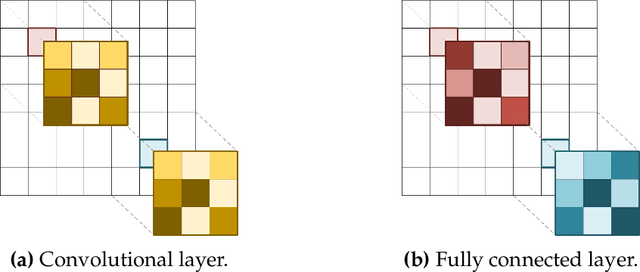

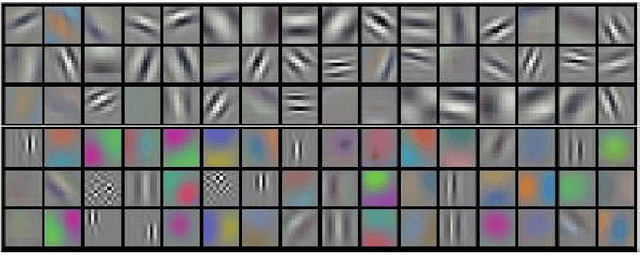

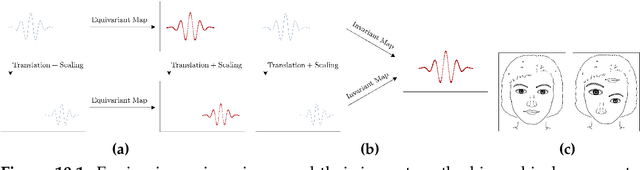

The emergence of Deep Learning has marked a profound shift in machine learning, driven by numerous breakthroughs achieved in recent years. However, as Deep Learning becomes increasingly present in everyday tools and applications, there is a growing need to address unresolved challenges related to its efficiency and sustainability. This dissertation examines the role of inductive biases, in particular continuous modeling and symmetry preservation, as strategies to enhance the efficiency of Deep Learning. It is structured in two main parts. The first part investigates continuous modeling as a tool to improve the efficiency of Deep Learning algorithms. Continuous modeling parameterizes neural operations in a continuous space. The research presented here demonstrates substantial benefits for (i) computational efficiency (in time and memory), (ii) parameter efficiency, and (iii) design efficiency (the complexity of designing neural architectures for new datasets and tasks). The second part focuses on the role of symmetry preservation in Deep Learning efficiency. Symmetry preservation involves designing neural operations that align with the inherent symmetries of data. The research presented in this part highlights significant gains in both data and parameter efficiency through the use of symmetry preservation. However, it also acknowledges a resulting trade-off of increased computational costs. The dissertation concludes with a critical evaluation of these findings, openly discussing their limitations and proposing strategies to address them, informed by the literature and the author's insights. It ends by identifying promising future research avenues in the exploration of inductive biases for efficiency, and their wider implications for Deep Learning.
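The two inductive biases named in the abstract can be illustrated with a toy NumPy sketch. It is not the dissertation's implementation. The names `make_kernel_generator` and `circular_conv`, the sine-activated two-layer network, and the random (untrained) weights are all assumptions chosen for illustration. The first function treats a convolutional kernel as a function of a continuous coordinate, so its parameter count does not depend on how many points the kernel is sampled at. The second function is a circular convolution, an operation that commutes with cyclic shifts of its input and therefore preserves translation symmetry.

```python
import numpy as np


def make_kernel_generator(hidden=16, seed=0):
    """Return a kernel defined over continuous positions, plus its parameter count.

    Hypothetical illustration: a tiny, untrained two-layer network maps a
    position in [-1, 1] to a kernel value.
    """
    rng = np.random.default_rng(seed)
    w1 = rng.normal(size=(1, hidden))
    b1 = rng.normal(size=hidden)
    w2 = rng.normal(size=(hidden, 1)) / np.sqrt(hidden)

    def kernel(positions):
        # Evaluate the kernel at arbitrary continuous positions.
        x = np.asarray(positions, dtype=float).reshape(-1, 1)
        h = np.sin(x @ w1 + b1)
        return (h @ w2).ravel()

    n_params = w1.size + b1.size + w2.size
    return kernel, n_params


def circular_conv(signal, kernel):
    """Circular convolution via the FFT (kernel is zero-padded to the signal length)."""
    n = len(signal)
    padded = np.zeros(n)
    padded[: len(kernel)] = kernel
    return np.real(np.fft.ifft(np.fft.fft(signal) * np.fft.fft(padded)))


if __name__ == "__main__":
    kernel, n_params = make_kernel_generator()

    # Continuous modeling: the same parameters yield kernels of any size.
    small = kernel(np.linspace(-1.0, 1.0, 8))
    large = kernel(np.linspace(-1.0, 1.0, 64))
    print(n_params, small.shape, large.shape)

    # Symmetry preservation: shifting the input shifts the output identically.
    signal = np.random.default_rng(1).normal(size=64)
    shifted_then_conv = circular_conv(np.roll(signal, 5), small)
    conv_then_shifted = np.roll(circular_conv(signal, small), 5)
    print(np.allclose(shifted_then_conv, conv_then_shifted))
```

In this sketch the kernel can be sampled at 8 or 64 points without adding parameters, which is the sense in which a continuous parameterization decouples kernel size from parameter count. The shift check shows the equivariance property that symmetry-preserving operations are designed to guarantee.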
