The Faults in Our Pi Stars: Security Issues and Open Challenges in Deep Reinforcement Learning

Paper and Code

Oct 23, 2018

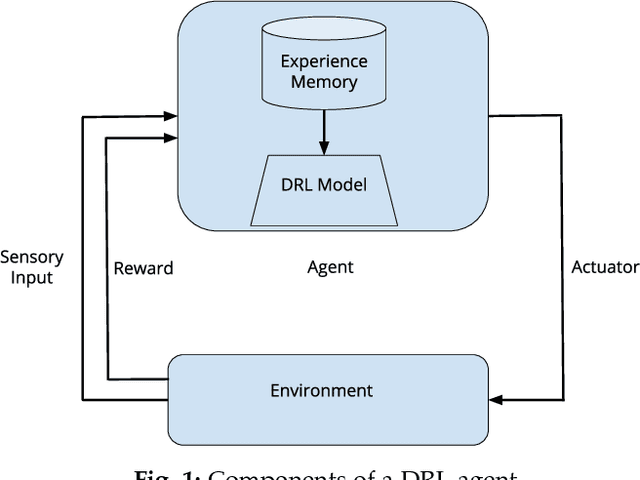

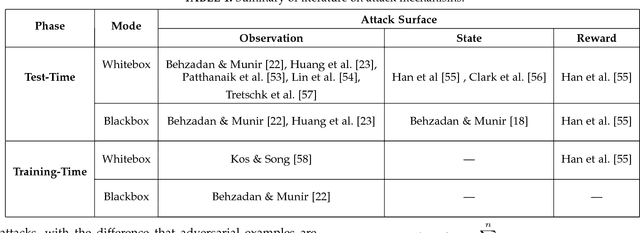

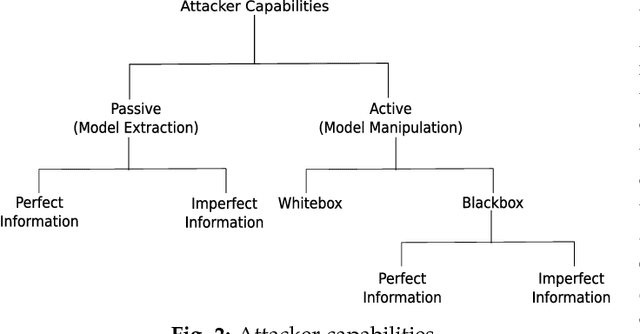

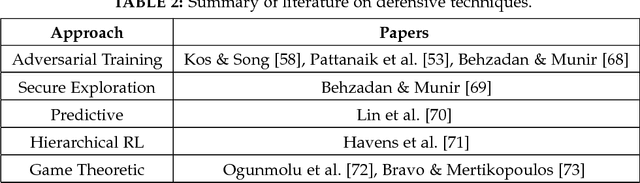

Since the inception of Deep Reinforcement Learning (DRL) algorithms, there has been a growing interest in both research and industrial communities in the promising potentials of this paradigm. The list of current and envisioned applications of deep RL ranges from autonomous navigation and robotics to control applications in the critical infrastructure, air traffic control, defense technologies, and cybersecurity. While the landscape of opportunities and the advantages of deep RL algorithms are justifiably vast, the security risks and issues in such algorithms remain largely unexplored. To facilitate and motivate further research on these critical challenges, this paper presents a foundational treatment of the security problem in DRL. We formulate the security requirements of DRL, and provide a high-level threat model through the classification and identification of vulnerabilities, attack vectors, and adversarial capabilities. Furthermore, we present a review of current literature on security of deep RL from both offensive and defensive perspectives. Lastly, we enumerate critical research venues and open problems in mitigation and prevention of intentional attacks against deep RL as a roadmap for further research in this area.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge