Text-Independent Speaker Verification Based on Deep Neural Networks and Segmental Dynamic Time Warping


Jun 26, 2018

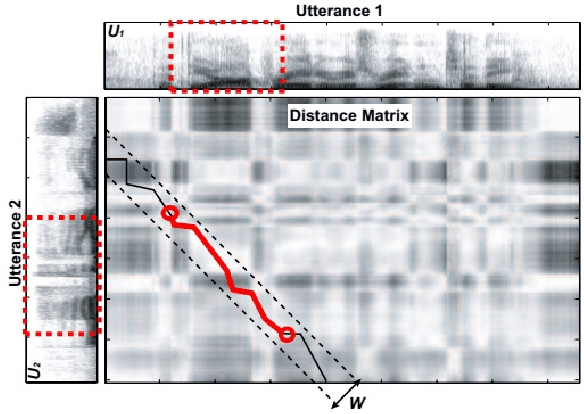

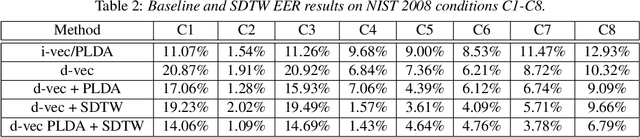

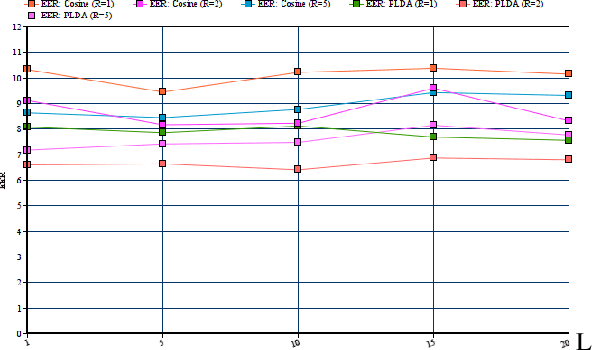

In this paper we present a new method for text-independent speaker verification that combines segmental dynamic time warping (SDTW) and the d-vector approach. The d-vectors, generated from a feed-forward deep neural network trained to distinguish between speakers, are used as features to perform alignment and hence calculate the overall distance between the enrolment and test utterances. We present results on the NIST 2008 data set for speaker verification, where the proposed method outperforms the conventional i-vector baseline with PLDA scores and also outperforms the d-vector approach with local distances based on cosine and PLDA scores. In addition, score combination with the i-vector/PLDA baseline leads to significant gains over both methods.
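The alignment idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes frame-level d-vectors are already available as NumPy arrays, uses cosine distance as the local distance, and uses a simplified segmental DTW in which each alignment path is confined to one diagonal band. The function names, the band `radius`, the minimum path length `min_len`, and the choice to take the lowest band cost as the utterance-level distance are all assumptions made for illustration.

```python
import numpy as np

def cosine_distance_matrix(enrol, test):
    """Pairwise cosine distance between two sequences of frame-level vectors."""
    e = enrol / np.linalg.norm(enrol, axis=1, keepdims=True)
    t = test / np.linalg.norm(test, axis=1, keepdims=True)
    # Clip to remove tiny negative values caused by floating-point rounding.
    return np.clip(1.0 - e @ t.T, 0.0, 2.0)

def banded_dtw_cost(dist, offset, radius, min_len):
    """Best length-normalised path cost inside the band |(j - i) - offset| <= radius.

    Paths start on the first row or column and end on the last row or column.
    The recursion minimises accumulated cost and normalises afterwards, which
    only approximates minimising the normalised cost directly.
    """
    n, m = dist.shape
    acc = np.full((n, m), np.inf)          # accumulated cost per cell
    length = np.zeros((n, m), dtype=int)   # path length per cell
    best = np.inf
    for i in range(n):
        lo = max(0, i + offset - radius)
        hi = min(m - 1, i + offset + radius)
        for j in range(lo, hi + 1):
            if i == 0 or j == 0:
                # Edge cells inside the band act as path start points.
                acc[i, j] = dist[i, j]
                length[i, j] = 1
            else:
                preds = [(i - 1, j - 1), (i - 1, j), (i, j - 1)]
                pi, pj = min(preds, key=lambda p: acc[p])
                if not np.isfinite(acc[pi, pj]):
                    continue  # no valid predecessor inside the band
                acc[i, j] = acc[pi, pj] + dist[i, j]
                length[i, j] = length[pi, pj] + 1
            if (i == n - 1 or j == m - 1) and length[i, j] >= min_len:
                best = min(best, acc[i, j] / length[i, j])
    return best

def sdtw_distance(enrol, test, radius=2, min_len=10):
    """Utterance-level distance: lowest normalised cost over all diagonal bands.

    Returns inf if no band contains a path of at least min_len steps.
    """
    dist = cosine_distance_matrix(enrol, test)
    n, m = dist.shape
    # Band centres are spaced so that neighbouring bands tile the matrix.
    offsets = range(-(n - 1), m, 2 * radius + 1)
    return min(banded_dtw_cost(dist, k, radius, min_len) for k in offsets)
```

A verification decision would then compare `sdtw_distance(enrol_dvectors, test_dvectors)` against a threshold tuned on development data. The abstract also mentions PLDA-based local distances, which this sketch does not cover.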

* Submitted to SLT 2018
