TexRel: a Green Family of Datasets for Emergent Communications on Relations

Paper and Code

May 26, 2021

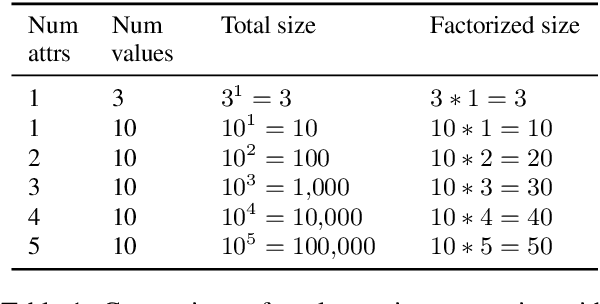

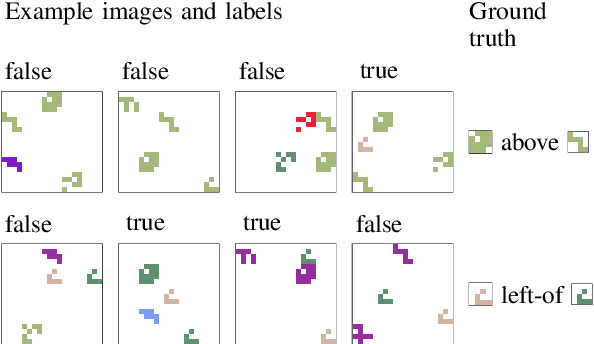

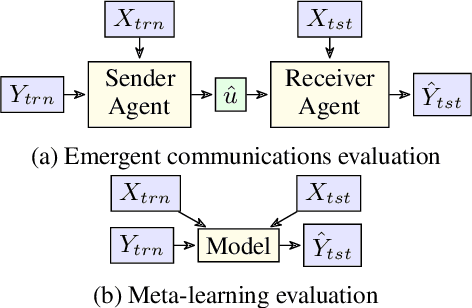

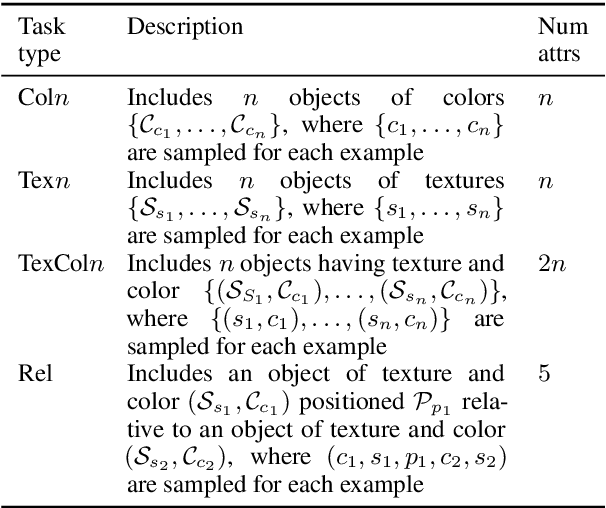

We propose a new dataset, TexRel, as a playground for the study of emergent communications, in particular for relations. Compared with other relations datasets, TexRel provides rapid training and experimentation, whilst being sufficiently large to avoid overfitting in the context of emergent communications. Compared with symbolic inputs, TexRel provides a more realistic alternative whilst remaining efficient and fast to learn. We compare the performance of TexRel with that of a related relations dataset, Shapeworld. We provide baseline performance results on TexRel for sender architectures, receiver architectures and end-to-end architectures. We examine the effect of multitask learning, in the context of shapes, colors and relations, on accuracy, topological similarity and clustering precision. We investigate whether increasing the size of the latent meaning space improves metrics of compositionality. Finally, we carry out a case study in which we use TexRel to reproduce an experiment from a recent paper that used symbolic inputs, replacing them with TexRel's non-symbolic inputs.
