Task-Oriented Mulsemedia Communication using Unified Perceiver and Conformal Prediction in 6G Metaverse Systems


May 14, 2024

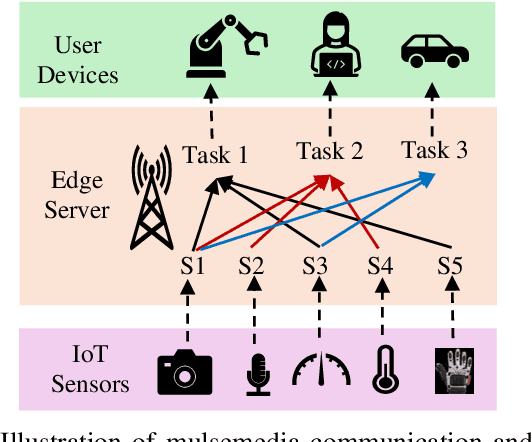

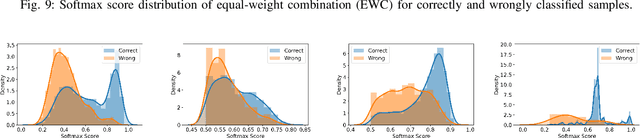

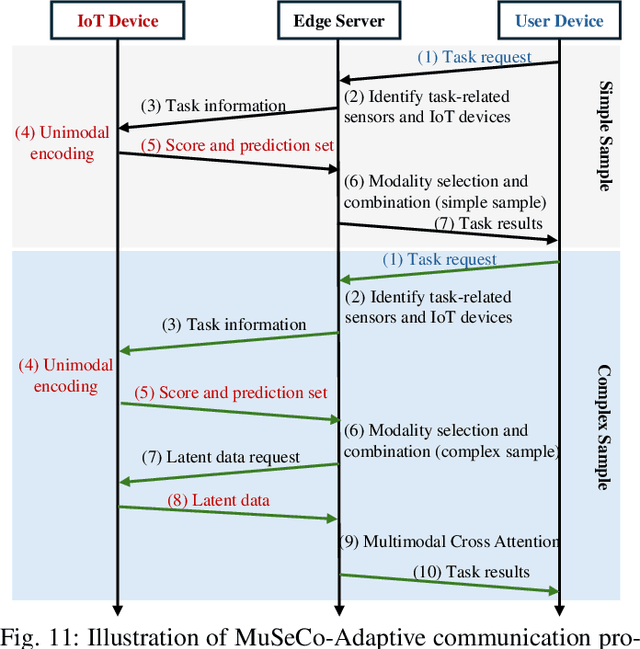

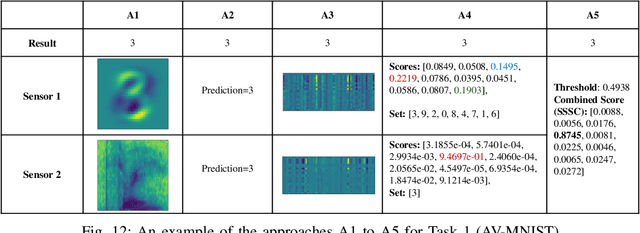

The growing prominence of extended reality (XR), holographic-type communications, and the metaverse demands truly immersive user experiences that engage many sensory modalities, including sight, hearing, touch, smell, and taste. In addition, the widespread deployment of sensors in areas such as agriculture, manufacturing, and smart homes is generating a diverse array of sensory data. A new media format known as multisensory media (mulsemedia) has emerged, which incorporates a wide range of sensory modalities beyond traditional visual and auditory media. 6G wireless systems are envisioned to support the internet of senses, making it crucial to explore effective data fusion and communication strategies for mulsemedia. In this paper, we introduce MuSeCo, a task-oriented, multi-task mulsemedia communication system built on unified Perceiver models and Conformal Prediction. The unified model can accept any sensory input and efficiently extract latent semantic features, making it adaptable for deployment across various Artificial Intelligence of Things (AIoT) devices. Conformal Prediction is employed for modality selection and combination, enhancing task accuracy while minimizing data communication overhead. The model has been trained using six sensory modalities across four classification tasks. Simulations and experiments demonstrate that MuSeCo can effectively select and combine sensory modalities, significantly reduce end-to-end communication latency and energy consumption, and maintain high accuracy in communication-constrained systems.
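To make the role of Conformal Prediction in modality selection more concrete, the following is a minimal sketch of standard split conformal prediction for classification, followed by one plausible selection rule. It is not the MuSeCo implementation: the function names, the nonconformity score (one minus the softmax probability of the true class), the per-modality communication costs, and the rule "prefer the modality with the smallest prediction set, breaking ties by cost" are all assumptions made for illustration.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha):
    """Split-conformal threshold from held-out calibration data.

    cal_probs: list of per-class probability lists (one per calibration sample)
    cal_labels: list of true class indices
    alpha: target miscoverage rate (e.g. 0.1 for 90% coverage)
    """
    # Nonconformity score (assumed): 1 - probability assigned to the true class.
    scores = sorted(1.0 - p[y] for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1.0 - alpha))  # rank of the conformal quantile
    if k > n:
        # Too few calibration samples for this alpha: include every class.
        return float("inf")
    return scores[k - 1]


def prediction_set(probs, qhat):
    """Classes whose nonconformity score does not exceed the threshold."""
    return [c for c, p in enumerate(probs) if 1.0 - p <= qhat]


def select_modality(probs_by_modality, qhat_by_modality, cost_by_modality):
    """Hypothetical rule: smallest prediction set wins, cheaper modality on ties."""
    def key(name):
        probs = probs_by_modality[name]
        size = len(prediction_set(probs, qhat_by_modality[name]))
        if size == 0:
            # An empty set carries no usable prediction; rank it like a full set.
            size = len(probs)
        return (size, cost_by_modality[name])

    return min(probs_by_modality, key=key)
```

A smaller prediction set indicates that a modality's classifier is more certain at the chosen coverage level, so under this assumed rule a device would transmit only the cheapest modality that is already sufficiently decisive. Combining modalities could be sketched the same way by treating each fused subset as one more candidate.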
