Task-Driven Data Augmentation for Vision-Based Robotic Control

Apr 26, 2022

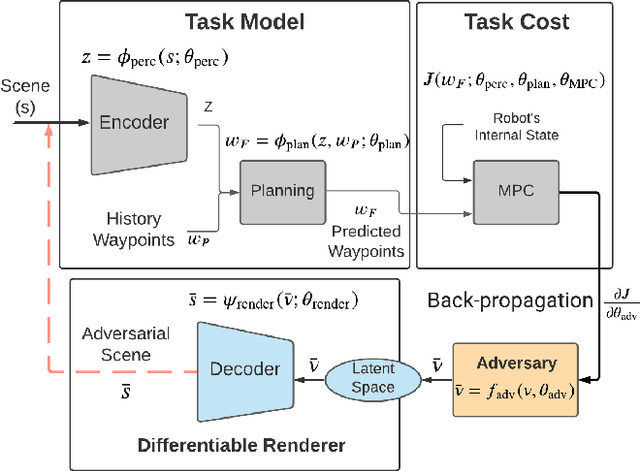

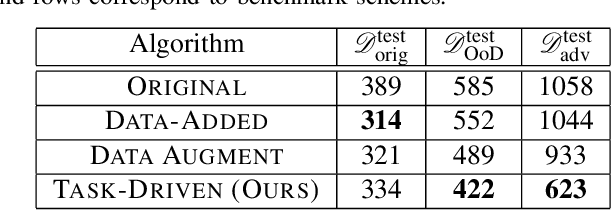

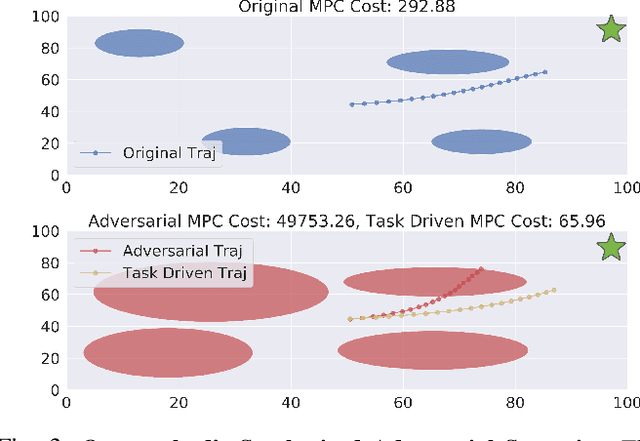

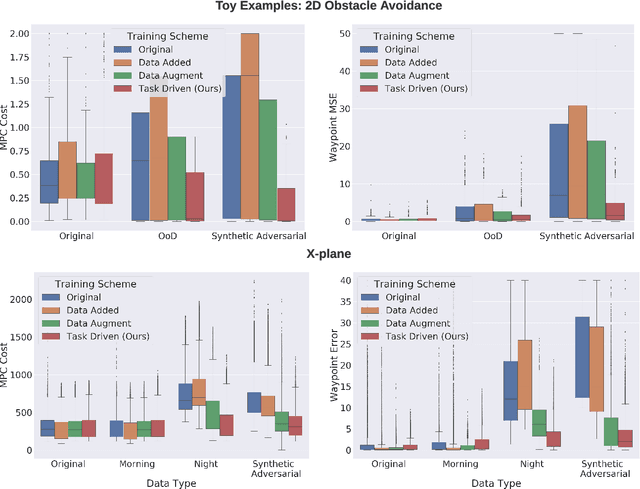

Today's robots often interface data-driven perception and planning models with classical model-based controllers. For example, drones commonly use computer vision models to estimate navigation waypoints that are then tracked by model predictive control (MPC). Such learned perception/planning models frequently produce erroneous waypoint predictions on out-of-distribution (OoD) or even adversarial visual inputs, and these errors increase control cost. However, today's methods for training robust perception models are largely task-agnostic: they augment a dataset using random image transformations or adversarial examples targeted at the vision model in isolation. As a result, they often introduce pixel perturbations that are ultimately benign for control while missing those that are most adversarial. In contrast to prior work that synthesizes adversarial examples for single-step vision tasks, our key contribution is to efficiently synthesize adversarial scenarios for multi-step, model-based control. To do so, we leverage differentiable MPC methods to calculate the sensitivity of a model-based controller to errors in state estimation, which in turn guides how we synthesize adversarial inputs. We show that re-training vision models on these adversarial datasets improves control performance on OoD test scenarios by up to 28.2% compared to standard task-agnostic data augmentation. We evaluate our approach on robotic navigation and on vision-based control of an autonomous air vehicle.
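
The central idea, propagating a control-cost gradient back through the controller to the perception input, can be illustrated on a toy problem. The sketch below is a hypothetical illustration, not the authors' implementation. It assumes a scalar single-integrator x_{t+1} = x_t + u_t, an unconstrained quadratic MPC that tracks an estimated waypoint w_hat, and a linear "perception model" w_hat = theta · features. Because this MPC is an unconstrained quadratic program, its optimal plan is linear in w_hat, so the sensitivity du*/dw_hat follows directly from the optimality condition (a special case of the implicit differentiation used by differentiable MPC). The control cost, measured against the true waypoint, then drives one FGSM-style step on the input features. All function names and parameter values are made up for illustration.

```python
"""Toy sketch of task-driven adversarial perturbation via MPC sensitivity.

Hypothetical setting (not the paper's): scalar dynamics x_{t+1} = x_t + u_t,
an unconstrained tracking MPC over horizon T, and a linear perception model
w_hat = theta . features that estimates the waypoint.
"""


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - acc) / M[r][r]
    return x


def mpc_sensitivity(T, rho):
    """s = du*/dw_hat for MPC minimizing sum_t (x_t - w_hat)^2 + rho * sum_t u_t^2.

    With L the lower-triangular ones matrix (x = x0 + L u), the optimality
    condition is (L^T L + rho I) u* = (w_hat - x0) L^T 1, so u* = (w_hat - x0) s.
    """
    H = [[T - max(i, j) + (rho if i == j else 0.0) for j in range(T)] for i in range(T)]
    return solve(H, [float(T - k) for k in range(T)])


def rollout(x0, u):
    """Return states x_1..x_T of x_{t+1} = x_t + u_t."""
    xs, x = [], x0
    for ut in u:
        x += ut
        xs.append(x)
    return xs


def mpc_plan(x0, w_hat, s):
    """MPC controls computed from the (possibly wrong) waypoint estimate."""
    return [(w_hat - x0) * sk for sk in s]


def task_cost(x0, u, w_true, rho):
    """Control cost of plan u, measured against the TRUE waypoint."""
    return sum((x - w_true) ** 2 for x in rollout(x0, u)) + rho * sum(v * v for v in u)


def cost_grad_wrt_estimate(x0, w_hat, w_true, T, rho):
    """Chain rule: dJ/dw_hat = (dJ/du) . (du*/dw_hat)."""
    s = mpc_sensitivity(T, rho)
    u = mpc_plan(x0, w_hat, s)
    r = [x - w_true for x in rollout(x0, u)]
    # x_{t+1} depends on u_k for all k <= t, so dJ/du_k sums residuals from k on.
    grad_u = [2.0 * sum(r[k:]) + 2.0 * rho * u[k] for k in range(T)]
    return sum(g * sk for g, sk in zip(grad_u, s))


def _sign(v):
    return (v > 0) - (v < 0)


def task_driven_fgsm(features, theta, x0, w_true, T, rho, eps):
    """One FGSM-style step on the features that increases the control cost.

    For the linear perception model, dJ/dfeatures = dJ/dw_hat * theta.
    """
    w_hat = sum(t * f for t, f in zip(theta, features))
    g = cost_grad_wrt_estimate(x0, w_hat, w_true, T, rho)
    return [f + eps * _sign(g * t) for f, t in zip(features, theta)]


if __name__ == "__main__":
    T, rho, x0, w_true = 5, 0.1, 0.0, 1.0
    theta = [0.5, 0.3, 0.2]
    feats = [1.1, 1.0, 0.9]  # slightly biased input: w_hat = 1.03
    adv = task_driven_fgsm(feats, theta, x0, w_true, T, rho, eps=0.05)
    s = mpc_sensitivity(T, rho)
    for name, f in [("clean", feats), ("adversarial", adv)]:
        w_hat = sum(t * v for t, v in zip(theta, f))
        print(name, round(w_hat, 3), task_cost(x0, mpc_plan(x0, w_hat, s), w_true, rho))
```

In this toy, dJ/dw_hat vanishes when the estimate is exact, because the plan is then already optimal for the true cost. The gradient is informative only around an imperfect estimate, which is why the example starts from slightly biased features. With a scalar estimate the cost gradient simply grows the waypoint error. Weighting by controller sensitivity matters more when the estimate has several components that the controller penalizes unequally.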