Talking Back -- human input and explanations to interactive AI systems


Mar 06, 2025

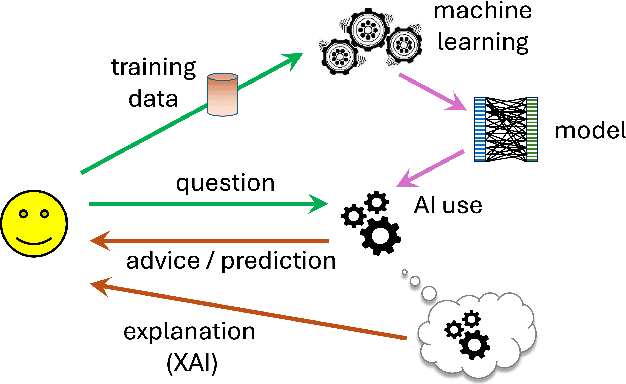

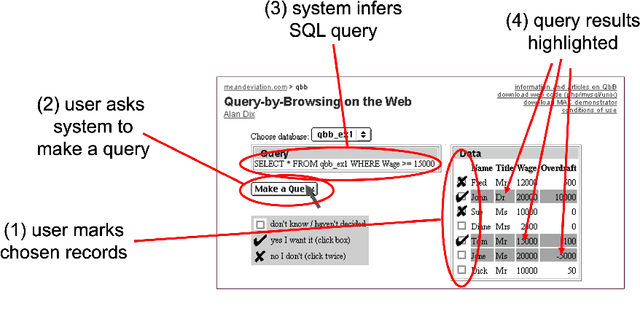

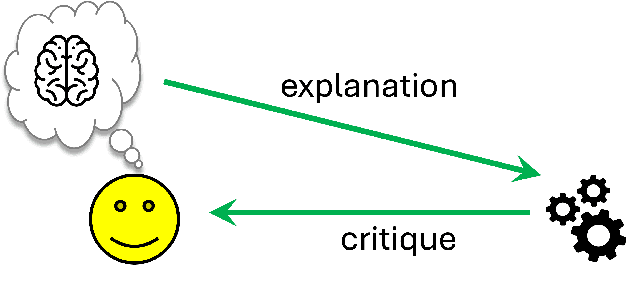

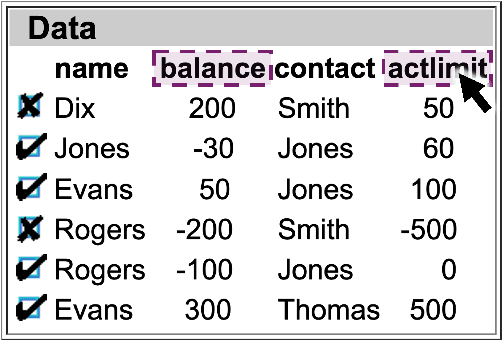

While explainable AI (XAI) focuses on providing AI explanations to humans, can the reverse, in which humans explain their judgments to AI, foster richer, synergistic human-AI systems? This paper explores various forms of human input to AI and examines how human explanations can guide machine learning models toward automated judgments and explanations that align more closely with human concepts.
