Synaptic Plasticity Dynamics for Deep Continuous Local Learning

Paper and Code

Nov 27, 2018

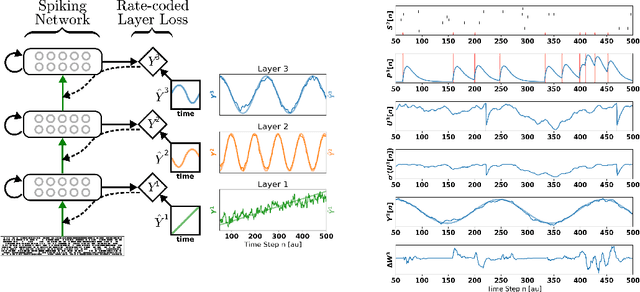

A growing body of work underlines striking similarities between spiking neural networks modeling biological networks and recurrent, binary neural networks. A comparatively smaller body of work, however, discusses the similarities between the learning dynamics employed in deep artificial neural networks and synaptic plasticity in spiking neural networks. The main obstacle is the discrepancy between the dynamical properties of synaptic plasticity and the requirements of gradient backpropagation. Here, we demonstrate that deep learning algorithms that approximate the gradient backpropagation updates using locally synthesized gradients overcome this challenge. Locally synthesized gradients were initially proposed to decouple one or more layers from the rest of the network so as to improve parallelism. Here, we exploit these properties to derive gradient-based learning rules in spiking neural networks. Our approach results in highly efficient spiking neural networks and synaptic plasticity capable of training deep neural networks. Furthermore, our method utilizes existing automatic differentiation methods in machine learning frameworks to systematically derive synaptic plasticity rules from task-relevant cost functions and neural dynamics. We benchmark our approach on the MNIST and DVS Gestures datasets, and report state-of-the-art results on the latter. Our results provide continuously learning machines that are not only relevant to biology, but also suggestive of a brain-inspired computer architecture that matches the performance of GPUs on target tasks.
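The idea of a layer-local, gradient-based plasticity rule can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the sigmoid surrogate derivative, the squared-error local loss, the fixed random readout `G`, and all sizes and constants are assumptions chosen for illustration. The layer reads out its own spikes through `G`, and the resulting error is combined with a surrogate derivative of the spike threshold and a presynaptic trace, so the update uses only quantities available at that layer.

```python
import numpy as np

def surrogate_grad(u):
    """Sigmoid derivative, standing in for the derivative of the spike threshold (assumption)."""
    s = 1.0 / (1.0 + np.exp(-u))
    return s * (1.0 - s)

def local_update(W, G, trace, target, lr=0.01):
    """One local gradient step on the layer loss 0.5 * ||G @ s - target||^2."""
    u = W @ trace                    # membrane potential driven by filtered inputs
    s = (u > 0).astype(float)        # spikes from a hard threshold at zero
    y = G @ s                        # fixed random readout of this layer only
    err = G.T @ (y - target)         # readout error projected back onto each neuron
    # Per-synapse update: error * surrogate derivative * presynaptic trace
    dW = np.outer(err * surrogate_grad(u), trace)
    loss = 0.5 * np.sum((y - target) ** 2)
    return W - lr * dW, loss

# Hypothetical sizes and inputs, for illustration only
rng = np.random.default_rng(0)
n_in, n_hid, n_out = 20, 10, 3
W = rng.normal(0.0, 0.5, (n_hid, n_in))   # trained weights
G = rng.normal(0.0, 0.5, (n_out, n_hid))  # fixed readout, never trained
target = np.array([1.0, 0.0, 0.0])
trace = np.zeros(n_in)

for t in range(100):
    spikes_in = (rng.random(n_in) < 0.2).astype(float)  # random input spikes
    trace = 0.9 * trace + spikes_in                     # leaky presynaptic trace
    W, loss = local_update(W, G, trace, target)         # learn at every time step
```

Because the update is applied at every time step and needs no error signal from other layers, several such layers could in principle be stacked and trained in parallel, each with its own readout. An automatic differentiation framework could derive `dW` from the local loss instead of the hand-written expression used here.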
