SYMPLEX: Controllable Symbolic Music Generation using Simplex Diffusion with Vocabulary Priors

May 21, 2024

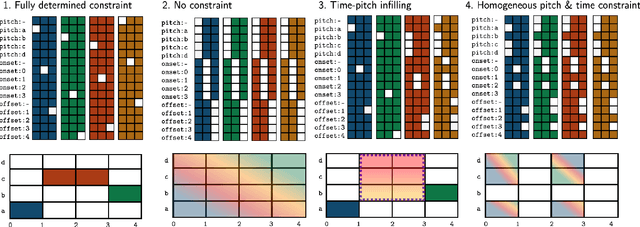

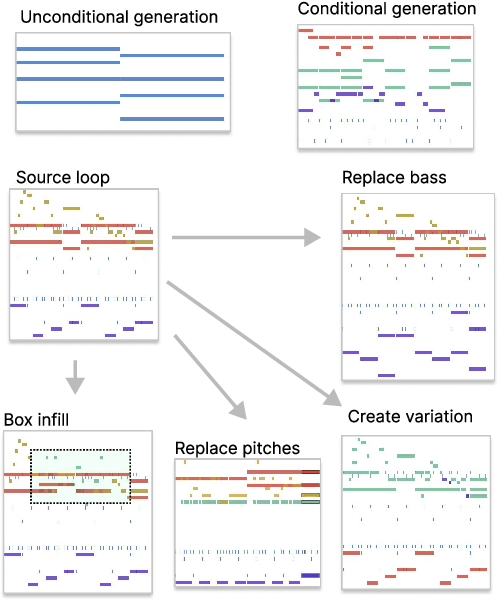

We present a new approach for fast and controllable generation of symbolic music based on simplex diffusion, which is essentially a diffusion process operating on probabilities rather than the signal space. This objective has been applied in domains such as natural language processing; here we apply it to generating 4-bar multi-instrument music loops using an orderless representation. We show that our model can be steered with vocabulary priors, which affords a considerable level of control over the music generation process, for instance infilling in time and pitch and choice of instrumentation, all without task-specific model adaptation or extrinsic control.
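To make the idea of steering with a vocabulary prior concrete, here is a minimal sketch and not the paper's implementation. It assumes that a prior is a vector of non-negative weights over the token vocabulary, and that it is applied by multiplying it elementwise with a probability vector on the simplex and renormalizing. The function name `apply_vocabulary_prior`, the four-token instrument vocabulary, and the probability values are all hypothetical.

```python
def apply_vocabulary_prior(probs, prior):
    """Reweight a probability vector by a prior and renormalize.

    Assumption for illustration: the prior holds non-negative weights,
    where 0.0 forbids a token and 1.0 leaves it unconstrained.
    """
    if len(probs) != len(prior):
        raise ValueError("probs and prior must have the same length")
    weighted = [p * w for p, w in zip(probs, prior)]
    total = sum(weighted)
    if total <= 0:
        raise ValueError("prior removes all probability mass")
    # Dividing by the total puts the result back on the simplex.
    return [w / total for w in weighted]


# Hypothetical instrument vocabulary and model output (illustrative values).
vocab = ["piano", "drums", "bass", "guitar"]
model_probs = [0.4, 0.3, 0.2, 0.1]

# Prior that allows only piano and bass.
prior = [1.0, 0.0, 1.0, 0.0]

constrained = apply_vocabulary_prior(model_probs, prior)
for token, p in zip(vocab, constrained):
    print(f"{token}: {p:.3f}")
```

Under these assumptions, the same mechanism could express infilling (a prior that pins known tokens and leaves the rest open) or a choice of instrumentation (a prior that zeroes out unwanted instruments) without changing the model itself.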
