Symmetry-Aware Autoencoders: s-PCA and s-nlPCA


Nov 10, 2021

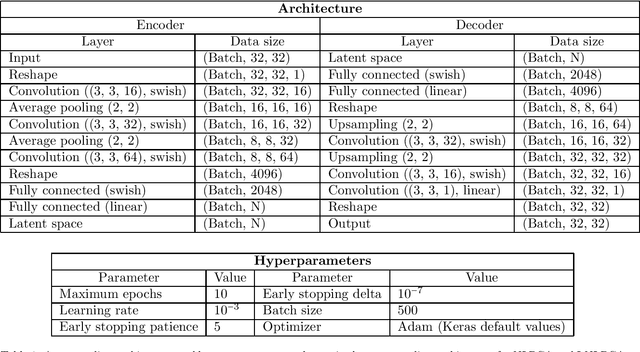

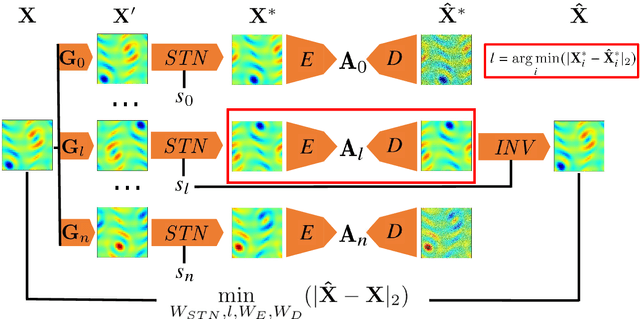

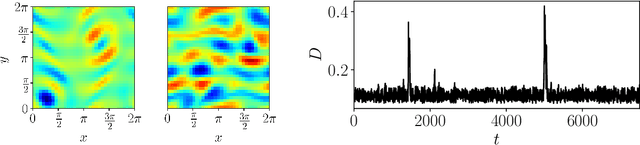

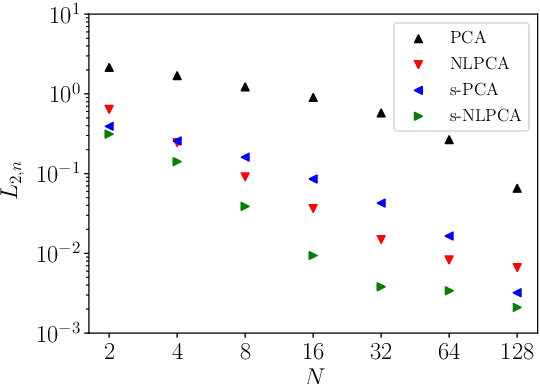

Nonlinear principal component analysis (nlPCA) via autoencoders has attracted attention in the dynamical systems community because it achieves a higher compression rate than linear principal component analysis (PCA). Both model reduction methods, however, need a higher-dimensional latent space when applied to datasets that exhibit globally invariant samples due to the presence of symmetries. In this study, we introduce a novel machine learning embedding in the autoencoder, which uses spatial transformer networks and Siamese networks to account for continuous and discrete symmetries, respectively. The spatial transformer network discovers the optimal shift for the continuous translation or rotation so that invariant samples are aligned in the periodic directions. Similarly, the Siamese networks collapse samples that are invariant under discrete shifts and reflections. The proposed symmetry-aware autoencoder is therefore invariant to the predetermined input transformations that dictate the dynamics of the underlying physical system. This embedding can be employed with both linear and nonlinear reduction methods, which we term symmetry-aware PCA (s-PCA) and symmetry-aware nlPCA (s-nlPCA). We apply the proposed framework to three fluid flow problems: Burgers' equation, the simulation of the flow through a step diffuser, and the Kolmogorov flow. Together these showcase its capabilities for cases exhibiting only continuous symmetries, only discrete symmetries, or a combination of both.
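To illustrate why aligning translated samples shrinks the latent space, here is a minimal NumPy sketch. It is not the paper's method: the learned spatial transformer network is replaced by a fixed rule that shifts each sample until the phase of its first Fourier mode is zero, and it covers only a continuous translation, not the discrete symmetries handled by the Siamese networks. The test profile, the sample counts, and the helper names `profile`, `align_first_mode` and `n_pca_modes` are all assumptions made for this example.

```python
import numpy as np

def profile(x):
    # Hypothetical band-limited test profile (wavenumbers 1 to 3 only).
    return np.cos(x) + 0.5 * np.cos(2 * x) + 0.3 * np.sin(3 * x)

def align_first_mode(u):
    """Shift each periodic sample so its wavenumber-1 Fourier phase is zero.

    Stand-in for a learned shift: the alignment rule here is fixed, not trained.
    """
    n = u.shape[-1]
    u_hat = np.fft.fft(u, axis=-1)
    phase = np.angle(u_hat[:, 1])            # phase of the first Fourier mode
    k = np.fft.fftfreq(n, d=1.0 / n)         # integer wavenumbers
    # Multiplying mode k by exp(-i k phase) translates the sample in x.
    shifted = u_hat * np.exp(-1j * np.outer(phase, k))
    return np.fft.ifft(shifted, axis=-1).real

def n_pca_modes(data, rel_tol=1e-8):
    """Count the non-negligible singular values of the mean-centered data."""
    centered = data - data.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))

# Synthetic dataset: one profile with random amplitude and random translation.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
shift = rng.uniform(0.0, 2.0 * np.pi, size=200)
amp = rng.uniform(0.5, 1.5, size=200)
snapshots = amp[:, None] * profile(x[None, :] - shift[:, None])

aligned = align_first_mode(snapshots)
n_raw = n_pca_modes(snapshots)      # PCA modes needed without alignment
n_aligned = n_pca_modes(aligned)    # PCA modes needed after alignment
print(n_raw, n_aligned)
```

In this toy setting the only true degree of freedom besides the shift is the amplitude, so the aligned data should need a single PCA mode, while the raw data spreads each of the three wavenumbers over a sine and a cosine component. A fixed phase rule like this fails when the first Fourier mode is close to zero, which is one reason a learned alignment is attractive.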
