Survey on Modeling Intensity Function of Hawkes Process Using Neural Models

Apr 22, 2021

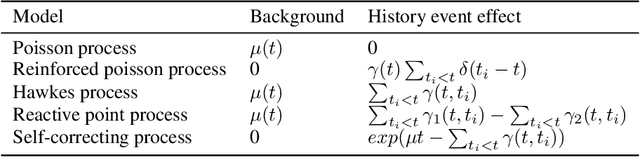

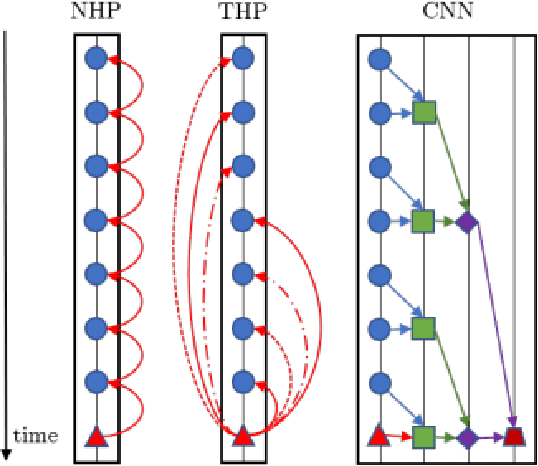

Many diverse systems produce event sequences, that is, sequences of discrete events in a continuous space. Examples include earthquake aftershocks, financial transactions, e-commerce transactions, a user's social network activity, and a user's web search pattern. Uncovering the intricate patterns in such sequences helps predict which event will occur in the future and when it will occur. A Hawkes process is a mathematical tool for modeling such discrete event sequences. Traditionally, the Hawkes process models data through its critical component, an intensity function with a parameterized kernel function. The intensity function involves two components: the background intensity and the effect of the event history. However, such a parametric assumption cannot precisely capture future event characteristics from past event data, because the assumed form of the kernel function introduces bias. This paper surveys recent advances that use deep learning-based methods to model the kernel function, removing the need for such a parameterized kernel. Finally, we give potential future research directions for improving modeling with the Hawkes process.
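To make the two components of the intensity function concrete, the following is a minimal sketch of a traditional Hawkes intensity with a parameterized kernel. It assumes an exponential decay kernel, which is one common choice and is not specified in the abstract; the function name `hawkes_intensity` and the parameter values `mu`, `alpha`, and `beta` are illustrative assumptions, not taken from the paper.

```python
import math

def hawkes_intensity(t, history, mu=0.5, alpha=0.8, beta=1.0):
    """Conditional intensity at time t given past event times.

    Assumed form (exponential kernel, chosen for illustration only):
        lambda(t) = mu + sum over t_i < t of alpha * exp(-beta * (t - t_i))
    mu    : background intensity
    alpha : jump in intensity caused by each past event
    beta  : decay rate of each event's influence
    """
    # Effect of the event history: only events strictly before t contribute.
    excitation = sum(
        alpha * math.exp(-beta * (t - t_i)) for t_i in history if t_i < t
    )
    # Total intensity = background intensity + history effect.
    return mu + excitation

# Hypothetical event times, used only to exercise the function.
events = [1.0, 2.5, 3.0]

before_any_event = hawkes_intensity(0.5, events)   # background only
after_one_event = hawkes_intensity(2.0, events)    # background + one decayed jump
print(before_any_event, after_one_event)
```

The fixed functional form of the kernel here (`alpha * exp(-beta * dt)`) is the kind of parametric assumption that the surveyed neural methods aim to replace with a learned function.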
